Why Moonshot AI & Kimi Matters for Modern AI Strategy

Kimi is one of the fastest-rising AI model families in the world. It is built by the Chinese startup Moonshot AI, founded by Yang Zhilin, and the company went from zero to a 1-trillion-parameter model that competes directly with OpenAI, Anthropic, and Google.

Quick Overview: What You Need to Know

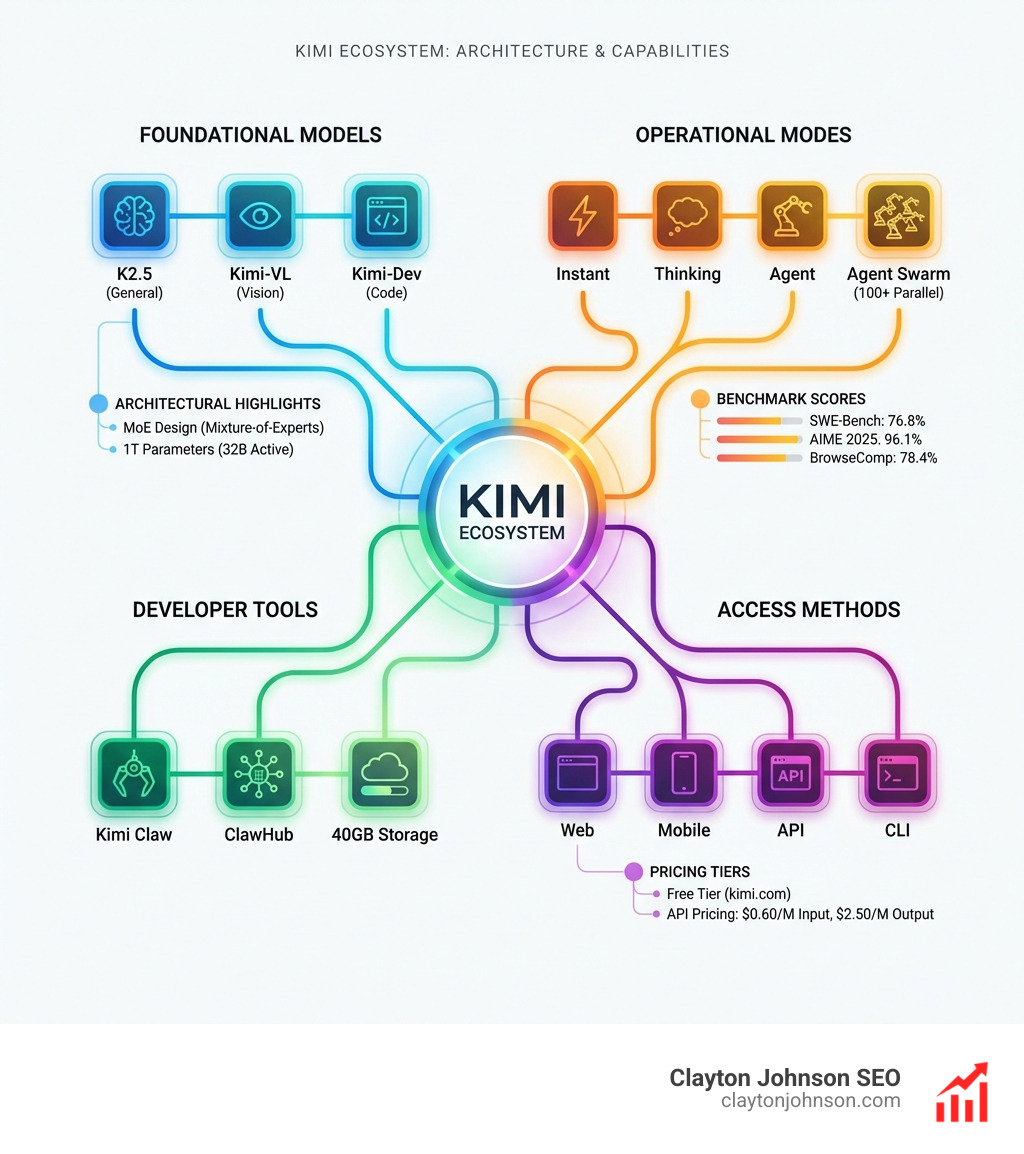

| Topic | Summary |
|---|---|
| What is it? | Kimi is an AI chatbot and LLM series known for very long context windows (128K tokens at launch, up to 2M characters in closed beta) and agentic capabilities |
| Who built it? | Moonshot AI, a Chinese company founded by Tsinghua alumni |
| Key models | Kimi K2.5 (1T parameters, 32B active), Kimi-Dev (coding), Kimi-VL (vision), Kimi-Researcher (research) |
| What makes it unique? | Mixture-of-Experts architecture, Agent Swarm (100 parallel agents), native multimodal integration, open-source strategy |
| Access | Free tier via kimi.com, API pricing at $0.60/M input tokens, $2.50/M output tokens |
| Benchmark highlights | 76.8% SWE-Bench, 96.1% AIME, 78.4% BrowseComp with Agent Swarm |

Moonshot AI shows that algorithmic efficiency can compete with brute-force capital. The company reportedly trained Kimi K2 for a fraction of what some frontier models are estimated to cost by using synthetic data pipelines, self-critique reward systems, and sparse expert activation. The result is a model that activates only 32 billion of its 1 trillion parameters per request, so about 96.8% of the weights stay idle on any single forward pass.

The strategic implications are clear: long-context processing, agentic workflows, and multimodal vision capabilities are no longer exclusive to US frontier labs. Kimi supports up to 300 sequential tool calls in Agent mode, processes 1 million rows of data via OK Computer, and offers 5,000+ community-contributed skills through ClawHub. This is infrastructure-level AI, not incremental improvement.

I’m Clayton Johnson, and I’ve spent years helping founders and marketing leaders architect scalable demand systems that compound over time. Kimi is exactly the kind of strategic shift that requires structural rethinking, not tactical adjustment. This guide breaks down how Kimi’s architecture, operational modes, and agentic ecosystem position it against global competitors, and what it means for teams building AI-powered workflows.

The Rise of Moonshot AI & Kimi

Moonshot AI didn’t just join the AI race; it sprinted to the front. Founded by Yang Zhilin, Zhou Xinyu, and Wu Yuxin, the company was born out of a conviction that the “wave” of generative AI was only just beginning. Yang, a Carnegie Mellon PhD who co-authored foundational papers on Transformer-XL and XLNet, brought a deep technical pedigree from Meta AI and Google Brain to the table.

The company’s headquarters in China quickly became a hub for “long-context” innovation. While other models struggled to remember the beginning of a long conversation, Moonshot AI made “ultra-long context” Kimi’s North Star. The first version of Kimi supported 128,000 tokens. It later expanded to a 2-million-character context window in closed beta. This wasn’t just a gimmick; it was a solution for students, researchers, and developers who needed to process entire textbooks or massive codebases without losing the thread.

You can explore their journey further at the Moonshot AI official website.

Evolution of the Kimi Model Series

The development history of Kimi is a masterclass in rapid iteration. After the initial chatbot success, Moonshot launched a series of specialized models designed to tackle specific industry pain points:

- Kimi K2 & K2.5: The flagship models. K2.5 is a 1-trillion parameter Mixture-of-Experts (MoE) powerhouse that maintains efficiency by only activating a fraction of its parameters at any given time.

- Kimi-Dev: A 72-billion parameter model focused specifically on coding, designed to rival the best in the business.

- Kimi-VL: A 16-billion parameter multimodal model (3B active) that handles text, images, and video with ease.

- Kimi-Researcher: An autonomous agent designed for end-to-end deep research tasks using reinforcement learning.

- Kimi Linear: A 48-billion parameter model (3B active) optimized for speed and efficiency.

Perhaps most importantly, Moonshot AI adopted a strategic open-source approach for many of these models, releasing weights for Kimi-VL and Kimi K2 on Hugging Face to accelerate global adoption.

Market Positioning and Global Competition

In the global arena, Moonshot AI & Kimi is positioned as a “disruptor” that offers frontier-level performance at a fraction of the cost. While OpenAI and Anthropic have historically dominated the conversation, Moonshot has proven that it can match or even beat these giants in specific benchmarks, particularly in coding and agentic tasks.

According to press reports, Alibaba-backed Moonshot has released a new Kimi model that rivals the top-tier versions of ChatGPT and Claude. This competition is part of a broader “war of the AI Tigers” in China, where companies like DeepSeek and MiniMax are also pushing the boundaries of what open-source and proprietary models can do.

Inside the 1-Trillion Parameter Architecture

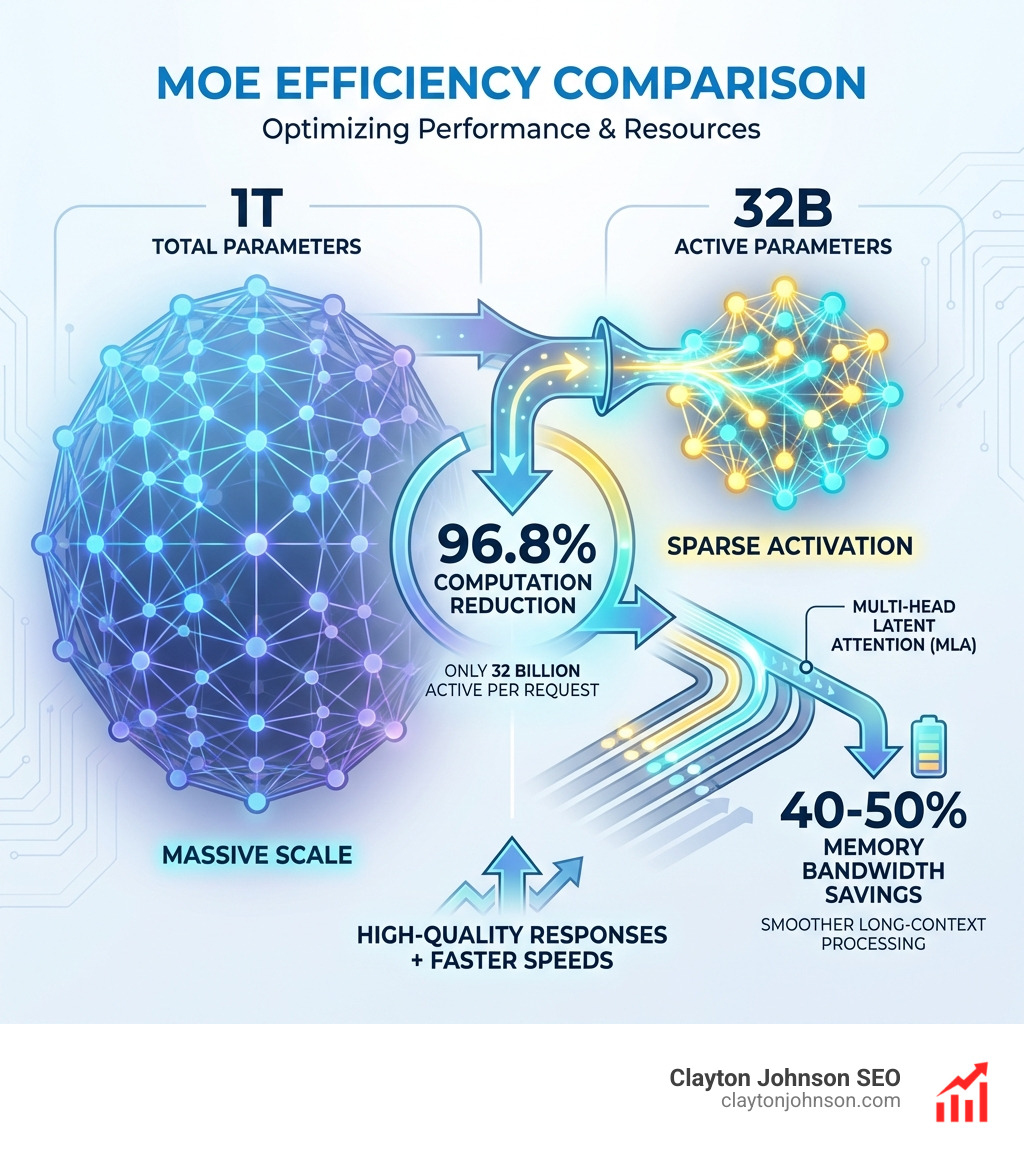

The secret sauce behind Kimi’s performance is its Mixture-of-Experts (MoE) design. Rather than running a massive, dense 1-trillion-parameter model for every query, which would be prohibitively expensive and slow, Kimi uses “sparse activation.”

Out of the 1 trillion total parameters in Kimi K2.5, only 32 billion are active during any single request. That is 3.2% of the weights, leaving 96.8% idle, so the compute per token is closer to that of a 32-billion-parameter dense model. This allows the model to deliver high-quality responses with the speed of a much smaller model.
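To make the sparse activation described above concrete, here is a minimal sketch in plain Python. It checks the 32B-of-1T arithmetic and shows a generic top-k router, the usual mechanism behind MoE layers. The expert count (16), `k=2`, and the function names are illustrative assumptions, not Kimi’s actual configuration or code.

```python
import math
import random

TOTAL_PARAMS = 1_000_000_000_000   # 1T total parameters (figure from the article)
ACTIVE_PARAMS = 32_000_000_000     # 32B active per request (figure from the article)


def active_fraction(active: int, total: int) -> float:
    """Share of parameters that take part in a single forward pass."""
    return active / total


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax over router scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    norm = sum(exps)
    return [e / norm for e in exps]


def route_top_k(router_scores: list[float], k: int) -> dict[int, float]:
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    mass = sum(probs[i] for i in chosen)
    return {i: probs[i] / mass for i in chosen}


if __name__ == "__main__":
    frac = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
    print(f"active: {frac:.1%}, idle: {1 - frac:.1%}")  # active: 3.2%, idle: 96.8%

    rng = random.Random(0)
    # Hypothetical router: 16 experts, 2 selected per token.
    scores = [rng.gauss(0.0, 1.0) for _ in range(16)]
    print(route_top_k(scores, k=2))
```

Only the selected experts run for a given token, which is why per-request compute tracks the active parameter count rather than the total.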

To further optimize performance, Kimi utilizes:

- Multi-Head Latent Attention (MLA): This mechanism cuts memory bandwidth by 40-50%, making long-context processing significantly smoother.

- Muon Optimizer: An optimizer that orthogonalizes momentum updates for weight matrices, which Moonshot uses to make LLM training more scalable and efficient.

- Kimi Delta Attention (KDA): A proprietary innovation that enhances the model’s ability to focus on relevant information within massive context windows.

Native Multimodal Integration in Moonshot AI & Kimi

Unlike models that “glue” a vision adapter onto a text-based foundation, Kimi K2.5 was trained natively on 15 trillion tokens of mixed visual and textual data. This means it understands the relationship between images and text at a fundamental level.

The MoonViT encoder features 400 million dedicated parameters for vision, enabling features like:

- Image-to-Code: Generating production-ready React or HTML directly from a UI mockup.

- Video Understanding: Processing temporal data to reconstruct websites or explain complex scenes.

- Visual Debugging: Comparing a rendered output against an original design and generating corrective edits autonomously.

Production Efficiency and Quantization

Efficiency isn’t just about training; it’s about deployment. Kimi K2.5 uses native INT4 quantization, which Moonshot reports delivers roughly a 2x speed improvement with little to no loss in accuracy. The smaller weights also make the model more flexible to deploy, including local hosting for organizations with strict data privacy needs.
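For readers unfamiliar with INT4, the sketch below shows what storing weights in 4 bits means. It uses plain round-to-nearest symmetric quantization with one shared scale, which is an assumption chosen for simplicity. It is not Moonshot’s method, and all function names are hypothetical.

```python
def quantize_int4(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to signed 4-bit integers in [-8, 7] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    quantized = [max(-8, min(7, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int4(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the 4-bit codes."""
    return [q * scale for q in quantized]


def pack_nibbles(quantized: list[int]) -> bytes:
    """Store two 4-bit values per byte, a 4x size cut versus 16-bit weights."""
    padded = quantized + [0] * (len(quantized) % 2)
    out = bytearray()
    for lo, hi in zip(padded[0::2], padded[1::2]):
        out.append((lo & 0xF) | ((hi & 0xF) << 4))
    return bytes(out)


if __name__ == "__main__":
    weights = [0.82, -0.31, 0.05, -0.77, 0.40, 0.0]
    codes, scale = quantize_int4(weights)
    restored = dequantize_int4(codes, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print(codes, f"max error {worst:.4f}", len(pack_nibbles(codes)), "bytes")
```

The rounding error per weight is bounded by half the scale, which is why production schemes typically use small per-group scales rather than one scale for a whole tensor.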

For those looking for the technical details of Kimi K2, the model’s architecture is designed to be “production-ready” from day one, supporting high-concurrency environments without the massive overhead typically associated with trillion-parameter models.

Operational Modes: From Instant to Agent Swarm

One of the most user-friendly aspects of Moonshot AI & Kimi is its distinct operational modes. Users can toggle between these modes depending on whether they need a quick answer or a deep, multi-step investigation.

- Instant Mode: Optimized for speed. Responses typically arrive in 3-8 seconds, and token consumption is cut by 60-75% compared to more intensive modes.

- Thinking Mode: Best for math, logic, and complex reasoning. The model generates an internal “reasoning trace” to ensure accuracy in multi-step problems.

- Agent Mode: Designed for autonomous workflows. Kimi can execute 200-300 sequential tool calls (like searching the web, running code, or reading files) to complete a complex task without losing coherence.
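The sequential tool calling in Agent Mode can be pictured as a loop with a step budget. The sketch below is a simplified illustration under stated assumptions: `demo_plan` stands in for the model’s decision making, the three tools are stubs, and none of these names come from Kimi’s API.

```python
from typing import Callable, Dict, List, Optional, Tuple

MAX_STEPS = 300  # upper end of the 200-300 tool-call range cited above

Tool = Callable[[str], str]
Planner = Callable[[str, List[str]], Optional[Tuple[str, str]]]


def run_agent(task: str, plan: Planner, tools: Dict[str, Tool],
              max_steps: int = MAX_STEPS) -> List[str]:
    """Call tools one after another until the planner stops or the budget runs out."""
    history: List[str] = []
    for _ in range(max_steps):
        decision = plan(task, history)
        if decision is None:  # planner signals that the task is complete
            break
        tool_name, argument = decision
        result = tools[tool_name](argument)
        history.append(f"{tool_name}({argument}) -> {result}")
    return history


def demo_plan(task: str, history: List[str]) -> Optional[Tuple[str, str]]:
    """Hypothetical planner: a fixed three-step script instead of a real model."""
    steps = [("search", task), ("read_file", "notes.txt"), ("run_code", "1 + 1")]
    return steps[len(history)] if len(history) < len(steps) else None


# Stub tools; real ones would hit the web, a sandbox, or a file store.
demo_tools: Dict[str, Tool] = {
    "search": lambda query: f"3 results for {query!r}",
    "read_file": lambda path: f"contents of {path}",
    "run_code": lambda source: f"ran {source}",
}

if __name__ == "__main__":
    for line in run_agent("long-context benchmarks", demo_plan, demo_tools):
        print(line)
```

Passing the full history back to the planner on every step is what lets the agent stay coherent across a long chain of calls, and it is also why long context matters for agentic work.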

Scaling Intelligence with the Moonshot AI & Kimi Agent Swarm

The real “moonshot” feature is the Agent Swarm. Instead of one agent working through a list of tasks one by one, the Agent Swarm can coordinate up to 100 sub-agents simultaneously.

Imagine you need to research top YouTube creators across 100 different niches. A standard AI would search for them one by one. Kimi’s Agent Swarm spawns 100 specialists, assigns one niche to each, and then synthesizes the results. In the ideal case, where every subtask is independent and takes about 50 seconds, 5,000 seconds of sequential work finishes in roughly 50 seconds. Real workloads include serial steps such as planning and synthesis, so the reported gain for parallelizable tasks is closer to a 4.5x reduction in execution time.
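The fan-out-then-synthesize pattern in the YouTube example can be sketched with `asyncio`. Here `sub_agent` is a hypothetical stand-in whose sleep replaces real search and model calls. The `amdahl_speedup` helper applies Amdahl’s law to show why 100 workers give far less than a 100x gain once part of the job is serial; the 0.786 parallel fraction is back-solved from the 4.5x figure for illustration, not a published number.

```python
import asyncio


async def sub_agent(niche: str) -> str:
    """Hypothetical specialist; the sleep stands in for search and model calls."""
    await asyncio.sleep(0.01)
    return f"top creators in {niche}"


async def run_swarm(niches: list[str], max_parallel: int = 100) -> list[str]:
    """Fan out one sub-agent per niche, capped at max_parallel concurrent runs."""
    gate = asyncio.Semaphore(max_parallel)

    async def guarded(niche: str) -> str:
        async with gate:
            return await sub_agent(niche)

    # gather preserves input order, so results line up with niches.
    return await asyncio.gather(*(guarded(n) for n in niches))


def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Amdahl's law: the serial share limits the gain from adding workers."""
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / workers)


if __name__ == "__main__":
    niches = [f"niche-{i}" for i in range(100)]
    reports = asyncio.run(run_swarm(niches))
    print(len(reports), "reports;", reports[0])
    print(f"{amdahl_speedup(1.0, 100):.1f}x if fully parallel")
    print(f"{amdahl_speedup(0.786, 100):.1f}x if about 79% of the work parallelizes")
```

Under these assumptions, a swarm of 100 reaches the 100x ceiling only when nothing is serial; with roughly a fifth of the work on the critical path, the speedup drops to about 4.5x.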

This is powered by Parallel-Agent Reinforcement Learning (PARL), a training method that incentivizes the model to balance quality with “critical path efficiency.”

The Agentic Ecosystem: Kimi Claw and Developer Tools

For developers and data scientists, the browser tab is no longer just a place to chat—it’s a development environment. Kimi Claw (a rebranded version of the OpenClaw framework) provides a persistent, 24/7 AI agent environment native to the Kimi platform.

Key features of the Kimi Claw ecosystem include:

- ClawHub: A library of over 5,000 community-contributed skills that allow agents to interact with external tools.

- 40GB Cloud Storage: Dedicated space for storing large datasets, code repositories, and technical docs, facilitating high-volume Retrieval-Augmented Generation (RAG).

- Pro-Grade Search: Real-time data integration that fetches live info from sources like Yahoo Finance to eliminate the “knowledge cutoff” problem.

Specialized Agents for Research and Development

Moonshot AI has also introduced several specialized agentic tools:

- Kimi-Researcher: An end-to-end agent that performs deep research, capable of reading 500+ pages in a single search.

- OK Computer: An agentic feature capable of creating multi-page websites and editable slides from a simple prompt. It can process up to 1 million rows of input data at once.

- Kimi-Dev: A coding-focused model built to navigate and resolve real-world GitHub issues. Note that the 76.8% SWE-Bench figure cited in this guide is listed under Kimi K2.5 in the benchmark table below.

You can find more on Kimi K2.5 performance and vision capabilities on their official blog.

Benchmarking Kimi Against Global AI Giants

Does the performance match the hype? According to recent benchmarks, the answer is a resounding yes. Moonshot AI & Kimi consistently places at the top of charts for coding, math, and multimodal understanding.

| Benchmark | Kimi K2.5 Score | Competitor Context |
|---|---|---|
| AIME (Math) | 96.1% | Beats most frontier models |
| SWE-Bench (Coding) | 76.8% | High-tier performance for GitHub issue resolution |
| MMMU Pro (Multimodal) | 78.5% | Strong academic/visual understanding |
| BrowseComp (Agent) | 78.4% | Significant lead over human baseline (29.2%) |
| MathVision | 84.2% | Expert-level visual-math reasoning |

Kimi’s ability to maintain stability across hundreds of sequential tool calls makes it particularly effective for “Agentic” tasks where other models might “drift” or hallucinate. For the full technical breakdown, see Kimi K2: Open Agentic Intelligence.

Frequently Asked Questions about Moonshot AI & Kimi

Is Kimi AI free to use for individuals?

Yes, Kimi offers a free tier accessible via kimi.com and the Kimi mobile app. There are usage limits for the free tier, which can be expanded through subscription plans like Moderato, Allegretto, or Vivace. These plans offer higher usage limits, access to K2 Turbo, and faster generation speeds.

How does Kimi’s context window compare to other LLMs?

Kimi is a pioneer in long-context windows. While many models started with 8K or 32K tokens, Kimi launched with 128K and quickly moved to a 2 million character window. This remains one of the largest context windows available in a consumer-facing chatbot.

What are the pricing tiers for the Kimi API?

The Kimi API is designed to be highly competitive. Current pricing for Kimi K2.5 is $0.60 per million input tokens and $2.50 per million output tokens. This is significantly lower than many US-based frontier models, making it an attractive option for developers looking to scale AI-powered applications.
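To turn the per-million-token prices above into a budget figure, a small calculator is enough. The prices are the ones quoted in this guide; the function name and the example workload are illustrative assumptions.

```python
INPUT_USD_PER_MILLION = 0.60   # Kimi K2.5 input price quoted above
OUTPUT_USD_PER_MILLION = 2.50  # Kimi K2.5 output price quoted above


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimated API spend in USD for a given token volume."""
    total = (input_tokens * INPUT_USD_PER_MILLION
             + output_tokens * OUTPUT_USD_PER_MILLION)
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 2M input tokens and 400K output tokens.
    print(f"${estimate_cost_usd(2_000_000, 400_000):.2f}")  # $2.20
```

Output tokens cost roughly four times as much as input tokens at these rates, so long-document analysis with short answers is considerably cheaper than long-form generation.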

Conclusion

The “Dark Side” of Moonshot AI isn’t something to fear. It’s a new frontier of efficiency and agentic power. By prioritizing long-context memory and parallel processing through its Agent Swarm, Moonshot AI has established Kimi as a top-tier contender in the global AGI roadmap.

At Demandflow.ai, we believe that the biggest challenge companies face isn’t a lack of AI tools—it’s a lack of structured growth architecture. Just as Kimi uses an orchestrator to manage a swarm of agents, your business needs a structured strategy to leverage these powerful technologies effectively. Whether you are using Kimi for deep research, autonomous coding, or large-scale data processing, the key is to move beyond tactical “chatting” and toward integrated, AI-augmented workflows.

Moonshot AI has proven that clarity and structure can lead to compounding growth in the AI space. Now, it’s time for founders and marketing leaders to apply that same level of architectural thinking to their own demand systems.

For more insights on building SEO content marketing services and structured growth systems, we’re here to help you navigate this new landscape.