What is Firecrawl and Why is it Essential for AI Applications?

At its core, Firecrawl is an API service designed by the team at Mendable.ai to remove the friction between the messy, chaotic web and the high-quality data requirements of Large Language Models (LLMs). While the internet is a goldmine of information, most of it is trapped in “dirty” HTML—cluttered with ads, navigation bars, tracking scripts, and cookie banners.

For developers building AI applications, this noise is more than just a nuisance; it’s a cost. Every extra token of boilerplate you feed into an LLM is money wasted and context window space lost. Firecrawl solves this by providing “LLM-ready” data. This means it doesn’t just grab the code; it intelligently parses the page, removes the junk, and converts the core content into clean markdown.

Beyond just cleaning data, Firecrawl handles the technical hurdles that typically require a dedicated engineering team:

- Anti-bot Bypass: It navigates sophisticated security layers that block standard scrapers.

- Cloudflare Handling: It manages the “Under Attack” pages and challenges that often stop automated tools in their tracks.

- JavaScript Rendering: It uses headless browsing to ensure that content loaded dynamically via React, Vue, or other frameworks is fully captured.

For those looking to integrate these capabilities into a broader growth strategy, our SEO Services can help you leverage this data for market dominance.

Solving the “Messy HTML” Problem for LLMs

When you feed raw HTML into a vector database for a Retrieval-Augmented Generation (RAG) system, you’re essentially asking your AI to find a needle in a haystack of HTML tags and classes. This noise leads to lower retrieval accuracy and higher hallucination rates.

Firecrawl acts as a high-fidelity filter. It identifies the “main content” of a page and discards the rest. By optimizing the context window, your AI agents can process more relevant information in a single pass. This is a critical component of modern analytics and data strategies, where the quality of the input directly dictates the value of the output.
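To make the idea of a “main content” filter concrete, here is a deliberately tiny sketch using only Python’s standard library. It is not how Firecrawl works internally; the list of noise tags and the sample page are assumptions made up for illustration.

```python
from html.parser import HTMLParser

# Tags whose contents this toy example treats as boilerplate (an assumption).
NOISE_TAGS = {"nav", "header", "footer", "aside", "script", "style"}


class MainTextExtractor(HTMLParser):
    """Collects visible text while skipping anything nested in NOISE_TAGS."""

    def __init__(self):
        super().__init__()
        self.noise_depth = 0  # greater than 0 while inside a boilerplate element
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in NOISE_TAGS:
            self.noise_depth += 1

    def handle_endtag(self, tag):
        if tag in NOISE_TAGS and self.noise_depth > 0:
            self.noise_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self.noise_depth == 0:
            self.parts.append(text)


def extract_main_text(html: str) -> str:
    """Return the text that survives the boilerplate filter, one piece per line."""
    parser = MainTextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.parts)


# Hypothetical page: navigation, a tracking script, one article, a cookie footer.
SAMPLE_HTML = """
<html><body>
<nav><a href="/">Home</a> <a href="/pricing">Pricing</a></nav>
<script>trackVisitor();</script>
<article><h1>Release notes</h1><p>Version 2 adds batch export.</p></article>
<footer>Accept cookies</footer>
</body></html>
"""

clean = extract_main_text(SAMPLE_HTML)
print(clean)
print(f"raw: {len(SAMPLE_HTML)} chars, clean: {len(clean)} chars")
```

Even on this small page, most of the characters are navigation, scripts, and footer text that an LLM would otherwise have to read and pay for.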

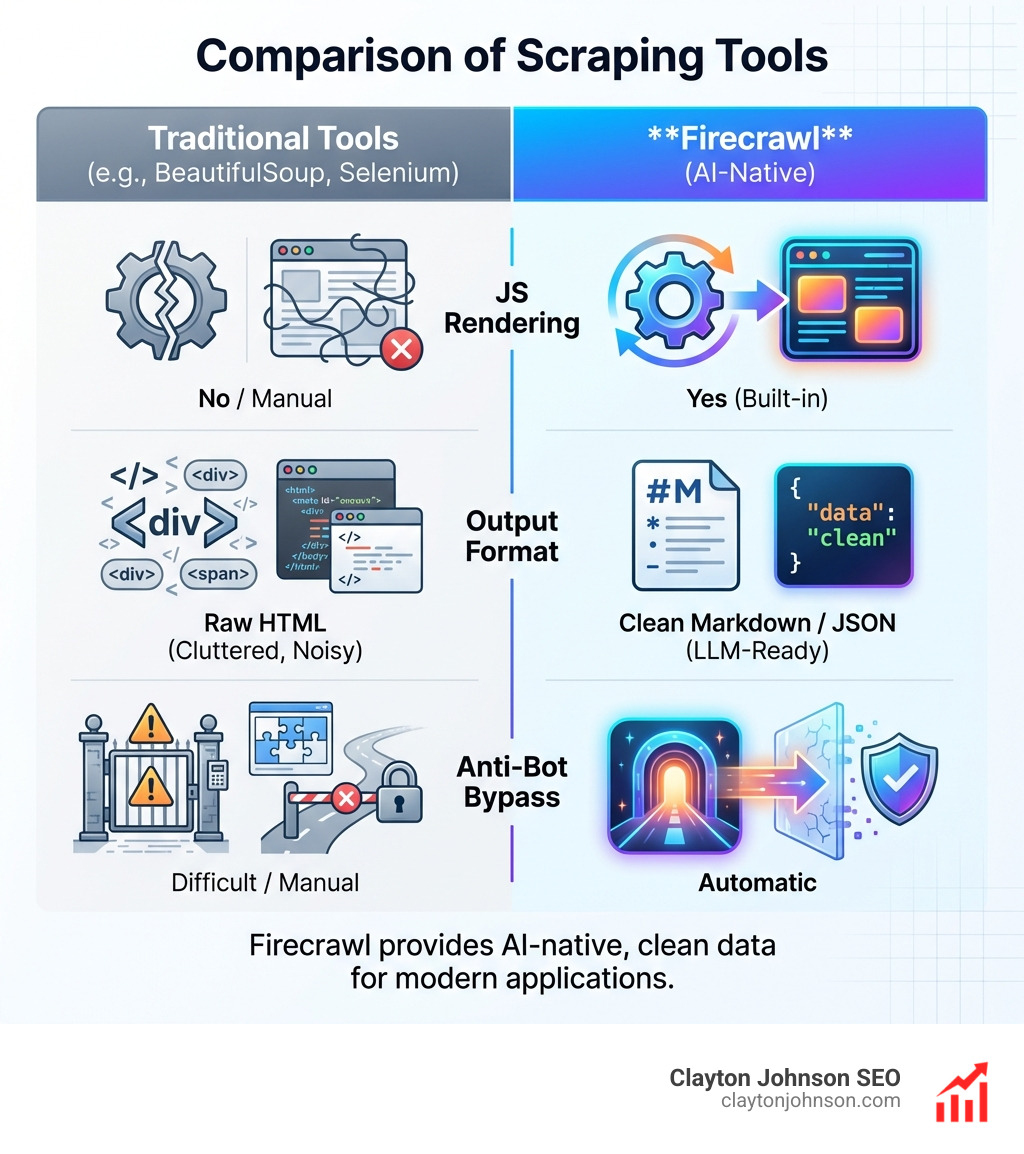

Firecrawl vs. Traditional Scraping Tools

Why use Firecrawl when tools like BeautifulSoup or Selenium have been around for decades? The difference lies in the “AI-native” approach.

| Feature | BeautifulSoup | Selenium / Puppeteer | Firecrawl |
|---|---|---|---|
| JS Rendering | No | Yes | Yes (Built-in) |
| Output Format | Raw HTML | Raw HTML | Clean Markdown / JSON |
| Anti-Bot Bypass | Manual | Difficult | Automatic |
| Scalability | Low (Single-threaded) | High (Resource heavy) | Very High (Cloud API) |
| AI Integration | None | None | Native (LangChain/LlamaIndex) |

Traditional tools are “blind.” They follow instructions but don’t understand context. Firecrawl is built to understand what an AI needs, offering a developer experience that prioritizes speed and data purity over manual DOM manipulation.

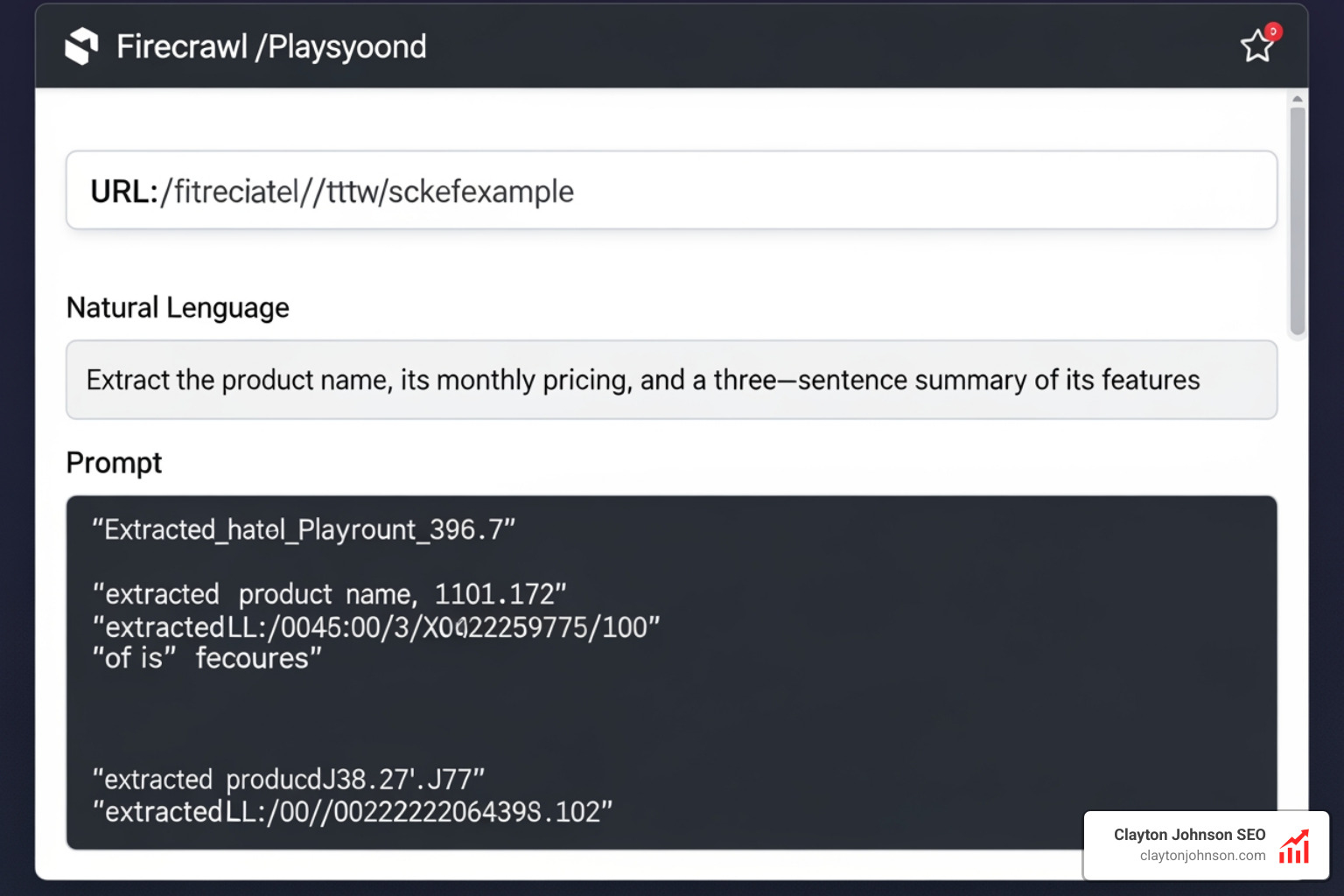

Core Features and the Power of the /extract Endpoint

The crown jewel of the Firecrawl ecosystem is the /extract endpoint. Currently in beta, this feature allows you to turn any website into structured data using nothing but a natural language prompt.

Imagine you want to scrape a directory of software tools. Instead of writing complex CSS selectors for the title, price, and description, you simply tell Firecrawl: “Extract the product name, its monthly pricing, and a three-sentence summary of its features.”

You can even use the Firecrawl playground to test these prompts before committing them to your code. This “schema-less” extraction is a game-changer for building AI agents that need to browse the web and bring back specific facts without human intervention.
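As a rough sketch of what a prompt-driven extraction request could look like over plain HTTP, the snippet below builds a request without sending it. The endpoint path, the `urls` and `prompt` field names, and the placeholder key are assumptions for illustration; confirm them against the current API reference before relying on them.

```python
import json
import urllib.request

# Assumed endpoint path; check the current API reference.
EXTRACT_URL = "https://api.firecrawl.dev/v1/extract"


def build_extract_request(api_key, urls, prompt):
    """Build (but do not send) a POST request for a prompt-driven extraction."""
    body = {"urls": list(urls), "prompt": prompt}  # field names are assumptions
    return urllib.request.Request(
        EXTRACT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_extract_request(
    "fc-YOUR-KEY",  # placeholder, not a real key
    ["https://example.com/tools"],
    "Extract the product name, its monthly pricing, and a "
    "three-sentence summary of its features.",
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Passing the request to `urllib.request.urlopen` would send it. Notice that the prompt takes the place of hand-written CSS selectors.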

Scrape, Crawl, and Map: Navigating the Web at Scale

Firecrawl offers three primary modes of operation to suit different needs:

- Scrape: Targets a single URL and returns clean markdown or HTML.

- Crawl: Recursively follows links to scan entire websites. Unlike traditional crawlers, it doesn’t even need a sitemap. It discovers subpages automatically and can be limited by `max_depth` or specific URL patterns (e.g., `example.com/blog/*`).

- Map: Quickly discovers and returns a list of all URLs on a website, allowing you to “scout” a site before deciding what to scrape.
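To show how a depth limit and a URL pattern interact, here is a small offline simulation. The `SITE` link graph and the `crawl` function are hypothetical stand-ins, not Firecrawl code. In this sketch the pattern filters which URLs are returned, while the depth limit controls how far links are followed.

```python
from collections import deque
from fnmatch import fnmatchcase

# Hypothetical link graph standing in for a real site: page -> links found on it.
SITE = {
    "example.com/": ["example.com/blog/", "example.com/pricing"],
    "example.com/blog/": ["example.com/blog/post-1", "example.com/blog/post-2"],
    "example.com/blog/post-1": ["example.com/blog/post-1/comments"],
    "example.com/blog/post-2": [],
    "example.com/pricing": [],
    "example.com/blog/post-1/comments": [],
}


def crawl(start, max_depth, include_pattern="*"):
    """Breadth-first walk of SITE, following links at most max_depth hops."""
    seen = {start}
    queue = deque([(start, 0)])
    matched = []
    while queue:
        url, depth = queue.popleft()
        if fnmatchcase(url, include_pattern):
            matched.append(url)
        if depth == max_depth:
            continue  # do not follow links beyond the depth limit
        for link in SITE.get(url, []):
            if link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return matched


# "Crawl" the blog two hops deep, then "map" everything reachable.
print(crawl("example.com/", max_depth=2, include_pattern="example.com/blog/*"))
print(crawl("example.com/", max_depth=3))
```

With a depth of 2, the comments page three hops from the homepage is never visited. Raising the limit and dropping the pattern behaves like a map of the whole toy site.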

Efficiently navigating the web is one of the Digital Marketing Pillars we focus on when building competitive intelligence systems. Being able to map a competitor’s entire content library in seconds provides an immediate strategic advantage.

Advanced Capabilities: Actions and Batching in Firecrawl

For more complex interactions, Firecrawl supports Actions. This allows you to programmatically click buttons, scroll through infinite feeds, or wait for specific elements to appear before the data is captured.
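An action sequence is essentially an ordered list of steps sent along with the scrape request. The sketch below shows one plausible shape for such a request body. The action names and fields (`type`, `selector`, `milliseconds`, `direction`) are assumptions for illustration, and `build_scrape_body` is a hypothetical helper rather than part of any SDK.

```python
import json

# Action names accepted by this sketch; treat them as assumptions.
ALLOWED_ACTIONS = {"wait", "click", "scroll", "screenshot"}


def build_scrape_body(url, actions):
    """Return a JSON-serializable scrape body whose actions run in list order."""
    for action in actions:
        if action.get("type") not in ALLOWED_ACTIONS:
            raise ValueError(f"unsupported action: {action!r}")
    return {"url": url, "formats": ["markdown"], "actions": list(actions)}


body = build_scrape_body(
    "https://example.com/feed",
    [
        {"type": "click", "selector": "#load-more"},  # hypothetical selector
        {"type": "wait", "milliseconds": 1500},
        {"type": "scroll", "direction": "down"},
    ],
)
print(json.dumps(body, indent=2))
```

Order matters here: clicking first, then waiting, then scrolling gives the page time to load new items before the content is captured.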

The introduction of Parallel Agents and the FIRE-1 agent has further pushed the boundaries. You can now run thousands of queries simultaneously, effectively treating the entire web as a queryable database. If you’re curious about the underlying tech, you can always check the Firecrawl GitHub repository to see how the open-source community is contributing to these advancements.

Getting Started: Setup and Integration with AI Frameworks

Starting with Firecrawl is straightforward. Once you sign up and get your API key, you can install the SDK for your preferred language. For Python users, it’s as simple as pip install firecrawl-py.
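If you would rather see what happens underneath the SDK, a first scrape can be sketched with nothing but the standard library. The endpoint path, the `FIRECRAWL_API_KEY` variable name, and the response shape below are assumptions; `scrape_markdown` is a hypothetical helper, and SDK method names may differ between versions.

```python
import json
import os
import urllib.request

SCRAPE_URL = "https://api.firecrawl.dev/v1/scrape"  # assumed endpoint path


def scrape_markdown(url, api_key=None, send=urllib.request.urlopen):
    """POST a scrape request and return the page's markdown.

    `send` is injectable so the function can be exercised without network access.
    """
    api_key = api_key or os.environ.get("FIRECRAWL_API_KEY")
    if not api_key:
        raise RuntimeError("set FIRECRAWL_API_KEY or pass api_key")
    request = urllib.request.Request(
        SCRAPE_URL,
        data=json.dumps({"url": url, "formats": ["markdown"]}).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with send(request) as response:
        payload = json.load(response)
    # Assumed response shape: {"success": bool, "data": {"markdown": str}}
    if not payload.get("success"):
        raise RuntimeError(f"scrape failed: {payload}")
    return payload["data"]["markdown"]
```

With a key set in the environment, `scrape_markdown("https://example.com")` would return the page as a markdown string, ready to pass to an LLM or a chunker.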

When we build SEO Content Marketing systems for our clients, we often use these SDKs to automate the research phase, ensuring our content is backed by the most recent data available on the web.

Connecting Firecrawl to LangChain and LlamaIndex

Firecrawl is designed to fit perfectly into the modern AI stack. It features native integrations with:

- LangChain: Use the `FireCrawlLoader` to ingest web pages directly into your document chains.

- LlamaIndex: Use the `FireCrawlWebReader` to build sophisticated RAG pipelines.

- CrewAI: Empower your autonomous agents with the ability to search and scrape the web in real-time.

By providing clean markdown, Firecrawl ensures that the embeddings generated for your vector databases are high-quality, which is the secret sauce for reducing hallucinations in production environments. You can find detailed implementation guides in the Python SDK documentation.
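One reason clean markdown helps embeddings is that headings give you natural chunk boundaries. The following is a minimal sketch of heading-based chunking, assuming ATX-style `#` headings. `chunk_markdown` is a hypothetical helper, not part of Firecrawl or any framework named above.

```python
import re

# Matches ATX headings such as "# Title" or "### Subsection".
HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


def chunk_markdown(markdown: str):
    """Split markdown into chunks, one per heading, keeping the heading text."""
    chunks = []
    title, lines = "", []

    def flush():
        # Emit the current chunk only if it has a heading or non-blank text.
        if title or any(line.strip() for line in lines):
            chunks.append({"heading": title, "text": "\n".join(lines).strip()})

    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            title, lines = match.group(2).strip(), []
        else:
            lines.append(line)
    flush()
    return chunks


# Made-up page content for illustration.
page = "# Pricing\n\nFree tier available.\n\n## Limits\n\nRate limits vary by plan.\n"
for chunk in chunk_markdown(page):
    print(chunk)
```

Each chunk can then be embedded with its heading attached, so a retrieved passage carries its own context.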

Self-Hosting vs. Cloud API

One of the unique aspects of Firecrawl is its commitment to open source. The core engine is available under the AGPL-3.0 license, meaning you can self-host the backend using Docker.

- Cloud API: Best for teams who want to “set it and forget it.” It handles all the infrastructure, proxy rotation, and scaling.

- Self-Hosting: Ideal for developers who want full control over their data or need to bypass certain cost structures for massive, non-commercial projects.

While the cloud version offers the most “production-ready” features (like the new /extract v2 and advanced actions), the self-hosted version maintains high feature parity for core scraping and crawling tasks.

Real-World Use Cases and Enterprise Performance

We’ve seen Firecrawl used in everything from small hobbyist projects to enterprise-grade AI agents. One prominent example involves enterprise customers extracting over 6 million URLs monthly to keep their internal knowledge bases fresh. This level of scale is only possible when you offload the “undifferentiated heavy lifting” of web scraping to a specialized API.

Common enterprise use cases include:

- Competitive Intelligence: Monitoring competitor pricing and feature updates daily.

- Lead Enrichment: Scraping company websites to find mission statements, key personnel, and product offerings to populate a CRM.

- AI Phone Agents: Feeding live, scraped content into voice models for real-time customer support.

For more on how high-volume users manage this, you can read about how enterprise teams use Firecrawl to power their production agents.

Building Production-Ready RAG Pipelines

A RAG system is only as good as the data it retrieves. If your bot cites a copy of a page that was scraped three months ago and the page has changed since, the answer is stale. Firecrawl supports data freshness through its high-speed async crawler and “last known good” feature, which allows you to retrieve the last successful scrape even if a site is temporarily down.
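The “last known good” idea is easy to picture as a small fallback cache. The sketch below illustrates the pattern only and is not Firecrawl’s implementation; `LastKnownGoodCache` and `flaky_fetch` are hypothetical names.

```python
import time


class LastKnownGoodCache:
    """Wraps a fetch function and remembers the last successful result per URL."""

    def __init__(self, fetch):
        self._fetch = fetch
        self._store = {}  # url -> (content, fetched_at timestamp)

    def get(self, url):
        """Return (content, is_fresh); fall back to the stored copy on failure."""
        try:
            content = self._fetch(url)
        except Exception:
            if url in self._store:
                return self._store[url][0], False
            raise  # nothing cached yet, so surface the original error
        self._store[url] = (content, time.time())
        return content, True


# Simulated site that answers once and then goes down.
calls = {"n": 0}


def flaky_fetch(url):
    calls["n"] += 1
    if calls["n"] > 1:
        raise ConnectionError("site is down")
    return "# Status\nAll systems operational."


cache = LastKnownGoodCache(flaky_fetch)
print(cache.get("https://example.com/status"))  # fresh copy
print(cache.get("https://example.com/status"))  # stored copy, flagged as not fresh
```

Returning the freshness flag alongside the content lets a RAG pipeline decide whether to cite the stored copy or warn that it may be outdated.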

This level of reliability is essential for maintaining high Google Rankings when using AI-assisted content workflows. If your AI is generating content based on broken or outdated web data, your search visibility will suffer.

Frequently Asked Questions about Firecrawl

How does the Firecrawl credit system work?

Firecrawl uses a credit-based system that scales with your needs. The plans range from a Free tier (perfect for testing) to Hobby, Standard, and Growth tiers.

- Spark 1 Mini: A newer, more efficient model that is 60% cheaper for everyday extraction tasks.

- Credit Packs: If you hit your limit mid-month, you can buy top-up packs or enable Auto-Recharge.

- Rate Limits: Higher-tier plans raise rate limits significantly to handle massive batch jobs.

For the most current details, check the Firecrawl pricing page.

Does Firecrawl handle JavaScript-heavy sites?

Yes, absolutely. Firecrawl uses headless browsers to execute JavaScript, meaning it can “see” the page exactly as a human user would. It handles React, Angular, and other dynamic frameworks with ease. You can even use “Wait” actions to ensure a specific element has loaded before the scrape begins, or take a screenshot to verify the layout.

What integrations does Firecrawl support?

Firecrawl has one of the most robust integration ecosystems in the AI space. It supports:

- Low-code: Zapier, Pipedream, Make, Dify, and Langflow.

- IDE/AI Tools: Claude Code, Cursor, Windsurf, and Gemini CLI.

- Frameworks: LangChain (Python/JS), LlamaIndex, CrewAI, and Composio.

Conclusion

Building in the age of AI requires a new set of tools. You can no longer rely on fragile, manual scraping methods if you want to build agents that are truly autonomous and reliable. Firecrawl has redefined web data extraction by making it “AI-ready” out of the box.

At Clayton Johnson SEO, we specialize in building the growth strategies and AI-assisted workflows that help founders and marketing leaders win. Whether you’re looking to diagnose a growth problem or execute a complex SEO strategy, we’re here to help you navigate the future of digital marketing.

Ready to turn the web into your competitive advantage? Work with me to start building your AI-powered growth engine today.