What Is AI — and Why It Changes Everything

AI — short for artificial intelligence — is the capability of computers to perform tasks that normally require human intelligence, like reasoning, learning, and understanding language.

Here’s a quick snapshot of what AI actually is:

- What it is: Technology that lets machines learn from data, recognize patterns, and make decisions

- Main types: Narrow AI (task-specific), Generative AI (creates content), AGI (human-level reasoning — not yet real)

- How it works: Algorithms analyze data → find patterns → make predictions or generate outputs

- Where you see it: Search engines, fraud detection, medical imaging, virtual assistants, recommendation feeds

- Why it matters: AI is reshaping every industry — from healthcare to finance to how you find information online

Once a concept from science fiction, AI now sits at the center of how businesses compete, how products get built, and how people find answers. It is not one technology. It is an entire ecosystem of methods — machine learning, deep learning, neural networks, and more — all working together to give machines the ability to think, adapt, and act.

And it is moving fast. From IBM’s Deep Blue defeating chess champion Garry Kasparov in 1997 to ChatGPT rewriting the rules of search and content in recent years, AI has gone from a research curiosity to the most consequential technology of our time.

This guide breaks it all down — no PhD required.

I’m Clayton Johnson, an SEO strategist and growth operator who has spent years building AI-augmented marketing systems and helping founders understand how to use AI not just tactically, but as a structural advantage. If you want to go beyond the buzzwords and actually understand how this technology works — and what it means for your business — you’re in the right place.

Defining the Field: From Logic to Neural Networks

To truly understand AI, we have to look past the robots and the glowing blue brains in movies. At its core, artificial intelligence (AI) is a set of technologies that empowers computers to learn, reason, and perform advanced tasks. It is an interdisciplinary field that pulls from computer science, data analytics, linguistics, neuroscience, and even philosophy.

In the early days, researchers followed a path called “Symbolic AI.” This involved creating massive databases of facts and rules. If the computer encountered “Condition A,” it would follow “Rule B.” It was logical, but it was also rigid. It lacked the “common sense” that humans use to navigate the world.

Today, the leading approach has shifted toward data-driven systems. Instead of telling a computer exactly what a “cat” looks like using thousands of lines of code, we show it a million pictures of cats and let the computer identify the patterns itself. This mimics the human brain’s ability to learn from experience.
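
To make the contrast concrete, here is a minimal sketch in Python. The data, the feature score, and the function names are all hypothetical and invented for illustration; real systems learn from far richer inputs than a single number. The first function hard-codes a rule the way a symbolic system would, and the second picks its own cutoff from labeled examples.

```python
# Hypothetical toy data: (feature_score, is_cat) pairs invented for illustration.
EXAMPLES = [(1.0, False), (2.0, False), (3.0, False),
            (6.0, True), (7.0, True), (8.0, True)]


def symbolic_rule(feature_score: float) -> bool:
    """Symbolic style: a human writes the rule and chooses the cutoff by hand."""
    return feature_score > 5.0


def learn_threshold(examples):
    """Data-driven style: choose the cutoff that misclassifies the fewest examples."""
    values = sorted(x for x, _ in examples)
    best_threshold, best_errors = values[0], len(examples) + 1
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2  # midpoint between neighbouring values
        errors = sum((x > threshold) != label for x, label in examples)
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


learned = learn_threshold(EXAMPLES)
print("hand-written cutoff: 5.0, learned cutoff:", learned)
```

If the examples change, the learned cutoff moves with them, while the hand-written rule stays fixed until someone edits the code.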

Even government agencies have had to get specific about what this means. According to NASA AI standards, AI refers to systems that can perform complex tasks normally done by humans—reasoning, decision-making, and creating—often operating under varying circumstances without significant human oversight.

The Evolution of AI Milestones

The history of AI is a rollercoaster of “booms” and “winters.” We have seen periods of massive excitement followed by years where funding dried up because the technology couldn’t live up to the hype.

- The Turing Test: Proposed by Alan Turing, this was the first real benchmark for machine intelligence. It asked: Can a machine mimic a human so well that a real person can’t tell the difference?

- Deep Blue vs. Kasparov: A major turning point occurred when IBM’s Deep Blue defeated world chess champion Garry Kasparov. It proved that machines could out-calculate the best human minds in structured environments.

- AlphaGo: In 2016, Google DeepMind’s AlphaGo won four out of five games against Go champion Lee Sedol. This was a massive leap because Go has vastly more possible positions than chess, so brute-force calculation alone is not enough and strong play requires what many call “intuition.”

- Computer Vision: We’ve moved from basic object recognition to systems that can spot breast cancer in medical images with higher accuracy than some specialists.

The history of symbolic AI shows us that we have always wanted to build “electronic brains.” The difference now is that we finally have the data and the hardware to make it happen.

Subfields of Modern Intelligence

We often use “AI” as a catch-all term, but the field is actually a collection of specialized subfields. Think of AI as the “Science of Biology,” while the subfields are like “Genetics” or “Botany.”

- Machine Learning (ML): This is the most widely used subfield today. It involves getting a computer to analyze data to identify patterns that can be used to make predictions.

- Deep Learning: A subset of ML that uses multi-layered neural networks. It is the engine behind most cutting-edge research, including self-driving cars and voice assistants.

- Natural Language Processing (NLP): This is how machines understand, interpret, and generate human language. If you’ve ever talked to Siri or used a translation app, you’ve used NLP.

- Robotics: This combines AI with hardware to create machines that can move and interact with the physical world, like the robots making coffee in some Minneapolis cafes or autonomous delivery drones.

How AI Works: The Mechanics of Machine Learning

If you open the hood of an AI system, you won’t find a tiny person thinking. You’ll find math—lots and lots of math. Most modern AI operates through machine learning, where the system “learns” by processing data.

The process starts with an algorithm—a sequence of instructions written by humans. This algorithm tells the computer how to analyze data to find statistical patterns. The output of this process is a “model.” When you feed new data into this model, it generates a prediction.
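
The algorithm, model, and prediction steps can be sketched in a few lines of Python. This is an illustrative example under stated assumptions, not how any particular product works: the training numbers are made up, and `fit_line` and `predict` are hypothetical helper names. The algorithm here is ordinary least squares, one of the simplest ways to find a statistical pattern.

```python
def fit_line(xs, ys):
    """The 'algorithm': ordinary least squares for a single input variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept  # the 'model' is just these two learned numbers


def predict(model, x):
    """Feed new data into the model to get a prediction."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data that happens to lie on the line y = 2x + 1.
ad_spend = [1.0, 2.0, 3.0, 4.0]
signups = [3.0, 5.0, 7.0, 9.0]

model = fit_line(ad_spend, signups)
prediction = predict(model, 5.0)
print("model:", model, "prediction for 5.0:", prediction)
```

The humans wrote `fit_line`, but nobody typed in the slope or the intercept. Those came from the data, which is the sense in which the system "learns."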

Deep learning often focuses on “unstructured” data, such as text, images, and sound. While traditional machine learning usually needs a human to decide which features of the data matter, deep learning can often figure out the features on its own through multiple layers of processing.

Training Methodologies

How does the machine actually learn? We generally use three main methods:

- Supervised Learning: This is like a student with a teacher. We give the AI labeled data (e.g., “This is a picture of a cat,” “This is a picture of a dog”). The system learns the patterns associated with each label.

- Unsupervised Learning: Here, we give the AI data with no labels and tell it to “find the patterns.” This is great for discovering hidden structures in data, like grouping customers by similar buying habits.

- Reinforcement Learning: This is learning through trial and error. The AI gets a “reward” for a correct action and a “penalty” for a wrong one. This is a core part of how AlphaGo learned to master Go: after studying human games, it improved by playing against itself.
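
As one illustration of the unsupervised case, the customer-grouping idea can be sketched with k-means clustering, a common technique chosen here as an assumption since the article does not name one. The spending figures, the starting centers, and the function name `k_means_1d` are hypothetical.

```python
def k_means_1d(values, centers, iterations=10):
    """Group unlabeled numbers around the nearest of the given starting centers."""
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        # Assignment step: each value joins the cluster of its nearest center.
        clusters = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Update step: move each center to the mean of its cluster
        # (an empty cluster keeps its previous center).
        centers = [sum(c) / len(c) if c else centers[i]
                   for i, c in enumerate(clusters)]
    return centers, clusters


# Hypothetical monthly spend per customer; note there are no labels anywhere.
monthly_spend = [12, 15, 11, 14, 95, 102, 99, 110]
centers, clusters = k_means_1d(monthly_spend, centers=[0.0, 50.0])
print("centers:", centers)
print("clusters:", clusters)
```

Nobody told the code which customers are "low spenders" or "high spenders." The two groups emerge from the numbers alone, which is the defining trait of unsupervised learning.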

For those looking to build their own systems, machine learning platforms provide the infrastructure to run these complex calculations without having to build the hardware from scratch.

The Role of Neural Networks

Neural networks are the “brains” of modern AI. They are collections of algorithms loosely modeled on the human brain. They consist of “artificial neurons” that are connected in layers.

When a neural network learns, it adjusts the “weights,” or the strength of the connections between these neurons. The method used to work out those adjustments is called “backpropagation.” If the network makes a mistake, it sends an error signal back through the layers to measure how much each connection contributed, then nudges the weights so the same mistake becomes less likely.
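
The weight-adjustment loop can be shown with the smallest possible network: one input, one hidden neuron, and one output. This is a teaching sketch, not a realistic network. The starting weights, learning rate, and target value are arbitrary assumptions, and `train_tiny_network` is a hypothetical name.

```python
import math


def train_tiny_network(x, target, w1, w2, learning_rate=0.5, steps=200):
    """Train output = w2 * tanh(w1 * x) to match target using backpropagation."""
    losses = []
    for _ in range(steps):
        # Forward pass: compute the network's current answer.
        hidden = math.tanh(w1 * x)
        output = w2 * hidden
        error = output - target
        losses.append(0.5 * error ** 2)

        # Backward pass: apply the chain rule from the output back to each weight.
        grad_w2 = error * hidden
        grad_hidden = error * w2
        grad_w1 = grad_hidden * (1 - hidden ** 2) * x  # derivative of tanh

        # Gradient descent: nudge each weight against its gradient.
        w1 -= learning_rate * grad_w1
        w2 -= learning_rate * grad_w2
    return w1, w2, losses


w1, w2, losses = train_tiny_network(x=1.0, target=0.5, w1=0.3, w2=0.2)
print("first loss:", losses[0], "last loss:", losses[-1])
```

Real networks repeat the same two passes across millions or billions of weights, usually with a library that computes the gradients automatically.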

Different neural network architectures are suited for different tasks. For example, “Convolutional Neural Networks” are inspired by the visual cortex and are amazing at image recognition, while “Recurrent Neural Networks” are better for sequential data like speech or text.

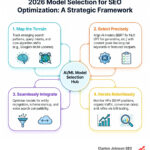

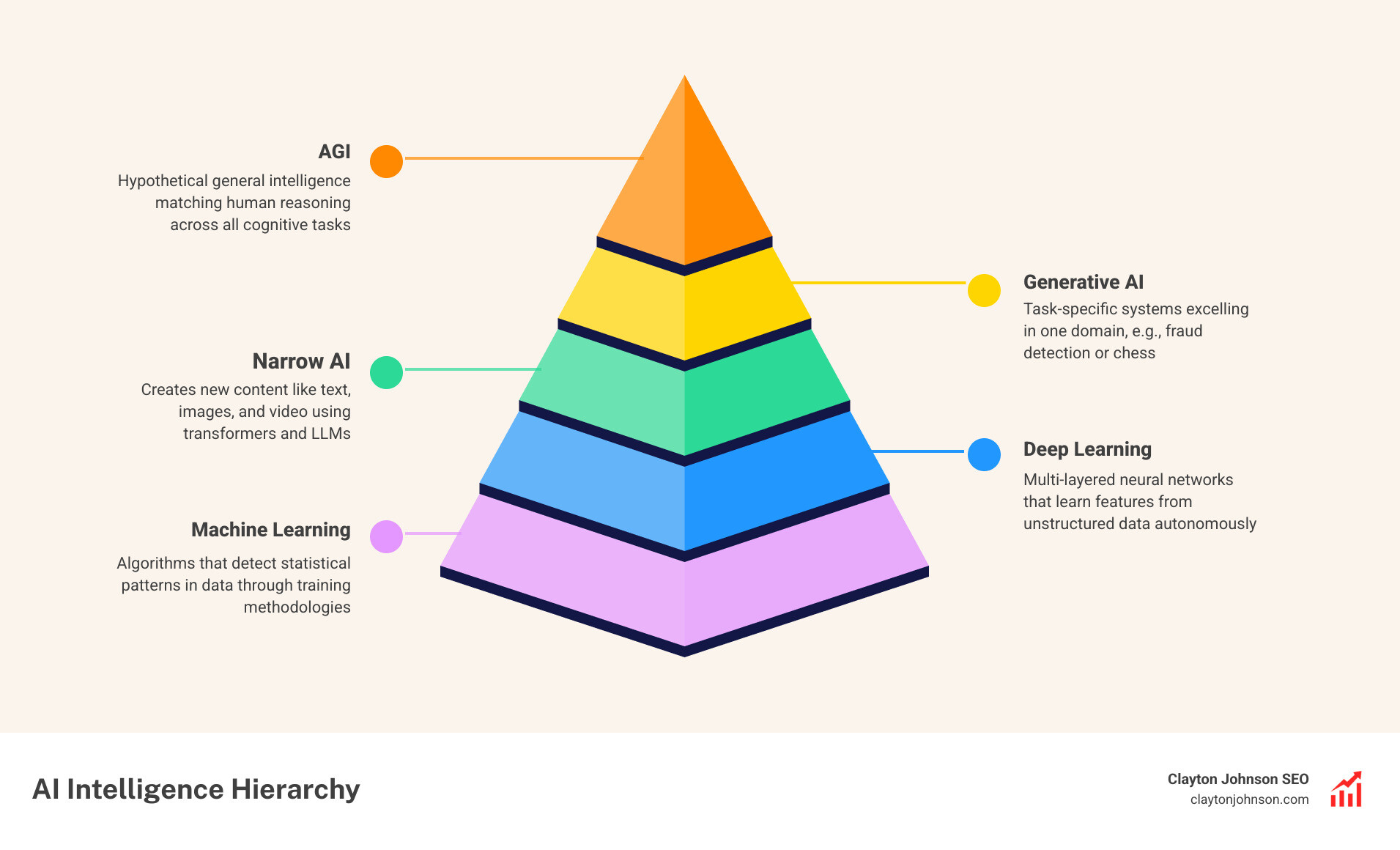

Narrow AI vs. Generative AI vs. AGI

Not all AI is created equal. To understand where we are—and where we are going—we need to distinguish between the three main categories of machine intelligence.

Most AI we use today is Narrow AI. It is designed to do one thing exceptionally well—like predicting the weather, suggesting a movie, or detecting credit card fraud. It might be “smarter” than a human at that specific task, but it can’t do anything else. A chess-playing AI can’t write a poem or drive a car.

Generative AI is a newer, more exciting flavor. Instead of just analyzing existing data, it uses deep learning to create new content. This is what powers tools like ChatGPT, Claude, and Gemini. These systems are built on “Large Language Models” (LLMs) and use a specific architecture called a “transformer.”

How Generative AI Transforms Content

Generative AI has changed the game because it makes “creation” accessible to everyone.

- Text: LLMs learn by next-word prediction. They guess which word should come next in a sentence based on the patterns they found in billions of pages of internet text.

- Images: Tools like Stable Diffusion or Midjourney use “diffusion models” to synthesize images from text prompts.

- Video: New models are now capable of generating high-quality video clips from a simple description.
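
The next-word prediction idea from the text bullet above can be sketched with a bigram counter. This is a deliberately tiny stand-in and an assumption on our part: real LLMs use transformer networks rather than word counts, and the corpus and function names below are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of the word, or None if it was never seen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical miniature "training corpus".
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram_model(corpus)

print(predict_next(model, "the"))  # "cat" follows "the" most often in this corpus
print(predict_next(model, "dog"))  # None, because "dog" never appears
```

The same principle, scaled up from one preceding word to thousands of preceding tokens and from counts to learned weights, is what lets an LLM continue a prompt.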

Models like these are often called “foundation models.” They aren’t just for one task; they can be adapted to do a thousand different things, from writing code to summarizing legal documents.

The Quest for General Intelligence

The “Holy Grail” of research is Artificial General Intelligence (AGI). This refers to a hypothetical system that could master any cognitive task a human can. It wouldn’t just follow patterns; it would reason, plan, and exercise “common sense.”

Some scientists believe we are on the doorstep of AGI, while others argue that today’s LLMs are just “stochastic parrots”—they are just repeating patterns they’ve seen without truly understanding them. If we do reach AGI, many predict an inflection point known as “The Singularity,” where technological progress accelerates beyond our ability to track it.

Real-World Applications of AI Across Industries

AI is no longer just for tech companies. It is being deployed in our neighborhoods, our hospitals, and our banks. In Minneapolis, we see AI being used to optimize traffic flow and help local businesses scale their marketing efforts.

In healthcare, the impact is literally life-saving. For example, AlphaFold 2 can approximate the 3D structure of a protein in hours rather than months. This is a massive breakthrough for drug discovery. AI is also being implemented in healthcare through diagnostic tools that can spot diseases in X-rays and MRIs with high precision.

Finance and Gaming

The world of finance was an early adopter of AI. Banks use machine learning to:

- Detect Fraud: Algorithms scan millions of transactions in real-time to spot anomalies.

- Algorithmic Trading: Machines execute trades at speeds impossible for humans, reacting to market news in milliseconds.

- Credit Scoring: AI in financial services allows for more nuanced risk assessment, though it also brings up questions about fairness and bias.
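
To give a feel for the fraud-detection bullet, here is a minimal anomaly check based on z-scores, meaning how many standard deviations a value sits from the average. It is a sketch under simplifying assumptions: the transaction amounts are invented, `flag_anomalies` is a hypothetical name, and real bank systems use many more signals than the amount alone.

```python
from statistics import mean, stdev


def flag_anomalies(amounts, threshold=2.0):
    """Return the amounts that lie more than `threshold` standard deviations from the mean."""
    mu = mean(amounts)
    sigma = stdev(amounts)
    if sigma == 0:
        return []  # every amount is identical, so nothing stands out
    return [a for a in amounts if abs(a - mu) / sigma > threshold]


# Hypothetical card transactions in dollars; one is far outside the usual range.
transactions = [42.0, 38.5, 45.0, 40.0, 39.5, 41.0, 43.5, 37.0, 44.0, 950.0]
suspicious = flag_anomalies(transactions)
print("flagged:", suspicious)
```

One design caveat: a large outlier inflates the standard deviation it is measured against, so with small samples a modest threshold such as 2.0 is needed. Production systems typically rely on more robust statistics or learned models.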

In gaming, AI has moved beyond simple “bots.” DeepMind’s AlphaStar reached grandmaster status in StarCraft II, a game that requires long-term planning and the ability to deal with “imperfect information” (you can’t see what your opponent is doing). This research helps us build AI that can navigate the messy, unpredictable real world.

Everyday Consumer Technology

You likely interact with AI dozens of times a day without realizing it.

- Search Engines: Google and Bing now use LLMs to provide AI-generated summaries (Google calls its version “AI Overviews”), answering your question so you don’t have to click through ten links.

- Virtual Assistants: Siri and Alexa rely on NLP to understand your voice and execute commands.

- Autonomous Vehicles: Self-driving cars use computer vision to “see” the road and machine learning, in some systems including reinforcement learning, to plan how to navigate safely.

- Recommendations: Netflix and Spotify use AI to analyze your habits and suggest your next favorite show or song.

The introduction of Copilot in search is a prime example of how generative AI is being woven directly into the tools we use to navigate the internet.

The Ethical Landscape and Future Trends

As with any transformational technology, AI brings significant risks and challenges. We can’t talk about the “Silicon Heaven” of AI without acknowledging the hurdles we need to clear.

One major concern is Algorithmic Bias. Because AI learns from human data, it can inherit human prejudices. For example, some facial recognition systems have struggled to accurately identify people with darker skin tones because the training data was skewed. There are also “Hallucinations”—situations where a chatbot confidently states a fact that is completely made up.

The ethics of AI and robotics is now a major field of study, focusing on how we can ensure these systems are transparent, fair, and accountable.

Hardware and Infrastructure

The “brains” of AI require a lot of “brawn” in the form of hardware. Graphics Processing Units (GPUs) have become the gold standard for training AI because they can perform thousands of calculations simultaneously.

We are also seeing a shift in how we think about computing power.

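- Moore’s Law: The observation that the number of transistors on a chip doubles roughly every two years (often quoted as every 18 months) held for decades, though the pace has slowed in recent years.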

- Huang’s Law: Named after Nvidia’s CEO, this suggests that GPU performance for AI is advancing even faster than Moore’s Law predicted.
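
A quick calculation shows why the doubling period matters so much. The sketch below assumes simple exponential growth, and the ten-year window and function name are arbitrary choices for illustration. It compares the two doubling periods most often quoted for Moore’s Law.

```python
def growth_factor(years, doubling_period_years):
    """How many times larger a quantity becomes if it doubles every fixed period."""
    return 2 ** (years / doubling_period_years)


# Ten years of growth under two commonly quoted doubling periods.
two_year_doubling = growth_factor(10, 2.0)       # 2**5, exactly 32x
eighteen_month_doubling = growth_factor(10, 1.5)  # roughly 100x

print("doubling every 2 years:   ", two_year_doubling)
print("doubling every 18 months: ", round(eighteen_month_doubling, 1))
```

A seemingly small change in the doubling period roughly triples the result over a decade, which is why claims that GPU performance is outpacing Moore’s Law have such large practical consequences.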

However, this comes with a cost. AI power demand is skyrocketing. By some estimates, data centers could consume up to 8% of US power by the end of the decade. This is leading tech giants to look for “miracle” energy solutions, including reopening nuclear power plants to feed the hunger of AI server farms.

Risks and Governance

Beyond energy and bias, there are broader societal risks:

- Job Displacement: Many “white-collar” tasks like data entry, basic coding, and even some writing are being automated.

- Deepfakes: Generative AI can create convincing videos of people saying things they never said, which poses a massive threat to truth and democracy.

- Regulation: Governments are racing to catch up. The EU AI Act, a binding regional law, and the Bletchley Declaration, a non-binding international statement, are early attempts to build a framework for safe AI development.

The existential risks of AI are debated by experts. Some fear a “Terminator” scenario, while others are more worried about the “Black Box” nature of these systems, where we don’t fully understand why an AI made a specific decision.

Frequently Asked Questions about Artificial Intelligence

What is the difference between AI and Machine Learning?

AI is the broad goal of creating machines that act intelligently. Machine Learning is a method used to achieve that goal by allowing computers to learn from data patterns without being explicitly programmed for every scenario.

Can AI truly think like a human?

Not yet. Current AI is excellent at statistical guessing and pattern matching. While it can produce results that look like human reasoning, it lacks the consciousness, biological intuition, and general flexibility of the human brain.

Is Generative AI the same as AGI?

No. Generative AI (like ChatGPT) is a very advanced tool that can create content across many domains, but it is still limited to the patterns it was trained on. AGI would be a system that can learn and apply its intelligence to any task, even ones it has never seen before.

Conclusion

The era of AI is not a distant future—it is our current reality. For founders and marketing leaders, the challenge isn’t just “using AI.” It’s about building a structured growth architecture that leverages these tools for long-term success.

At Clayton Johnson SEO, we believe that clarity leads to structure, and structure leads to leverage. We are building Demandflow.ai to provide exactly that—a growth operating system that combines actionable strategic frameworks with AI-augmented marketing workflows.

We don’t just want to help you create content; we want to help you build structured growth infrastructure. Whether you are in Minneapolis or building a global brand, the goal is the same: compounding growth through smart systems.

If you are ready to stop chasing tactics and start building an authority-building ecosystem, explore our Demandflow growth systems and see how we can help you turn AI from a buzzword into a competitive advantage.