Why Organizations Are Racing to Build AI Oversight Structures

Implementing AI governance frameworks has become one of the most urgent strategic imperatives for organizations in 2025. According to recent research, 60% of legal, compliance, and audit leaders now cite technology as their top risk concern—yet only 29% of organizations have comprehensive AI governance plans in place. The gap between AI adoption and responsible oversight is creating real exposure: reputational damage, regulatory penalties, algorithmic discrimination, and erosion of stakeholder trust.

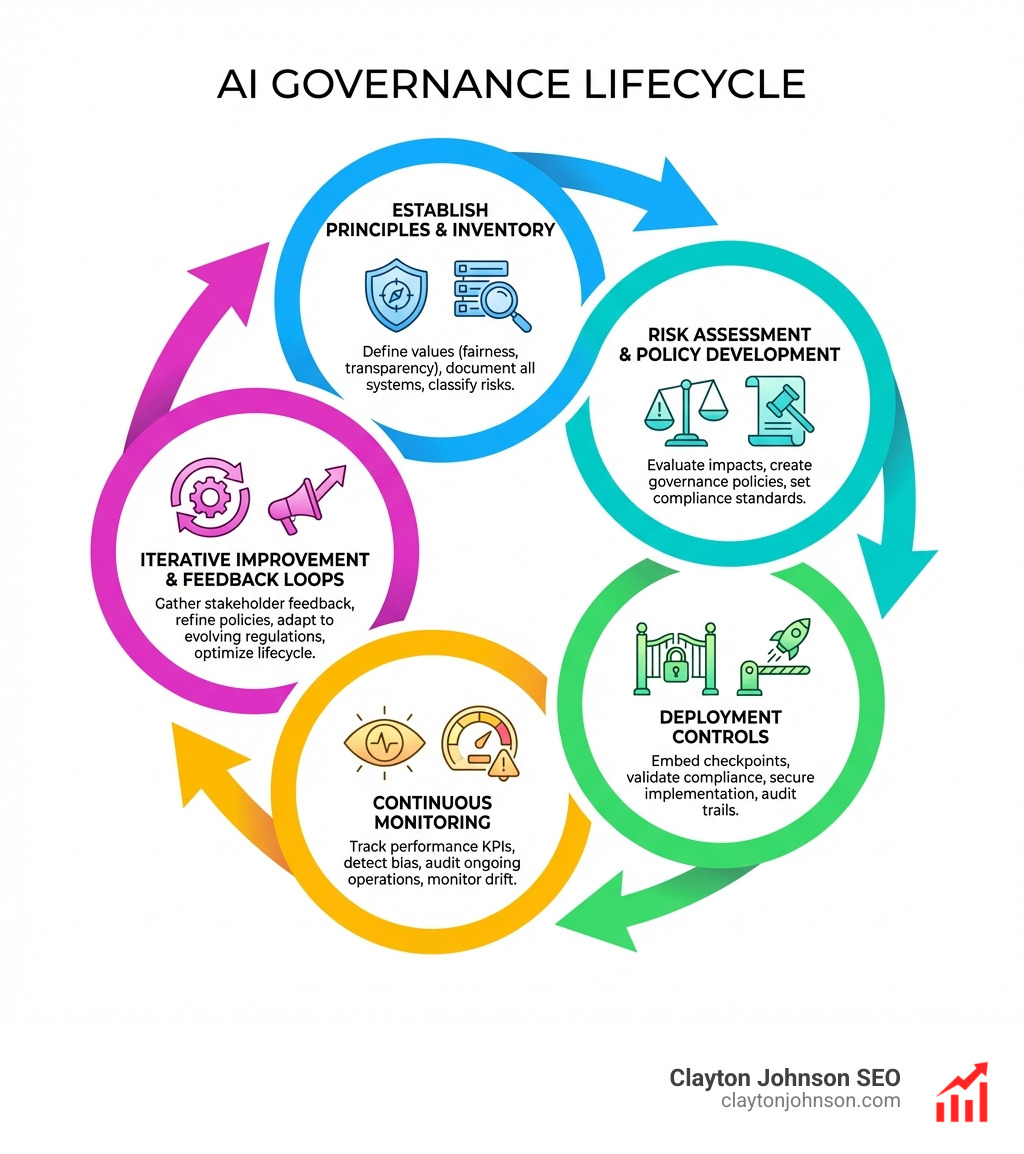

Quick Answer: Core Steps for Implementing AI Governance Frameworks

- Establish foundational principles — Define organizational values around fairness, transparency, accountability, privacy, and security

- Create an AI inventory — Document all AI systems (including shadow AI) and classify by risk level

- Define clear ownership — Assign executive sponsors and build cross-functional governance committees

- Implement lifecycle controls — Embed checkpoints at design, testing, deployment, and monitoring phases

- Monitor and adapt continuously — Track KPIs, conduct audits, and update policies as technology and regulations evolve

The challenge isn’t just technical. Organizations face a “confusing hodgepodge” of regulatory frameworks—from the EU AI Act’s risk-based classifications to NIST’s voluntary guidance to emerging state-level laws. Without clear governance structures, companies inadvertently create “shadow risk functions” where AI decisions lack accountability, transparency suffers, and bias goes undetected until it causes harm.

But there’s a bigger issue: most organizations are treating AI governance as an afterthought rather than a strategic foundation. They’re deploying systems without adequate documentation, oversight, or testing—then scrambling to retrofit governance when incidents occur. This reactive approach is costly and dangerous.

The good news? Reaching high levels of responsible AI maturity takes two to three years when done right. Organizations that embed governance early—with CEO-level involvement and integration into existing corporate structures—realize 58% more business benefits than those that don’t. Strong governance doesn’t slow innovation. It enables it by building trust, reducing risk, and ensuring AI systems actually work as intended.

I’m Clayton Johnson, an SEO and growth strategist who helps organizations build structured systems for measurable outcomes. Through my work implementing AI governance frameworks across multiple companies, I’ve learned that the most effective approaches combine strategic clarity with practical, repeatable processes—exactly what this guide will help you build.

Why Implementing AI Governance Frameworks is Essential

In the current landscape, AI is the new epicenter of value creation. However, as we integrate these tools into our core operations, we must acknowledge that “shadow AI”—where employees use unsanctioned tools like ChatGPT for sensitive tasks—is a growing reality. Implementing AI governance frameworks isn’t just about checking a box for legal; it’s about protecting your brand’s most valuable asset: trust.

When we look at Using AI Competitive Insights to Outsmart Your Rivals, we see that the most successful companies aren’t just the fastest; they’re the ones with the most reliable data. Governance ensures that your AI outputs are accurate and defensible. Furthermore, as we’ve discussed in Why Your Brand Needs an AI Growth Strategy Right Now, scaling without a safety net leads to “algorithmic monoculture,” where one small error in a model can lead to a massive, correlated failure across your entire enterprise.

Effective governance also provides:

- Liability Reduction: By documenting your decision-making process, you create a “paper trail” that is essential for regulatory defensibility.

- Ethical Alignment: Ensuring your AI reflects your company’s mission and values, rather than just chasing efficiency at any cost.

- Operational Efficiency: Standardizing how AI is procured and deployed reduces the “red tape” that often slows down innovation.

The 5 Key Principles of a Successful AI Framework

A robust framework is built on five core pillars. These aren’t just abstract concepts; they are the functional requirements for any system that interacts with your customers or your data.

1. Fairness and Bias Mitigation

AI models can inherit historical biases from their training data, leading to what we call algorithmic discrimination. This is particularly dangerous in high-stakes sectors like recruitment, lending, or healthcare.

A famous example is the ProPublica findings on COMPAS, an algorithm used to predict recidivism. The study found that the system was twice as likely to falsely flag Black defendants as high-risk compared to white defendants. To prevent this, we must:

- Use representative datasets that reflect the diversity of the real world.

- Conduct disparate impact analysis to see if the model’s outcomes differ significantly across protected groups.

- Adopt inclusive design practices that bring diverse perspectives into the development phase.

2. Transparency and Explainability

Many modern AI systems are “black boxes”—even the developers can’t always explain exactly why a specific output was generated. This is no longer acceptable under frameworks like the EU AI Act transparency requirements, which demand that high-risk systems be understandable to users.

To move from “black box” to “glass box,” we use tools like:

- SHAP and LIME: These are techniques that help explain individual predictions by showing which features had the most influence.

- Traceable Reasoning: For agentic AI, we should require the system to provide an “internal monologue” or action ledger in natural language.

- Stakeholder Communication: Clearly labeling AI-generated content so users know they are interacting with a machine.

3. Accountability and Human Oversight

In AI governance, we often say, “You need a throat to squeeze.” This means every AI system must have a clearly defined owner who is responsible for its outcomes.

The U.S. Blueprint for an AI Bill of Rights emphasizes that humans should always have the ability to opt-out or seek human intervention. We recommend using a RACI matrix to define:

- Responsible: The technical team building the model.

- Accountable: The business leader or executive sponsor.

- Consulted: Legal and ethics committees.

- Informed: The end-users and stakeholders.

4. Privacy and Data Protection

AI thrives on data, but this creates a natural tension with privacy. We must balance the need for large datasets with the principle of data minimization. Following Google’s AI Principles, we focus on:

- PII Protection: Using techniques like differential privacy to ensure individual identities cannot be reverse-engineered from the model.

- Anonymization: Scrubbing sensitive data before it ever hits the training pipeline.

- Encryption: Ensuring data is secure both at rest and in transit.

5. Robust Security and Resilience

AI systems introduce new attack vectors, such as prompt injection (tricking a chatbot into ignoring its safety rules) or model inversion (stealing the training data).

We look to the MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems) for a matrix of these threats. To build resilience, organizations should follow National Cyber Security Centre guidelines by implementing:

- Adversarial Testing: “Red-teaming” your models to find vulnerabilities before hackers do.

- Output Filtering: Using secondary AI systems to monitor and block unsafe or biased outputs in real-time.

Global Standards: Navigating the Regulatory Landscape

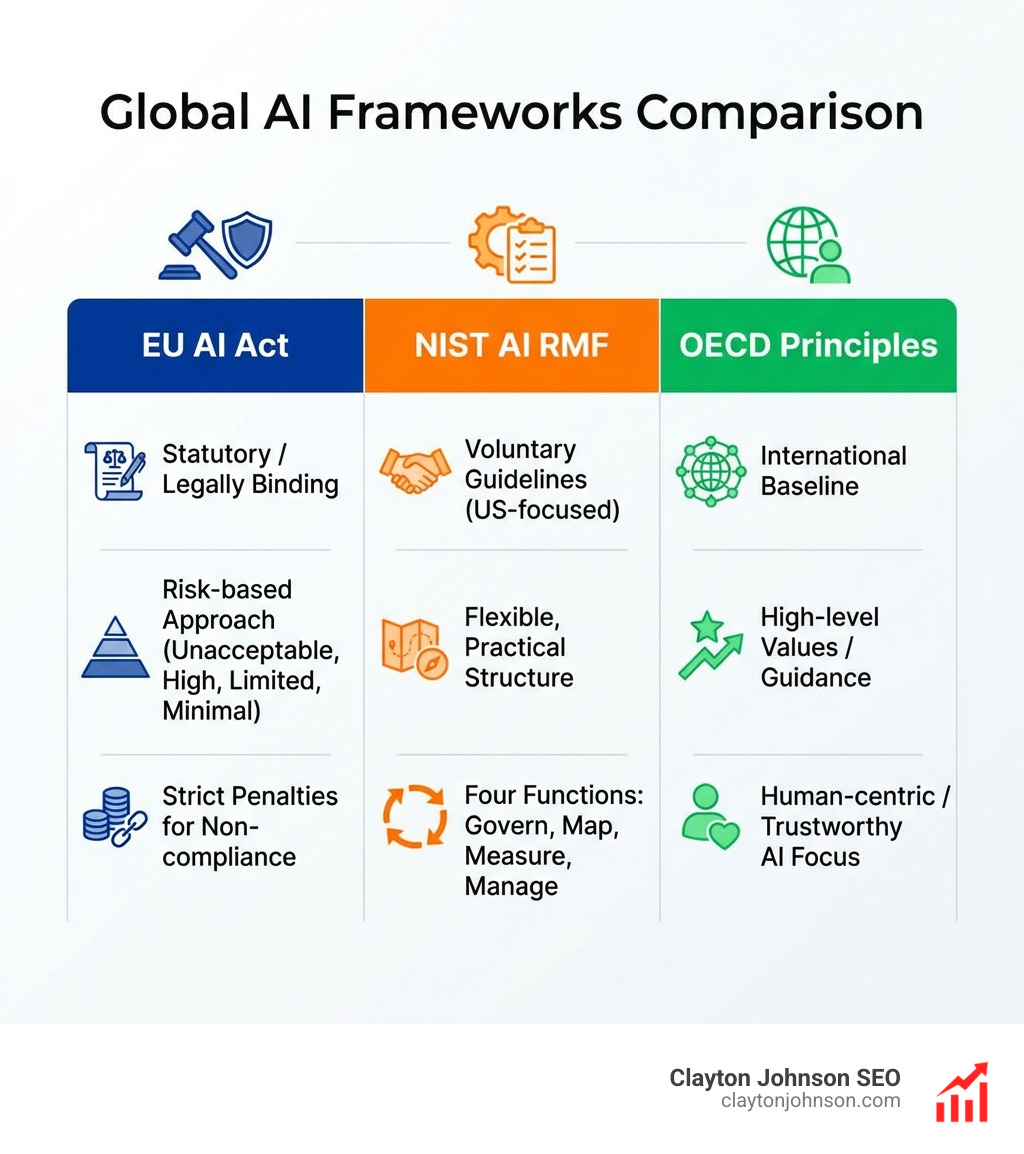

Navigating AI regulation can feel like a maze. While the US currently favors a pro-innovation approach with voluntary guidelines, the EU has moved toward strict, legally binding rules.

Key global standards include:

- The EU AI Act: The world’s first comprehensive AI law, which bans “unacceptable risk” systems (like social scoring) and imposes heavy requirements on “high-risk” systems in healthcare and education.

- NIST AI Risk Management Framework: A highly practical, voluntary framework from the US that focuses on four functions: Govern, Map, Measure, and Manage. You can find the full NIST AI Risk Management Framework document to help structure your internal processes.

- OECD AI Principles: These OECD AI Principles serve as an international baseline for trustworthy AI, focusing on human-centric values and transparency.

- ISO/IEC 42001: This is the international standard for an AI Management System (AIMS), providing a certifiable structure for organizations to prove their governance maturity.

A Practical Guide to Operationalizing Oversight

Knowing the principles is one thing; putting them into practice is another. The first step is creating a comprehensive AI inventory. You can’t govern what you don’t know exists. This inventory should include sanctioned tools, third-party APIs, and even the “shadow AI” being used by your marketing team.

As you build your team, 8 Essential AI Skills Every Marketer Needs includes a strong understanding of ethics and data privacy. You aren’t just looking for coders; you’re looking for “AI Champions” who can bridge the gap between technical capability and ethical responsibility.

Best Practices for Implementing AI Governance Frameworks

We recommend a phased implementation approach. Don’t try to do everything at once. Start with your most high-impact, high-risk use cases.

- Secure Executive Buy-in: Research shows that when the CEO is involved in Microsoft’s Responsible AI principles adoption, business benefits increase by 58%.

- Conduct Ethical Impact Assessments: Before a model is deployed, evaluate its potential impact on people and society.

- Continuous Monitoring: AI models “drift” over time as the world changes. Use automated auditing tools to track performance and bias in real-time. Our The Ultimate Guide to Digital Marketing AI provides more context on how these tools fit into a broader marketing stack.

Integrating Governance with Existing Policies

You don’t need to reinvent the wheel. The most effective AI governance “lives off the land” by integrating with your existing GRC (Governance, Risk, and Compliance) structures.

- Data Privacy: Align your AI data usage with your existing GDPR or CCPA policies.

- Cybersecurity: Treat AI models as critical infrastructure within your existing security protocols.

- Procurement: Use the Databricks Essential AI Governance Framework to set standards for third-party AI vendors. Ensure that any AI you “buy” meets the same ethical standards as the AI you “build.”

Overcoming Challenges in the Implementation Process

The biggest challenge in implementing AI governance frameworks is the sheer pace of the technology. By the time you draft a policy for LLMs, your team might already be moving toward “agentic AI”—systems that can take actions on their own.

Common Pitfalls When Implementing AI Governance Frameworks

- Governance as an Afterthought: Trying to “bolt-on” ethics after a model is already in production is expensive and often ineffective.

- Underestimating Data Engineering: If your data is “garbage,” your governance will be too. High-quality data is the foundation of fairness.

- Siloed Accountability: If governance is only “Legal’s problem,” the technical teams will find ways to bypass it. It must be a cross-functional effort.

- Documentation Gaps: Failing to maintain Best Practices in AI Documentation makes it impossible to audit your systems later.

Adapting to Evolving Technologies

As we move toward more autonomous systems, our governance must evolve. We need to consider the MIT research on GenAI environmental impact, as the massive energy and water requirements of AI become a core part of ESG (Environmental, Social, and Governance) reporting.

We also need to prepare for “model drift.” An AI that was fair and accurate in January might become biased by June as the underlying data patterns change. This requires automated retraining pipelines and constant feedback loops to ensure the system stays within its “guardrails.”

Frequently Asked Questions about AI Governance

What is the difference between ethical AI and responsible AI?

While the terms are often used interchangeably, there is a key distinction. Ethical AI is philosophical; it focuses on abstract principles, societal implications, and the “why” behind AI development. Responsible AI is tactical; it is the practical application of those principles through specific frameworks, accountability structures, and regulatory compliance. It’s about the “how.”

Who should lead the AI governance committee?

AI governance is a shared responsibility. We recommend a cross-functional committee led by a Chief AI Ethics Officer or a combination of the Chief Technology Officer (CTO), General Counsel (Legal), and the Chief Risk Officer (CRO). This ensures that technical feasibility, legal compliance, and business risk are all balanced.

How do you measure the success of an AI governance program?

Success isn’t just the absence of a disaster. We measure it through specific KPIs:

- Bias Findings: A reduction in the number of biased outcomes detected during audits.

- Audit Completeness: The percentage of AI systems that have full documentation and risk assessments.

- Incident Response Speed: How quickly the team can identify and mitigate a model failure.

- Compliance Rate: The number of AI projects that pass through the “gatekeeping” process without requiring major remediation.

Conclusion

At Clayton Johnson SEO, we believe that growth and responsibility go hand-in-hand. By implementing AI governance frameworks today, you aren’t just avoiding risk—you’re building the foundation for sustainable, scalable innovation. Whether you are using our Demandflow tools or seeking a practical growth strategy, our mission is to help you achieve measurable results through actionable frameworks.

Ready to secure your AI future and outsmart the competition? More info about SEO services is available to help you integrate these advanced workflows into your marketing and operations. Let’s build something responsible together.