Why Replicate’s Developer Tools Strategy Matters for Modern AI Development

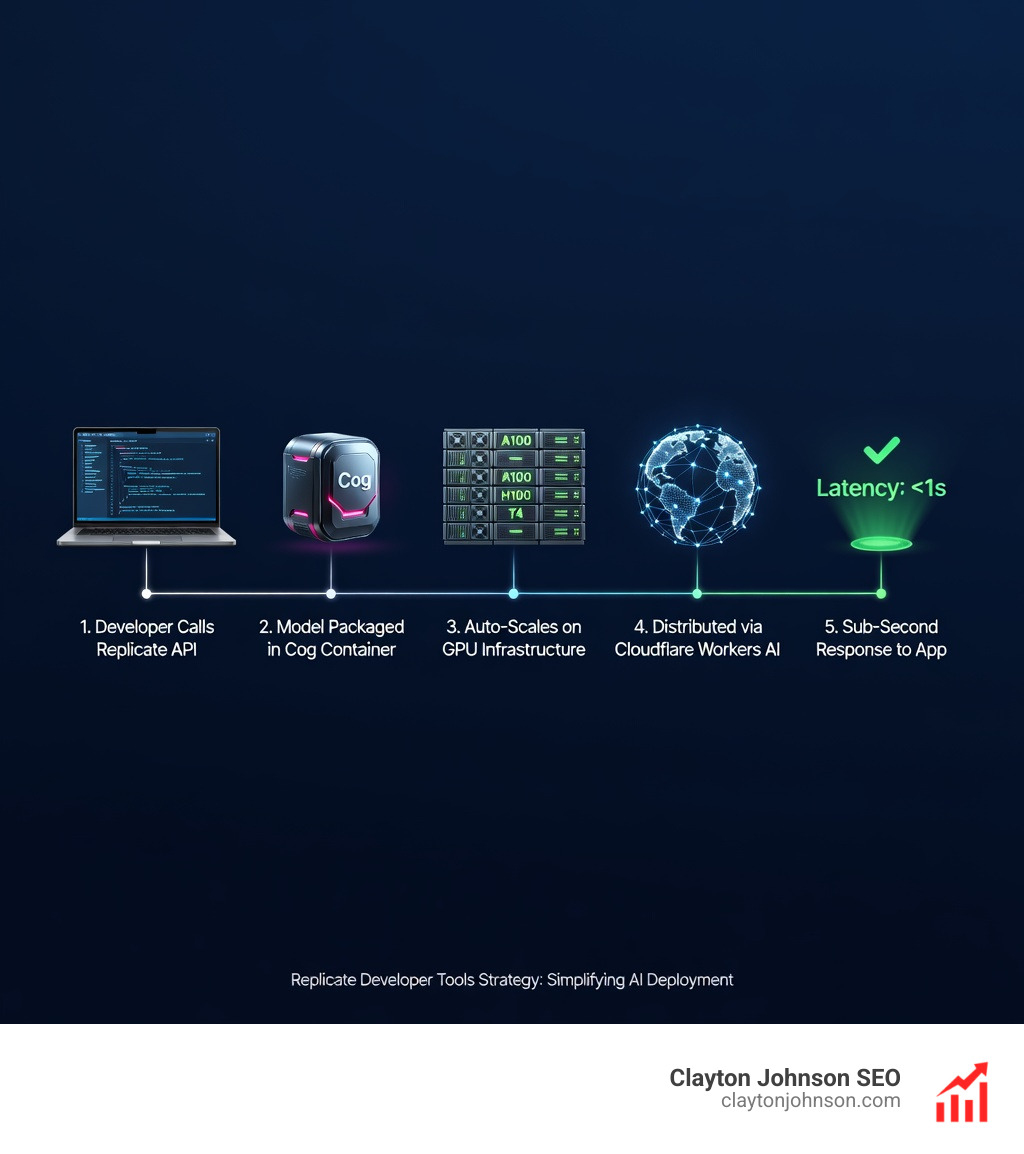

Replicate’s developer tools strategy centers on making AI model deployment as simple as running a line of code. At its core, this approach abstracts away GPU infrastructure, containerization complexity, and scaling challenges, letting developers focus on building products instead of managing backends.

Key elements of Replicate’s strategy:

- API-first architecture – Run 50,000+ models with simple HTTP calls

- Cog packaging system – Containerize any model into production-ready Docker images

- Automatic scaling – Scale from zero to hundreds of instances based on traffic

- Open-source foundation – Maintain SDKs for JavaScript, Python, Go, and Swift

- Model marketplace – Access community-contributed models with one-line deployment

- Cloudflare integration – Deploy to Cloudflare’s global edge network for low-latency inference

The strategy reflects a fundamental belief expressed by Replicate’s team: “AI shouldn’t be locked up inside academic papers and demos; it should be made real via platforms like Replicate.” That philosophy shows up in its technical decisions, from building Cog as an open-source tool to adopting Stainless for automated SDK generation.

Why this matters now: AI development has shifted from specialized data science teams to general software engineers. The barrier isn’t understanding neural networks—it’s operationalizing them. Replicate removes that friction by treating models like npm packages: discoverable, forkable, and deployable without infrastructure expertise.

The recent Cloudflare acquisition accelerates this vision. By integrating Replicate’s catalog into Workers AI, developers gain one-line access to any model on a globally distributed network. This isn’t just about faster inference—it’s about democratizing AI deployment at scale.

I’m Clayton Johnson, and I’ve spent years building scalable traffic systems and AI-augmented marketing workflows, where understanding platforms like Replicate and their developer tools strategy directly affects execution speed and competitive positioning. This guide breaks down how Replicate’s approach creates leverage for developers and what the Cloudflare integration means for the AI infrastructure landscape.


The Core Pillars of the Replicate Developer Tools Strategy

At the heart of Replicate’s success is a commitment to the developer experience (DX). We often see platforms that offer powerful AI capabilities but require a Ph.D. in infrastructure management to actually use them. Replicate flipped the script. Their core mission is to simplify AI model deployment so that any software engineer can treat a machine learning model like a standard library.

Replicate’s developer tools strategy is built on an API-first architecture. You don’t need to worry about CUDA drivers, Python environment conflicts, or manually provisioning expensive GPU instances. Instead, you interact with a large model marketplace through a single, unified interface. Whether you are testing a new image generator in the Replicate Playground or calling the API from a production app, the workflow is the same.
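To make “API-first” concrete, here is a minimal sketch using only Python’s standard library that builds the kind of HTTP request a client sends to run a model. It assumes Replicate’s REST endpoint for official models (POST /v1/models/{owner}/{name}/predictions with a JSON body of the form {"input": {...}} and a bearer token); the helper name, model name, and token are placeholders, and the request is built but never sent.

```python
import json
import urllib.request

API_BASE = "https://api.replicate.com/v1"


def build_model_prediction_request(
    owner: str, name: str, model_input: dict, token: str
) -> urllib.request.Request:
    """Build (but do not send) a POST that asks the API to run a model.

    Hypothetical helper: assumes the official-model predictions endpoint
    and an {"input": {...}} request body.
    """
    url = f"{API_BASE}/models/{owner}/{name}/predictions"
    body = json.dumps({"input": model_input}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",  # placeholder API token
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    req = build_model_prediction_request(
        "black-forest-labs", "flux-schnell", {"prompt": "a lighthouse at dusk"}, token="r8_example"
    )
    print(req.get_method(), req.full_url)
    # Sending it would be urllib.request.urlopen(req) with a real token.
```

Because the body is plain JSON, the same call works from any language or HTTP client; Replicate’s official SDKs wrap this request and wait for the prediction to finish.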

Simplifying Deployment with a Replicate Developer Tools Strategy

The “magic” of Replicate is the one-line deployment. For most developers, the journey starts with a simple import. You should be able to import an image generator the same way you import an npm package. This philosophy is evident in their replicate-101/ guides, which emphasize getting from “idea” to “running model” in minutes.

By integrating deeply with GitHub, Replicate allows developers to push custom models and have them automatically converted into a cloud API. This creates environment consistency that is often missing in the “it works on my machine” world of data science. Every model run on Replicate is versioned, making machine learning reproducible—a critical requirement for any production-grade software.
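Versioning is what makes runs reproducible: a reference pinned to a version always points at the same code and weights, while an unpinned one can change underneath you. The sketch below assumes references of the form owner/name or owner/name:version; parse_model_ref and require_pinned are hypothetical helpers, shown as a guard you might add to production code.

```python
from typing import NamedTuple, Optional


class ModelRef(NamedTuple):
    owner: str
    name: str
    version: Optional[str]  # None means the reference is not pinned


def parse_model_ref(ref: str) -> ModelRef:
    """Split an 'owner/name' or 'owner/name:version' reference (assumed format)."""
    path, _, version = ref.partition(":")
    owner, slash, name = path.partition("/")
    if not owner or not slash or not name or "/" in name:
        raise ValueError(f"expected 'owner/name[:version]', got {ref!r}")
    return ModelRef(owner, name, version or None)


def require_pinned(ref: str) -> ModelRef:
    """Reject unpinned references so every run uses the same model version."""
    parsed = parse_model_ref(ref)
    if parsed.version is None:
        raise ValueError(f"{ref!r} is not pinned to a version")
    return parsed
```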

Scaling and Hardware Flexibility

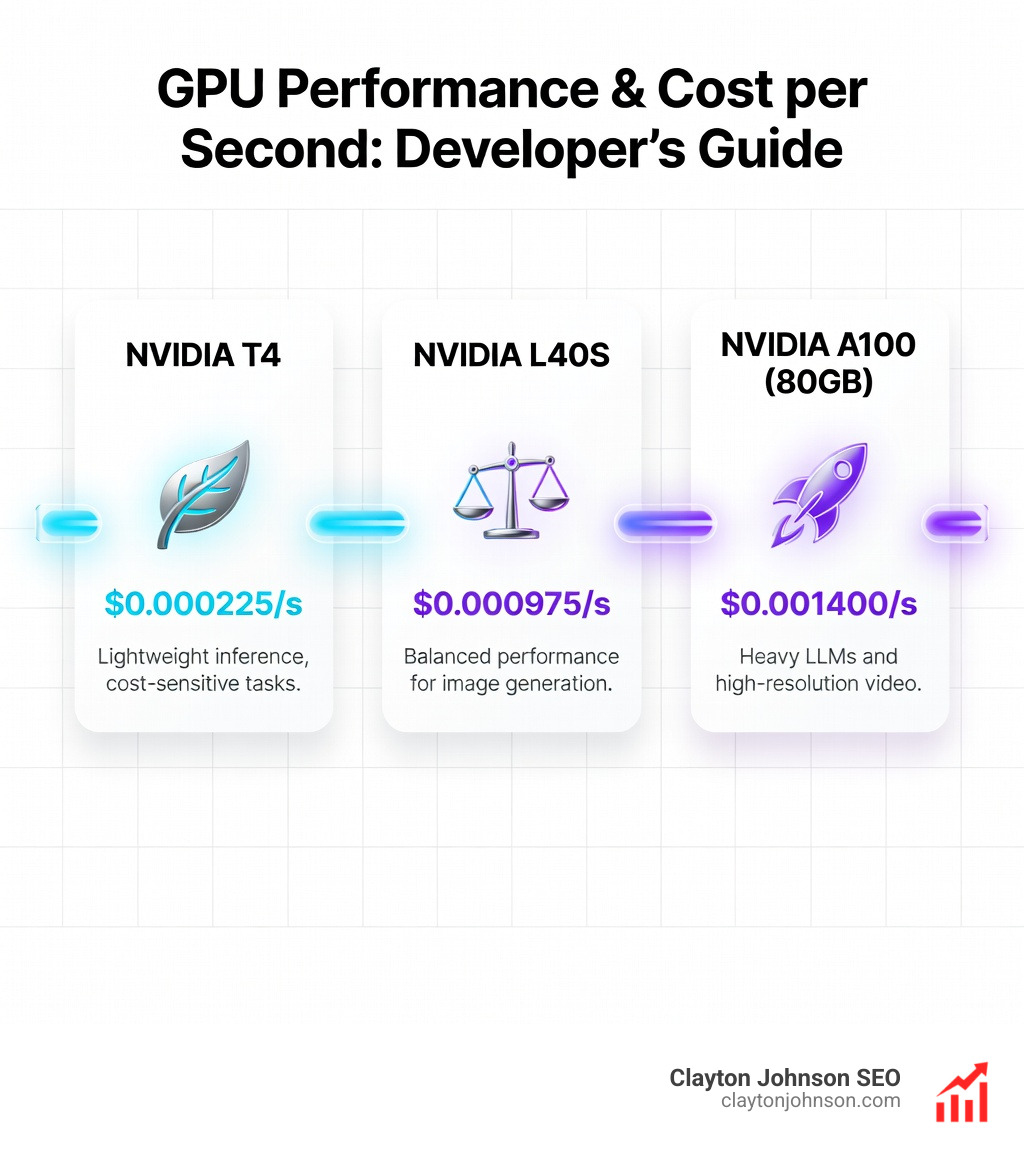

One of the biggest headaches in AI is managing hardware. Do you need an NVIDIA T4 for cost efficiency or an H100 for raw speed? Replicate removes the need to choose permanently. Its Deployments feature lets you change the hardware behind a model, for example moving from A100s to L40S GPUs, without changing a single line of client code.
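Hardware can change without code changes because clients address a deployment by name, not a GPU type. A minimal sketch, assuming Replicate’s deployment endpoint (POST /v1/deployments/{owner}/{name}/predictions); the helper, deployment name, and token are placeholders, and the request is only built, not sent:

```python
import json
import urllib.request


def build_deployment_request(
    owner: str, deployment: str, model_input: dict, token: str
) -> urllib.request.Request:
    # The client names only the deployment. Which GPU serves it is a
    # deployment setting, so switching from A100 to L40S needs no change here.
    url = f"https://api.replicate.com/v1/deployments/{owner}/{deployment}/predictions"
    return urllib.request.Request(
        url,
        data=json.dumps({"input": model_input}).encode("utf-8"),
        method="POST",
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
    )
```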

More importantly, Replicate’s developer tools strategy includes automatic scaling. The platform can scale from zero to hundreds of instances to absorb traffic spikes and then scale back to zero when idle. This “scale-to-zero” capability matters for startups because, for public models, you pay only for the seconds the GPU is actually processing your request.
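Scale-to-zero boils down to a policy that maps queued work to an instance count. The function below is a toy illustration of that idea, not Replicate’s actual autoscaler; per_instance_concurrency and max_instances are hypothetical parameters.

```python
import math


def desired_instances(pending_requests: int, per_instance_concurrency: int, max_instances: int) -> int:
    """Toy scale-to-zero policy (hypothetical, not Replicate's algorithm)."""
    if per_instance_concurrency <= 0 or max_instances < 0:
        raise ValueError("concurrency must be positive and the cap non-negative")
    if pending_requests <= 0:
        return 0  # no work queued: run (and pay for) nothing
    # Enough instances to cover the queue, capped at the configured maximum.
    needed = math.ceil(pending_requests / per_instance_concurrency)
    return min(needed, max_instances)


if __name__ == "__main__":
    # A traffic spike followed by an idle period.
    for pending in [0, 3, 40, 1000, 12, 0]:
        print(pending, "->", desired_instances(pending, per_instance_concurrency=4, max_instances=100))
```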

| GPU type | Price per second (USD) | Best use case |
|---|---|---|
| Nvidia T4 | $0.000225 | Lightweight inference, cost-sensitive tasks |
| Nvidia L40S | $0.000975 | Balanced performance for image generation |
| Nvidia A100 (80GB) | $0.001400 | Heavy LLMs and high-resolution video |
| 8x Nvidia A100 | $0.011200 | Massive batch processing and training |
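Because billing is per second, estimating cost is simple arithmetic. This sketch hard-codes the prices from the table (prices change over time, so treat them as examples) and assumes you are billed only for the seconds each prediction keeps the GPU busy; run_cost is a hypothetical helper.

```python
from decimal import Decimal

# Example per-second prices (USD) taken from the table; check current pricing.
PRICE_PER_SECOND = {
    "Nvidia T4": Decimal("0.000225"),
    "Nvidia L40S": Decimal("0.000975"),
    "Nvidia A100 (80GB)": Decimal("0.001400"),
    "8x Nvidia A100": Decimal("0.011200"),
}


def run_cost(gpu: str, seconds: float, runs: int = 1) -> Decimal:
    """Cost of `runs` predictions that each keep the GPU busy for `seconds`."""
    # Decimal avoids floating-point rounding noise in money math.
    return PRICE_PER_SECOND[gpu] * Decimal(str(seconds)) * runs


if __name__ == "__main__":
    for gpu in PRICE_PER_SECOND:
        print(f"{gpu}: ${run_cost(gpu, seconds=4, runs=1000)}")
```

For example, 1,000 four-second runs come to $0.90 on a T4 and $3.90 on an L40S. Since the L40S costs about 4.3 times as much per second, it is only cheaper per run if it finishes each job more than 4.3 times faster.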

Strategic Acquisition: How Cloudflare Enhances Replicate’s Reach

The announcement that Replicate is joining Cloudflare marks a massive shift in the “Connectivity Cloud” landscape. Cloudflare’s goal has always been to abstract away infrastructure complexity, and Replicate is the perfect missing piece for their AI vision.

By combining Replicate’s model management with Cloudflare’s global network, we are seeing the birth of a truly distributed AI cloud. Cloudflare Workers can now leverage Replicate’s expertise to run custom models and pipelines across thousands of edge locations. This reduces latency by bringing the “brain” (the AI model) closer to the “user” (the application).

Integrating the Replicate Developer Tools Strategy into Workers AI

The integration brings over 50,000 production-ready models directly to the fingertips of millions of Cloudflare users. This creates an “all-in-one shop” for AI development. Developers can discover a model in the Replicate catalog, fine-tune it with their own data, and deploy it as a serverless application on Cloudflare Connectivity Cloud with zero infrastructure overhead.

This move reinforces a broader trend: AI is moving from specialized data-science silos into general software engineering teams. When you can call a complex Flux image model or a Llama 3 LLM with one line of code from a Worker, teams can prototype and ship AI features far faster.

Open-Source Foundations and the Role of Cog

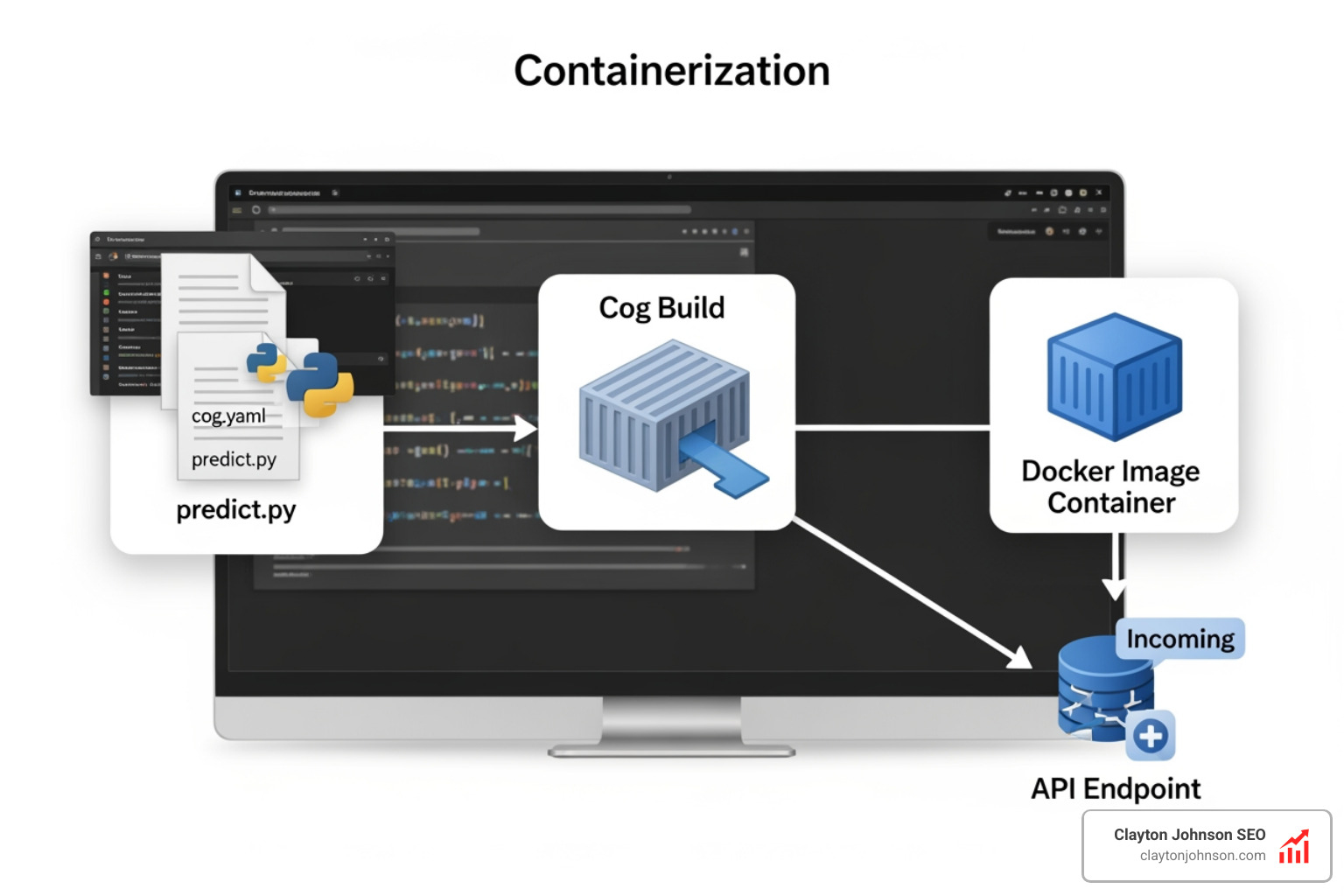

A key differentiator in Replicate’s developer tools strategy is its commitment to open source. Founders Ben Firshman (the creator of Docker Compose) and Andreas Jansson (a former Spotify research engineer) applied their deep infrastructure expertise to create Cog.

Cog is an open-source tool that packages machine learning models into standard, production-ready Docker containers. It tames the “dependency hell” of ML: you declare your environment in a cog.yaml file and your inference code in a predict.py file, and Cog builds a container from them. Every model you see on Replicate is packaged with Cog, so a model that runs on Replicate can also run on any machine that runs Docker containers. You can find the source and contribute at Cog on GitHub.
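A Cog model is essentially two files. The sketch below shows the shape of predict.py: setup() runs once when the container starts, and predict() runs once per request. To keep it runnable without installing Cog, the class does not subclass cog.BasePredictor as a real project would, and the “model” is a hypothetical stand-in; the cog.yaml in the comment is an illustrative example, not a required configuration.

```python
# predict.py: the shape of a Cog predictor, sketched without the cog package.
# In a real project this class would subclass cog.BasePredictor, and
# cog.yaml would point at it, for example:
#
#   build:
#     gpu: true
#     python_version: "3.11"
#     python_packages:
#       - "torch==2.3.1"
#   predict: "predict.py:Predictor"


class Predictor:
    def setup(self) -> None:
        """Runs once per container start: load weights here, not per request."""
        # Hypothetical stand-in for loading real model weights.
        self.model = str.upper

    def predict(self, prompt: str) -> str:
        """Runs once per request with the inputs the API caller sent."""
        return self.model(prompt)


if __name__ == "__main__":
    predictor = Predictor()
    predictor.setup()
    print(predictor.predict("hello from cog"))
```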

Automated SDKs with Stainless

Maintaining SDKs for multiple languages (Python, JavaScript, Go, Swift) is a massive burden for any API company. To solve this, Replicate adopted a strategy of automated generation using Stainless.

By using Stainless to turn its OpenAPI specs into high-quality SDKs, Replicate keeps its client libraries in sync with the underlying API. As noted in the Stainless SDK Case Study, this moved SDKs from a “maintenance liability” to a “DX advantage.” Developers get better type safety, improved auto-pagination, and faster access to new features without waiting for manual updates.
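Auto-pagination means the client follows page cursors for you instead of making you loop over list endpoints by hand. The generator below sketches that pattern; it assumes pages shaped like Replicate’s list responses ({"results": [...], "next": url or null}) and takes a hypothetical fetch_page function so it can run without network access. It illustrates the idea, not Stainless’s generated code.

```python
from typing import Any, Callable, Dict, Iterator, Optional


def iterate_all(first_url: str, fetch_page: Callable[[str], Dict[str, Any]]) -> Iterator[Any]:
    """Yield every item across cursor-paginated pages (assumed page shape)."""
    url: Optional[str] = first_url
    while url:
        page = fetch_page(url)
        yield from page["results"]
        url = page.get("next")  # None (JSON null) on the last page ends the loop


if __name__ == "__main__":
    # Fake two-page listing standing in for real HTTP responses.
    pages = {
        "page-1": {"results": ["a", "b"], "next": "page-2"},
        "page-2": {"results": ["c"], "next": None},
    }
    print(list(iterate_all("page-1", pages.__getitem__)))
```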

Market Positioning and Competitive Differentiation

While giants like AWS and Google Cloud offer raw GPU power, and Hugging Face offers a massive model repository, Replicate occupies a unique “middle ground” of extreme usability. They aren’t just a place to store models; they are a place to run them instantly.

Replicate’s developer tools strategy differentiates itself through:

- Simplicity: No need to manage Kubernetes clusters or VPCs.

- Community: Thousands of models contributed by users, with popular ones like prunaai/z-image-turbo reaching over 21.6M runs.

- Pricing: A pure pay-per-use model that avoids the “minimum monthly spend” traps of traditional cloud providers.

Traction and Growth Metrics

The numbers back up this strategy. Replicate has raised a total of $57.8 million in funding, with their most recent rounds valuing the company at $350 million. Their growth metrics demonstrate clear product-market fit:

- Active users grew by 150% in the last year.

- Monthly Recurring Revenue (MRR) increased by 40% year-over-year.

- Net Revenue Retention (NRR) is maintained at a healthy 120%.

- Customer Acquisition Cost (CAC) was reduced by 25%, suggesting that a developer-first, organic growth strategy works.

Real-World Impact and Future Roadmap

What can you actually build with these tools? The use cases are as diverse as the developer community itself. We’ve seen everything from:

- Autonomous Robots: Using zero-shot open-source models for navigation.

- Creative Apps: iPad apps that let users “paint with AI” in real-time.

- Enterprise Solutions: Companies using fine-tuned models to generate brand-consistent marketing assets.

Looking ahead, Replicate’s roadmap focuses on deeper enterprise features. This includes more robust production monitoring, canary releases (testing new model versions on a small slice of traffic), and zero-downtime deployments. As Replicate integrates further with Cloudflare, expect more “intelligent scaling” features aimed at reducing or eliminating cold starts for mission-critical applications.
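A canary release can be as simple as hashing each request into one of 100 buckets and sending a fixed share of buckets to the new version. The function below is a hypothetical sketch of that routing rule, not a Replicate feature or API; hashing instead of random choice keeps a given request ID on the same version every time.

```python
import hashlib


def pick_version(request_id: str, stable: str, canary: str, canary_percent: int) -> str:
    """Deterministically route about `canary_percent`% of requests to `canary`."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return canary if bucket < canary_percent else stable


if __name__ == "__main__":
    routed = [pick_version(f"req-{i}", "v1", "v2", canary_percent=10) for i in range(1000)]
    print("canary share:", routed.count("v2") / len(routed))
```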

Frequently Asked Questions about Replicate

How does Replicate simplify AI for developers?

Replicate abstracts the entire infrastructure stack. Instead of setting up servers and managing GPUs, you use Cog to package your model and the Replicate API to run it. It turns complex machine learning into a simple, scalable web service.

What is the benefit of the Cloudflare acquisition?

The acquisition marries Replicate’s model library with Cloudflare’s global edge network. This means developers can run AI inference closer to their users, significantly reducing latency while benefiting from Cloudflare’s security and serverless ecosystem.

How does Replicate handle scaling and costs?

Replicate uses a pay-per-use model. For public models, you are charged only for the seconds your model is actively running. Its automatic scaling handles everything from “scaling to zero” (no cost when idle) to scaling up to hundreds of GPUs during massive traffic spikes.

Conclusion

Replicate’s developer tools strategy is a masterclass in lowering the barrier to entry for complex technology. By focusing on the developer experience, open-source reliability, and strategic partnerships like the one with Cloudflare, it has made AI accessible to the “masses” of software engineers.

At Clayton Johnson SEO, we focus on these same principles of leverage and growth. Whether it’s building AI-assisted content systems or diagnosing growth problems through competitive analysis, we help founders and marketing leaders execute with measurable results. If you’re looking to bring these kinds of advanced workflows into your own marketing, our SEO Services are here to help you navigate the future of digital growth.