The Real Truth About Coding AI Growth

Why Enterprise Developers Are Betting Billions on AI Coding Tools

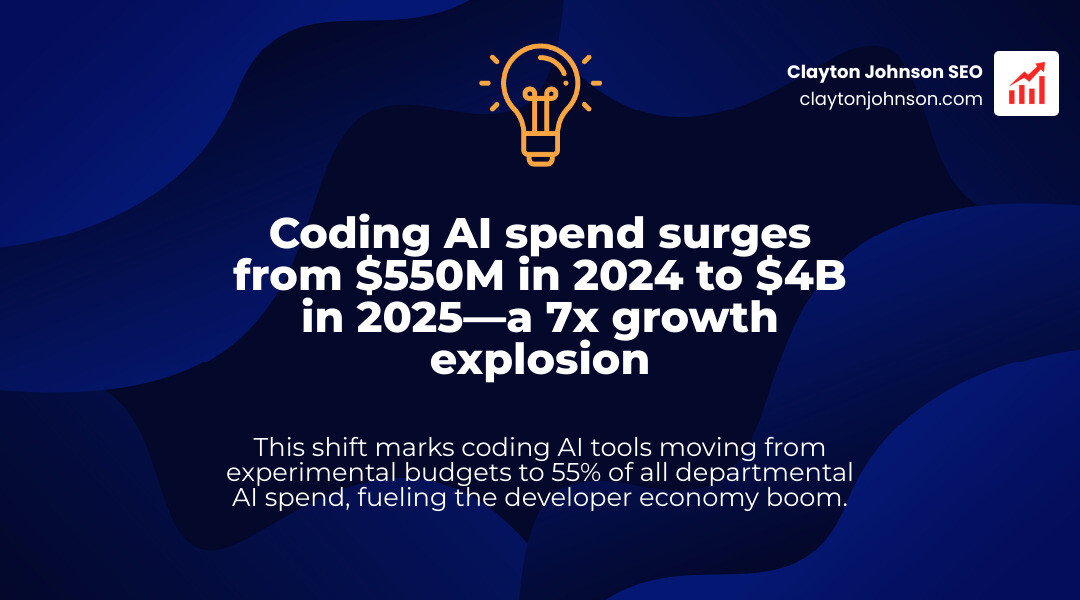

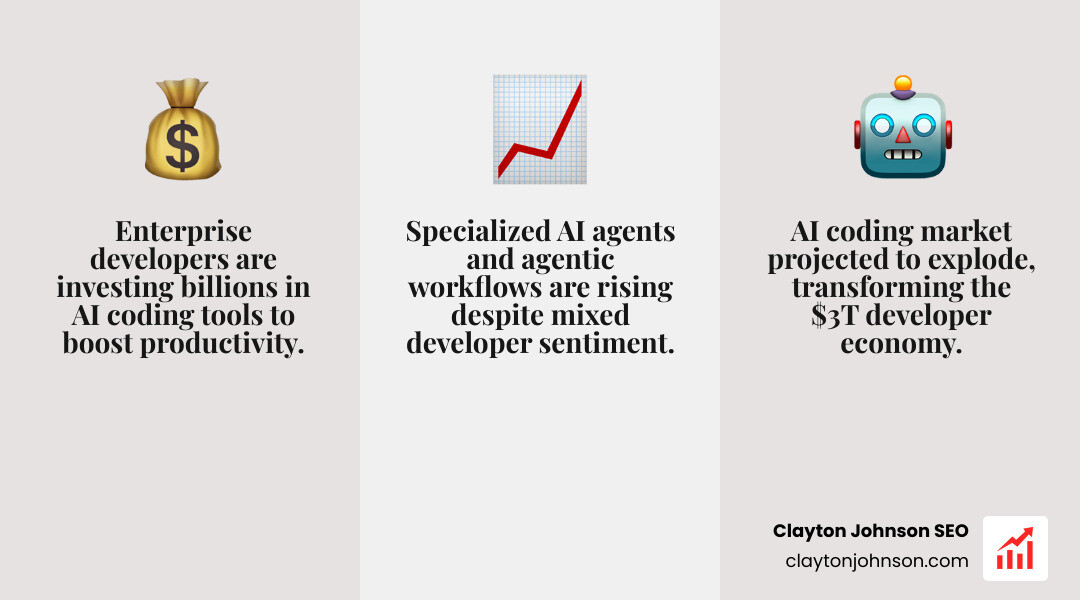

Coding has become the single largest category of enterprise AI spending in 2025, capturing 55% of all departmental AI budgets at $4 billion. That is roughly a 7x year-over-year increase from $550 million in 2024.

Key facts about Coding AI growth in 2025:

- Market size: $4B in coding AI spend out of $7.3B total departmental AI budget

- Growth rate: 7x year-over-year, fastest of any AI category

- Developer adoption: 84% using or planning to use AI tools (up from 76% in 2024)

- Daily usage: 51% of professional developers use AI coding tools every day

- Productivity gains: Teams report 15%+ velocity improvements across the development lifecycle

- Top tools: Cursor reached $500M ARR in 15 months; Claude Code hit $400M ARR in 5 months

But here’s what the numbers don’t tell you: positive sentiment is dropping even as adoption climbs. Only 60% of developers now view AI coding tools favorably, down from 70%+ in prior years. More developers distrust AI accuracy (46%) than trust it (33%). And 66% cite “almost right but not quite” solutions as their top frustration.

This isn’t hype. It’s not a bubble. It’s a messy, complicated change happening in real time – with real money, real productivity gains, and real friction points that nobody’s talking about honestly.

The companies winning this market didn’t hire enterprise sales teams. They didn’t run traditional marketing playbooks. They built tools developers actually wanted to use, then let product-led growth do the work. Cursor hit $200M in revenue before hiring a single enterprise sales rep. That’s not a marketing story – that’s a signal that the old rules don’t apply anymore.

I’m Clayton Johnson, and I’ve spent years building SEO and growth systems for companies navigating technology shifts like this one. What I’m seeing now is fundamentally different from past adoption curves, and the implications stretch far beyond productivity metrics into how we think about software careers, education, and the $3 trillion global developer economy.

The Economic Explosion of Coding AI Growth

The sheer scale of coding AI growth has caught many industry analysts off guard. While total departmental AI spending hit $7.3 billion in 2025, a massive $4 billion of that was funneled directly into engineering and product teams. This represents a 7x year-over-year jump. To put that in perspective, while marketing and customer support are often cited as prime AI use cases, they combined for less than 20% of the total spend.
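The headline percentages follow directly from the dollar figures quoted above. As a quick sanity check, here is a minimal sketch in Python; the variable names are ours, and the inputs are the rounded figures from this article, so the results are approximate.

```python
# Figures quoted in this article, in millions of US dollars (rounded).
coding_spend_2025 = 4_000
total_departmental_spend_2025 = 7_300
coding_spend_2024 = 550

# Share of departmental AI budgets that went to coding tools.
share = coding_spend_2025 / total_departmental_spend_2025

# Year-over-year growth multiple for coding AI spend.
growth_multiple = coding_spend_2025 / coding_spend_2024

print(f"Coding share of departmental AI spend: {share:.0%}")
print(f"Year-over-year multiple: {growth_multiple:.1f}x")
```

The share rounds to 55%, and the multiple lands slightly above the 7x quoted in the text, which is what you would expect from rounded inputs.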

Why is the money flowing here? Because unlike a “creative” AI tool where ROI can be fuzzy, coding is highly measurable. We can track lines of code shipped, pull requests merged, and deployment velocity. According to the Menlo Ventures 2025 State of Generative AI report, coding has become AI’s first true “killer use case.”

The market is currently consolidating around a few dominant players. Cursor, an AI-native IDE, reached $500 million in ARR and an almost $10 billion valuation within just 15 months. Anthropic’s Claude Code has followed a similarly explosive trajectory, hitting $400 million in ARR in less than half a year. These tools didn’t win through aggressive enterprise sales; they won through a pure Product-Led Growth (PLG) motion. Developers tried them, realized they worked, and pulled them into the enterprise.

Measuring Productivity Gains in Coding AI Growth

If you ask a developer why they use these tools daily, the answer usually comes down to “velocity.” We aren’t just talking about typing faster; we’re talking about a fundamental shift in the software development lifecycle (SDLC).

Teams are reporting 15%+ velocity gains across the board. In top-quartile organizations – the ones most aggressive about AI integration – daily usage of AI tools sits at 65%. For these high-performers, AI is no longer a “plugin”; it is the primary interface for writing software.

The Stack Overflow 2025 Survey reveals that 51% of professional developers now use these tools daily. The biggest gains are seen in:

- Prototyping: Building a functional “vibe” of an app in minutes rather than days.

- Boilerplate: Eliminating the repetitive setup tasks that used to take up the first 20% of every project.

- Refactoring: Using tools like Claude Code to restructure legacy codebases with a single prompt.

Navigating the Risks of Rapid Coding AI Growth

However, we have to acknowledge the growing friction. Despite the massive growth in coding AI, a “trust gap” is forming. The more developers use these tools, the more they realize their limitations.

Currently, 46% of developers say they distrust the accuracy of AI-generated code, compared to only 33% who trust it. This isn’t just skepticism; it’s a reaction to the “almost right” phenomenon. AI models are incredibly good at generating code that looks perfect but contains a subtle logic error or a security vulnerability.

Scientific research on AI accuracy suggests that while AI can handle simple functions with high reliability, its performance on complex, multi-file architectural tasks still requires heavy human intervention. This has led to “debugging fatigue,” where developers spend more time hunting for AI-generated hallucinations than they would have spent writing the code from scratch.

Why Developer Sentiment is Souring Despite Adoption

It sounds like a paradox: why is usage at an all-time high (84%) while favorability is at a record low (60%)?

The answer lies in the nature of the work. As we move from “AI as a search engine” to “AI as a co-pilot,” the stakes get higher. The top frustration for 66% of developers is the “almost right” solution. It’s the code that compiles but fails in production under edge cases.

According to the latest research on developer sentiment, the “honeymoon phase” of AI coding is over. Developers are now in the “grind phase,” where they realize that using AI doesn’t mean working less; it means working differently.

- 45% of developers report that debugging AI-generated code is more frustrating than debugging their own.

- Human verification has become the most critical skill in the stack. We are shifting from being “writers” to being “editors.”

- Shallow learning curves: There is a fear that junior developers are losing the “discovery phase” of learning because the AI gives them the answer too quickly, preventing them from understanding the why behind the code.

The Rise of Specialized AI Agents and Agentic Workflows

We are now moving beyond simple code completion into the era of AI agents. These are tools that don’t just suggest a line of code; they can take a high-level goal (e.g., “Add a login page with Google OAuth”), plan the steps, write the code, run the tests, and open a pull request.

The GitHub Octoverse analysis shows that 1 in 7 pull requests now involves an AI agent – a 14x increase since early 2024. However, this “agentic” shift brings new headaches:

- Accuracy (cited by 87% of developers): The fear that an autonomous agent will make a massive architectural mistake.

- Security (cited by 81%): The risk of agents pulling in insecure dependencies or leaking API keys.

To manage this, a new “Agent Stack” is emerging. Developers are using orchestration frameworks like LangChain and local model runners like Ollama to build guardrails around these agents.
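To make “guardrails” concrete, here is a minimal sketch of one check a team might run on an agent’s proposed changes before a pull request is opened. It targets the two security risks named above: leaked API keys and unvetted dependencies. This is an illustration in plain Python, not how LangChain or Ollama work. The function name `review_agent_diff`, the patterns, and the allowlist are all hypothetical, and a real secret scanner uses far more rules.

```python
import re

# Hypothetical patterns for illustration only; production scanners use much larger rule sets.
SECRET_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),  # string shaped like an AWS access key ID
    re.compile(r"(api[_-]?key|secret|token)\s*[:=]\s*\S{12,}", re.IGNORECASE),
]

# Hypothetical allowlist of dependencies the team has already vetted.
APPROVED_DEPENDENCIES = {"requests", "flask", "pytest"}


def review_agent_diff(added_lines, new_dependencies):
    """Return human-readable findings; an empty list means nothing was flagged."""
    findings = []
    for number, line in enumerate(added_lines, start=1):
        if any(pattern.search(line) for pattern in SECRET_PATTERNS):
            findings.append(f"line {number}: possible hard-coded secret")
    for dep in sorted(set(new_dependencies) - APPROVED_DEPENDENCIES):
        findings.append(f"dependency '{dep}' is not on the approved list")
    return findings


# Example: an agent's change that hard-codes a key and adds an unknown package.
findings = review_agent_diff(
    added_lines=['API_KEY = "sk_live_1234567890abcdef"', "print('hello')"],
    new_dependencies=["requests", "leftpad-ng"],
)
for finding in findings:
    print(finding)
```

A check like this doesn’t make an agent trustworthy on its own. It simply forces a human to look before anything flagged reaches a pull request, which is the “editor” role described earlier.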

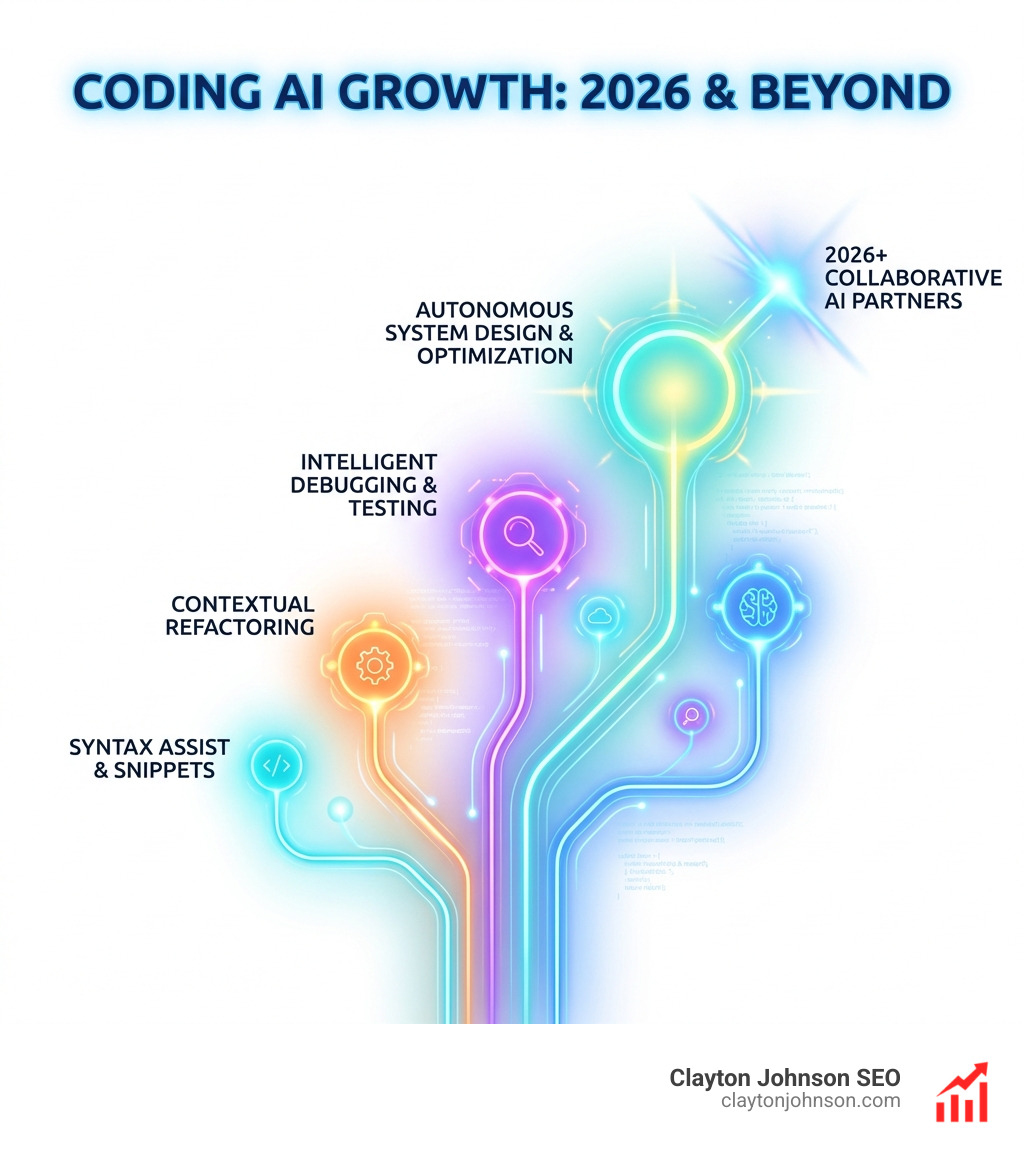

Integration Stages in the Development Lifecycle

Where exactly is coding AI growth hitting the hardest? It’s not equal across the workflow.

- Documentation (Most Integrated): Almost everyone is using AI to write README files and docstrings.

- Testing: AI is excellent at generating unit tests, though 45% of devs still struggle with debugging them.

- Legacy Migration: This has become a “killer app.” Enterprises are using AI to move from COBOL or Fortran to Java and Python at record speeds.

- Code Review: Tools like CodeRabbit and GitHub’s Copilot code review are now used by 72% of developers to catch errors before they hit production.

- Deployment (Least Integrated): 76% of developers still refuse to let AI touch deployment. The risk of an automated system taking down a production server is still too high.

The Future of the $3T Developer Economy and Junior Talent

The most sobering part of the coding AI growth story is its impact on the next generation of talent. The global developer economy is estimated at $3 trillion, assuming roughly 30 million developers each generate $100,000 in economic value.

But for those just starting out, the “promise” of a coding career feels volatile. According to a Stanford Digital Economy Study, IT and software engineering jobs for workers aged 22-25 have declined by 6%, while hiring for experienced developers (ages 35-49) has increased by 9%.

Companies are hesitant to hire juniors because AI can now do the “grunt work” that interns and entry-level devs used to handle. In 2024, entry-level tech hiring decreased by 25%. This is creating a “seniority gap” that could haunt the industry in a decade.

Education is also being disrupted. 97% of students now use AI for their studies. While this helps with exam scores (showing a 10% improvement), there is a significant concern about skill atrophy. If you never learn to struggle with a bug because the AI fixed it for you, do you actually know how to code?

Frequently Asked Questions about Coding AI Growth

Why is coding the largest category of AI spending?

Coding represents 55% of departmental AI spend because it offers the clearest ROI. Developers are expensive, and their output is digital and easily measured. A 20% boost in developer productivity translates directly to millions of dollars in saved time and faster product launches. Furthermore, the “Claude Sonnet 3.5 inflection point” in mid-2024 proved that models could finally handle complex logic reliably enough for professional use.
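To see how a productivity boost turns into “millions,” here is a back-of-the-envelope sketch. Apart from the 20% boost from the answer above and the $10,000 per-developer tooling cost mentioned later in this FAQ, every input is a hypothetical assumption (team size, loaded cost), so treat the result as an illustration, not a benchmark.

```python
# Hypothetical inputs; substitute your own figures.
team_size = 100                 # assumed number of developers
loaded_cost_per_dev = 150_000   # assumed annual fully loaded cost per developer, USD
productivity_boost = 0.20       # the 20% example used in the answer above
tool_cost_per_dev = 10_000      # upper-end annual tooling cost quoted later in this FAQ, USD

# Value of developer time freed up by the productivity boost.
gross_value = team_size * loaded_cost_per_dev * productivity_boost

# Subtract what the tooling itself costs across the team.
net_value = gross_value - team_size * tool_cost_per_dev

print(f"Gross annual value of time saved: ${gross_value:,.0f}")
print(f"Net of tooling cost: ${net_value:,.0f}")
```

Under these assumptions the gross figure comes to $3 million a year and about $2 million after tooling costs. The model also assumes saved time converts fully into useful output, which the debugging overhead described earlier suggests is optimistic.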

Are AI coding tools making junior developers redundant?

It’s a complicated “yes and no.” AI has made the tasks of a 2015-era junior developer redundant (writing boilerplate, basic debugging, simple CSS). However, it has created a new role for “AI-augmented” juniors who can act as project managers for AI agents. The risk is that without the “manual” experience, these juniors may struggle to become the senior architects of the future.

What are the biggest technical challenges with AI agents in 2025?

Accuracy remains the #1 hurdle (cited by 87% of developers). “Hallucinations” in code are often harder to find than standard syntax errors. Security is #2 (81%), as agents can inadvertently introduce vulnerabilities. Lastly, the cost of running high-context models (like Claude Opus) on large repositories can reach $10,000+ per developer per year, leading to “capex fatigue” in some organizations.

Conclusion

The coding AI growth we’ve witnessed over the last 15 months isn’t just a trend – it’s a fundamental re-architecting of how human beings create technology. We are moving toward a world where the “vibe” or the “intent” matters more than the syntax. But as we’ve seen, that transition is fraught with distrust, debugging headaches, and a shifting job market.

At the end of the day, AI isn’t replacing the developer; it’s replacing the keyboard. The developers who thrive will be those who stop focusing on individual lines of code and start focusing on overall software architecture and agent orchestration.

We at Clayton Johnson specialize in helping organizations navigate these types of massive technological shifts. Whether you’re looking to optimize your SEO strategy or integrate AI-assisted workflows into your operations, we provide the frameworks to help you execute with measurable results. The “killer use case” for AI has arrived, and it’s time to build.