The AI Selection Mistakes That Are Costing You Clarity (and Budget)

Deciding how to evaluate AI is one of the most important calls a founder or marketing leader can make, and most teams get it wrong.

Here’s a quick framework to evaluate any AI tool before you commit:

- Define the task — Can AI actually complete it reliably, or are you hoping it can?

- Check the ground truth — What data was used to train and validate the model? Is it objective or subjective?

- Test with real inputs — Run your actual use cases, not vendor demos.

- Measure what matters — Use task-specific metrics (accuracy, tool use, completion rate), not just overall benchmark scores.

- Verify outputs — Check for hallucinations, fabricated sources, and factual errors.

- Assess consistency — Run the same prompt multiple times. Does it produce reliable results?

- Evaluate ethics and bias — Does the model behave fairly across different inputs and user types?

Most teams pick AI tools based on benchmark scores and demo videos. Then they deploy — and reality hits.

The outputs are inconsistent. The agent breaks on edge cases. A fix in one area creates three new failures. This is what practitioners call “vibe-testing” — informal, reactive, and impossible to defend when things go wrong.

The problem isn’t the AI. It’s the evaluation process. Or rather, the lack of one.

Frontier models have jumped from 40% to over 80% on rigorous coding benchmarks in a short time. But teams that lack structured evaluation frameworks don’t benefit from that progress — they can’t even measure it. Without a repeatable way to assess AI performance, every model upgrade becomes a guessing game and every deployment a risk.

I’m Clayton Johnson, an SEO and growth strategist who has built AI-assisted marketing workflows and evaluation systems across a range of business models — and knowing how to evaluate AI has been the difference between systems that compound and tools that just create noise. This guide gives you the structured framework to make that call clearly.

The Core Framework: Moving Beyond Single-Turn Benchmarks

When we first start looking at how to evaluate AI, it’s tempting to look at a single score. We want a “grade” that tells us if the AI is smart. But in a production environment, which is where our Minneapolis-based work on structured growth architecture happens, a single-turn benchmark is almost useless.

A single-turn evaluation is like asking a job candidate one trivia question and hiring them based on the answer. Real AI agents operate in layers. They don’t just “answer”; they reason, use tools, and modify the state of their environment.

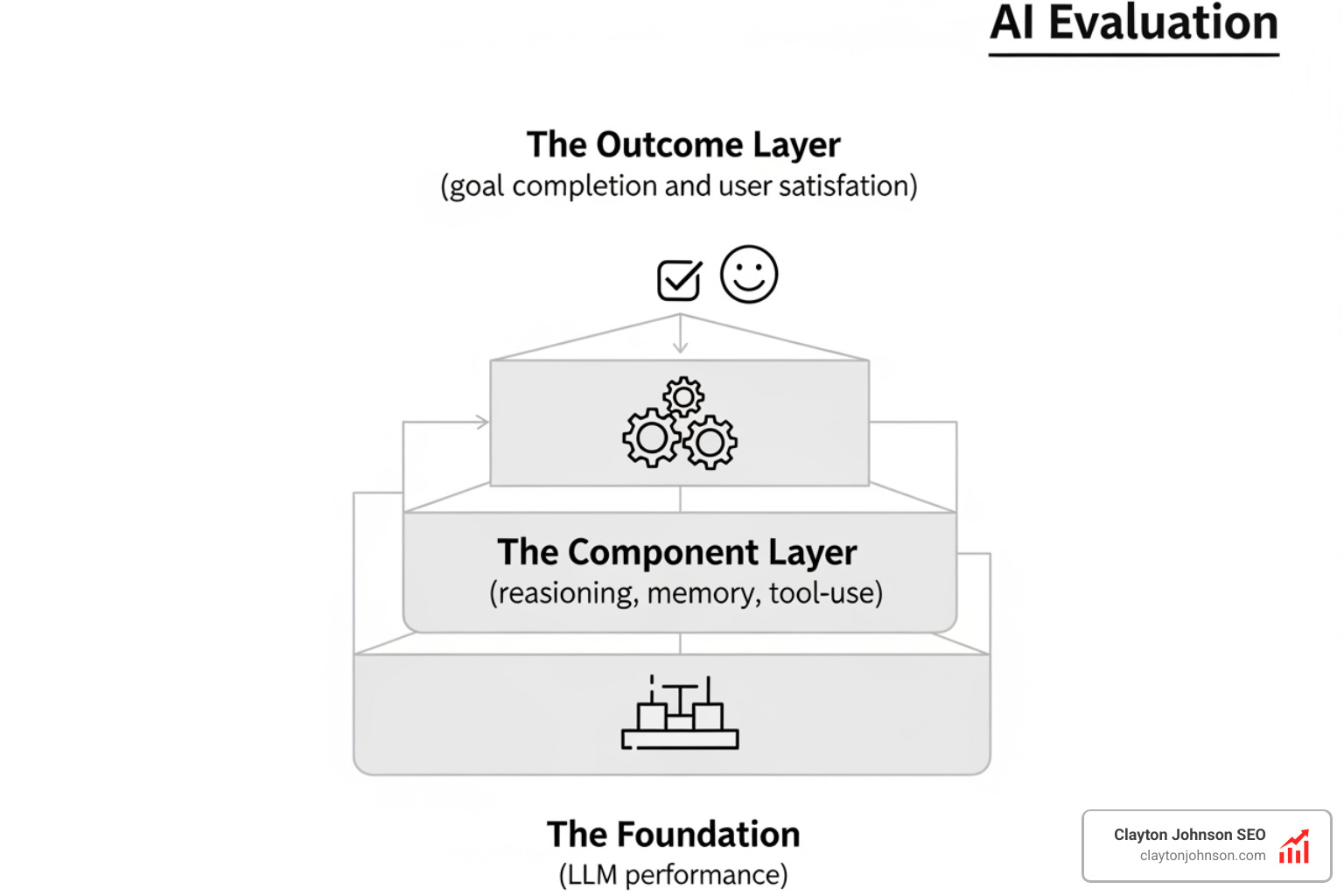

To build a robust evaluation, we must look at three distinct layers:

- The Foundation: The raw capability of the model.

- The Component Layer: How well the agent handles specific sub-tasks like intent detection, memory retrieval, and tool use.

- The Outcome Layer: Did the agent actually solve the user’s problem?

We recommend using a mix of deterministic graders and model-based evaluation. Deterministic graders are the “gold standard” because they are objective and fast—think of a script that checks if a piece of code actually runs. However, for nuanced tasks like tone or empathy, we often turn to Amazon Bedrock AgentCore Evaluations, which provides automated assessment tools to measure how well agents handle multi-turn conversations and edge cases.
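To make the "script that checks if a piece of code actually runs" idea concrete, here is a minimal sketch of a deterministic grader. The function name `grade_python_snippet` and the five-second timeout are illustrative assumptions, not part of any tool mentioned in this article.

```python
import subprocess
import sys


def grade_python_snippet(source: str, timeout_s: float = 5.0) -> bool:
    """Deterministic grader: True if the snippet exits cleanly within the timeout.

    Assumption: the snippet is trusted enough to run locally. A subprocess is
    not a sandbox, so untrusted agent output needs real isolation (container, VM).
    """
    try:
        completed = subprocess.run(
            [sys.executable, "-c", source],
            capture_output=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        # A hang counts as a failure, which keeps the grader fast and objective.
        return False
    return completed.returncode == 0


if __name__ == "__main__":
    print(grade_python_snippet("print(sum(range(10)))"))
    print(grade_python_snippet("import nonexistent_module_xyz"))
```

The verdict is a plain pass or fail with no judgment involved, which is what makes this kind of grader cheap to run on every change.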

Why Agentic Systems Require a Different Approach to How to Evaluate AI

Evaluating an agent is significantly more complex than evaluating a standard chatbot. Agents use tools across many turns, modifying state in the environment and adapting as they go. This means mistakes can propagate and compound. If an agent makes a small error in step two of a ten-step process, that error can lead to a catastrophic failure by step ten.

Experience with agent benchmarks such as τ²-bench shows an added complication: frontier models can find creative solutions that a static eval never anticipated. For instance, an agent might find a “loophole” in a booking policy that technically solves the user’s request but fails a rigid, pre-defined evaluation script.

When we evaluate an agent, we are evaluating the “harness” (the code that gives the AI tools) and the model working together. You cannot separate the two. A brilliant model in a poorly designed harness will still fail your business objectives.

Measuring Success with pass@k and pass^k Metrics

Because AI is non-deterministic—meaning it can give different answers to the same prompt—we can’t rely on a single trial. If an agent succeeds once, is it “good,” or did it just get lucky?

This is where statistical metrics like pass@k and pass^k come in:

- pass@k: This measures the likelihood that an agent gets at least one correct solution in k attempts. It tells you the “upside” potential of the model.

- pass^k: This measures the probability that all k trials succeed. This is a much harder bar to clear and is essential for high-stakes enterprise workflows where consistency is non-negotiable.

If your agent has a 75% per-trial success rate, the probability of passing three consecutive trials (pass^3) drops to roughly 42%. If your business requires 99% reliability, a 75% success rate is a failure.
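The arithmetic behind those figures is short enough to check yourself. The sketch below assumes every trial is independent and has the same per-trial success rate `p`, which is a simplification; the function names are illustrative.

```python
def pass_at_k(p: float, k: int) -> float:
    """Probability of at least one success in k independent trials."""
    return 1.0 - (1.0 - p) ** k


def pass_hat_k(p: float, k: int) -> float:
    """Probability that all k independent trials succeed (pass^k)."""
    return p ** k


if __name__ == "__main__":
    p = 0.75
    for k in (1, 3, 10):
        print(f"k={k}: pass@k={pass_at_k(p, k):.4f}  pass^k={pass_hat_k(p, k):.4f}")
```

With `p = 0.75` and `k = 3`, pass^k is 0.75 × 0.75 × 0.75 ≈ 0.42, while pass@k is about 0.98. The same agent looks excellent or unreliable depending on which question you ask.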

Avoiding the Ground Truth Trap in AI Selection

The most dangerous mistake in how to evaluate AI is failing to scrutinize the “ground truth.” Ground truth is the set of labels or “correct answers” used to train and test the system. But here is the secret: ground truth is often just a collection of subjective human opinions.

| Feature | Objective Ground Truth | Subjective Ground Truth |
|---|---|---|
| Examples | Code execution, math answers, database state | Tone, summaries, creative writing |
| Verification | Can be verified by a script | Requires expert human judgment |
| Risk | Low (True/False) | High (Bias, inconsistency) |
| Scalability | High | Low |

In the study Is AI Ground Truth Really True?, researchers found that AI tools often fail in production because they were validated against “know-what” (simple labels) instead of “know-how” (the complex deliberative process experts use). For example, a medical AI might be trained to spot a tumor on an image, but it lacks the “know-how” of a doctor who considers the patient’s entire history.
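One way to probe how subjective a label set is (our suggestion, not something the study prescribes) is to have two reviewers label the same items and measure how often they agree beyond chance. The sketch below computes Cohen's kappa by hand; the function name and sample labels are hypothetical.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: both annotators pick the same label independently.
    expected = sum(
        (counts_a[label] / n) * (counts_b[label] / n)
        for label in set(counts_a) | set(counts_b)
    )
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1.0 - expected)


if __name__ == "__main__":
    reviewer_1 = ["good", "good", "bad", "bad"]
    reviewer_2 = ["good", "bad", "bad", "bad"]
    print(cohens_kappa(reviewer_1, reviewer_2))
```

A low kappa suggests the "correct answers" are closer to opinion than fact, so a model scored against them inherits that disagreement.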

Identifying Hallucinations and Fabricated Sources

We must distinguish between factual requests and conversational requests. An AI can be incredibly charming and conversational while being factually bankrupt.

Generative AI predicts the next word; it does not “think” in the human sense. This leads to the creation of fabricated sources—links that look real but lead nowhere, or citations of laws that don’t exist. When we evaluate AI, we must implement verification steps that cross-reference outputs against a trusted knowledge base. If an AI tool cannot provide a verifiable “paper trail” for its claims, it is a liability, not an asset.
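A verification step of this kind can start very simply. The sketch below assumes you keep an allowlist of URLs you already trust and flags any citation outside it. The `Citation` type, the function name, and the example URLs are all hypothetical, and a real check would also confirm that the page supports the claim.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Citation:
    claim: str
    url: str


def unverified_citations(
    citations: Iterable[Citation], trusted_urls: Iterable[str]
) -> List[Citation]:
    """Return citations whose URL is not in the trusted knowledge base."""
    # Ignore a trailing slash so equivalent URLs compare equal.
    trusted = {url.rstrip("/") for url in trusted_urls}
    return [c for c in citations if c.url.rstrip("/") not in trusted]


if __name__ == "__main__":
    trusted = ["https://example.com/policies/refunds"]
    output = [
        Citation("Refunds are allowed within 30 days", "https://example.com/policies/refunds/"),
        Citation("Statute 12-B requires a receipt", "https://example.com/laws/12-b"),
    ]
    for citation in unverified_citations(output, trusted):
        print("Needs human review:", citation.url)
```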

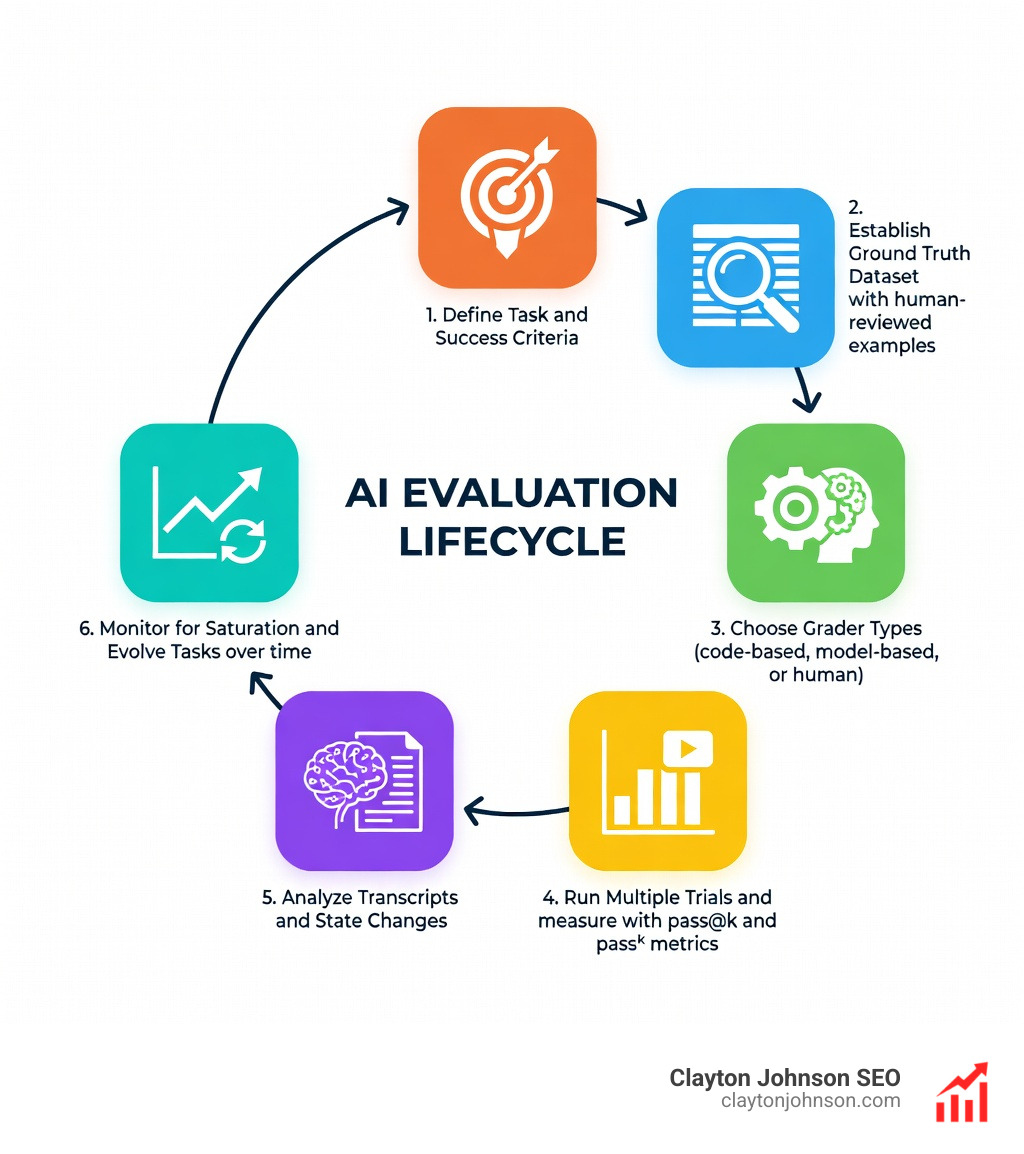

A Practical Roadmap for Implementing How to Evaluate AI

You don’t need a perfect system on day one. In fact, waiting for perfection is a great way to fall behind. We recommend a “zero to production” roadmap:

- Collect 20-50 Real Failures: Don’t use generic datasets. Pull real examples from your customer support logs or manual testing where the AI failed.

- Build an Evaluation Harness: This is an isolated environment where you can run your agent against these tasks without affecting real data.

- Deploy Graders: Use a mix of simple code checks (e.g., “Did it return a valid JSON?”) and LLM-as-a-judge (e.g., “Was the tone professional?”).

- Run Regression Testing: Every time you change a prompt or update a model, run your entire suite. This ensures that fixing one bug didn’t create three new ones.

Tools like Promptfoo and Braintrust are excellent for managing this lifecycle. They allow you to visualize how different prompts perform across hundreds of test cases simultaneously.
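Before adopting a tool, it can help to see how small the core loop is. The following is a minimal sketch of a harness with one deterministic grader and a regression check. Every name here (`EvalTask`, `run_suite`, `find_regressions`, the stub agents) is hypothetical and does not reflect the API of Promptfoo or Braintrust.

```python
import json
from typing import Callable, Dict, List, NamedTuple


class EvalTask(NamedTuple):
    task_id: str
    prompt: str
    grader: Callable[[str], bool]


def is_valid_json(output: str) -> bool:
    """Deterministic grader: did the agent return parseable JSON?"""
    try:
        json.loads(output)
    except json.JSONDecodeError:
        return False
    return True


def run_suite(agent: Callable[[str], str], tasks: List[EvalTask]) -> Dict[str, bool]:
    """Run every task through the agent and record pass/fail per task."""
    return {task.task_id: task.grader(agent(task.prompt)) for task in tasks}


def find_regressions(baseline: Dict[str, bool], current: Dict[str, bool]) -> List[str]:
    """Tasks that passed in the baseline run but do not pass now."""
    return sorted(
        task_id
        for task_id, passed in baseline.items()
        if passed and not current.get(task_id, False)
    )


if __name__ == "__main__":
    tasks = [
        EvalTask("refund-1", "Process a refund for order 123", is_valid_json),
        EvalTask("cancel-1", "Cancel order 456", is_valid_json),
    ]

    def old_agent(prompt: str) -> str:  # stand-in for the current prompt/model
        return '{"status": "ok"}'

    def new_agent(prompt: str) -> str:  # stand-in for the changed prompt/model
        return '{"status": "ok"}' if "refund" in prompt else "Sure! Here you go."

    print(find_regressions(run_suite(old_agent, tasks), run_suite(new_agent, tasks)))
```

An LLM-as-a-judge grader would slot in as another `grader` callable with the same signature, so code checks and model-based checks can share one suite.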

Integrating Automated Evals with Human-in-the-Loop Systems

Automated evals are great for speed, but they can’t catch everything. Human-in-the-loop (HITL) is essential for calibrating your automated graders.

We suggest a “Swiss Cheese” approach to safety. No single layer of evaluation is perfect. But when you layer automated checks, human reviews, and an adversarial testing protocol (where you actively try to “break” the AI), you create a robust system where errors are caught before they reach the customer.
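Calibration can be as simple as comparing automated verdicts with human verdicts on the same cases. The sketch below assumes both are stored as pass/fail dictionaries keyed by a case ID; the function name and data shape are illustrative.

```python
from typing import Dict, List, Tuple


def calibration_report(
    auto_verdicts: Dict[str, bool], human_verdicts: Dict[str, bool]
) -> Tuple[float, List[str]]:
    """Return (agreement rate, case IDs where the grader and the human disagree)."""
    shared = sorted(set(auto_verdicts) & set(human_verdicts))
    if not shared:
        raise ValueError("no cases were labeled by both the grader and a human")
    disagreements = [
        case_id for case_id in shared
        if auto_verdicts[case_id] != human_verdicts[case_id]
    ]
    agreement = 1.0 - len(disagreements) / len(shared)
    return agreement, disagreements


if __name__ == "__main__":
    auto = {"a": True, "b": True, "c": False, "d": True}
    human = {"a": True, "b": False, "c": False, "d": True}
    rate, to_review = calibration_report(auto, human)
    print(f"agreement={rate:.2f}, review these cases: {to_review}")
```

The disagreement list is the useful part: those are the cases to read by hand before you trust the automated grader with more traffic.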

Handling Eval Saturation and Long-Term Maintenance

A common pitfall is “eval saturation.” This happens when your agent becomes so good at your test suite that it scores 100% every time. While that feels good, it means your evaluation is no longer providing a signal for improvement.

Look at SWE-bench Verified. Coding models jumped from roughly 40% to over 80% in a single year. When a benchmark nears 80-90%, it’s time to retire it and build harder, more complex tasks. Evaluation is a living artifact. If your test suite hasn’t changed in six months, you aren’t measuring progress; you’re just checking a box.
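A rough way to spot saturation in your own suite is to look for tasks that pass on every recent run, since they no longer separate a good change from a bad one. This is a sketch under assumed names: `history` maps a task ID to its recent pass/fail results, and the 0.9 threshold is an arbitrary starting point.

```python
from typing import Dict, List, Tuple


def saturation_report(
    history: Dict[str, List[bool]], threshold: float = 0.9
) -> Tuple[bool, List[str]]:
    """Return (is the suite saturated?, tasks that passed on every recorded run)."""
    if not history:
        raise ValueError("history is empty")
    always_passing = sorted(
        task_id for task_id, runs in history.items() if runs and all(runs)
    )
    share = len(always_passing) / len(history)
    return share >= threshold, always_passing


if __name__ == "__main__":
    recent = {
        "refund-1": [True, True, True],
        "cancel-1": [True, False, True],
        "upgrade-1": [True, True, True],
    }
    print(saturation_report(recent))
```

Tasks on the always-passing list are still useful as regression checks, but they are candidates to supplement with harder variants.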

Common Pitfalls in AI Agent Evaluation Design

The biggest danger in AI evaluation is overfitting. This happens when you tweak your prompts so much to pass a specific test that the AI becomes worse at handling real-world variety.

Another pitfall is ignoring the “Swiss Cheese Model” of safety. If you only evaluate for accuracy, you might miss issues with latency or cost. An agent that is 99% accurate but takes three minutes to respond and costs $5 per query is likely a poor fit for a customer service role.
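One way to keep latency and cost in view is to gate a release on several budgets at once rather than on accuracy alone. The sketch below uses hypothetical field names and thresholds; set the budgets from your own service requirements.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RunMetrics:
    accuracy: float
    latency_s: float
    cost_per_query_usd: float


@dataclass
class Budget:
    min_accuracy: float
    max_latency_s: float
    max_cost_per_query_usd: float


def budget_violations(metrics: RunMetrics, budget: Budget) -> List[str]:
    """List every budget the run breaks; an empty list means it passes the gate."""
    problems = []
    if metrics.accuracy < budget.min_accuracy:
        problems.append("accuracy")
    if metrics.latency_s > budget.max_latency_s:
        problems.append("latency")
    if metrics.cost_per_query_usd > budget.max_cost_per_query_usd:
        problems.append("cost")
    return problems


if __name__ == "__main__":
    # The agent from the example above: 99% accurate, three minutes, $5 per query.
    slow_agent = RunMetrics(accuracy=0.99, latency_s=180.0, cost_per_query_usd=5.0)
    support_budget = Budget(min_accuracy=0.95, max_latency_s=10.0, max_cost_per_query_usd=0.25)
    print(budget_violations(slow_agent, support_budget))
```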

Teams should also consult NIST’s AI Risk Management Framework (AI RMF) to ensure they are looking at the full spectrum of risk, including security vulnerabilities and prompt injections.

The Danger of Class-Imbalanced Evaluation Tasks

One-sided evaluations lead to one-sided optimization. For example, if you only test your AI on how well it finds information, you might end up with an agent that “searches” for everything—even when the answer is right in front of it.

This is the problem of class-imbalanced evals. You must test both:

- Positive Cases: Does it act when it should?

- Negative Cases: Does it refrain from acting when it shouldn’t?

An effective evaluation suite includes “trap” questions designed to see if the AI will hallucinate or take an action it isn’t authorized to perform.
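Scoring the two classes separately keeps a one-sided agent from hiding behind a good overall average. In this sketch, `CaseResult` and `rates_by_class` are hypothetical names; each result records whether the agent should have acted and whether it did.

```python
from typing import Iterable, NamedTuple, Tuple


class CaseResult(NamedTuple):
    should_act: bool
    did_act: bool


def rates_by_class(results: Iterable[CaseResult]) -> Tuple[float, float]:
    """Return (act rate on positive cases, refrain rate on negative cases)."""
    results = list(results)
    positives = [r for r in results if r.should_act]
    negatives = [r for r in results if not r.should_act]
    if not positives or not negatives:
        raise ValueError("suite needs both positive and negative cases")
    act_rate = sum(r.did_act for r in positives) / len(positives)
    refrain_rate = sum(not r.did_act for r in negatives) / len(negatives)
    return act_rate, refrain_rate


if __name__ == "__main__":
    suite = [
        CaseResult(should_act=True, did_act=True),
        CaseResult(should_act=True, did_act=True),
        CaseResult(should_act=False, did_act=True),   # "trap" case the agent fell for
        CaseResult(should_act=False, did_act=False),
    ]
    print(rates_by_class(suite))
```

The function refuses to score a suite that is missing either class, which turns the imbalance itself into a visible error.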

Frequently Asked Questions about How to Evaluate AI

What is the difference between capability and regression evals?

Capability evals measure the “ceiling”—how smart is this model at its best? Regression evals measure the “floor”—did we break something that used to work? You need both. Capability evals help you choose a model; regression evals help you keep your job after an update.

How do you handle non-determinism in AI outputs?

You handle it through volume. Never judge a model based on one output. Run 10 or 20 trials per task, then use pass@k to estimate the chance of at least one success and pass^k to estimate the chance that every attempt succeeds. If the results vary wildly, your prompt is likely too ambiguous.
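When all you have is trial counts, both metrics can be estimated directly from them. The sketch below uses a commonly used combinatorial estimator: given `n` trials with `c` successes, it asks what happens if you draw `k` of those trials at random. The function names are illustrative, and the estimates are only as good as the number of trials behind them.

```python
from math import comb


def _check(n: int, c: int, k: int) -> None:
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")


def estimate_pass_at_k(n: int, c: int, k: int) -> float:
    """Estimated chance that at least one of k attempts succeeds."""
    _check(n, c, k)
    # comb(n - c, k) counts the draws of k trials that contain no success.
    return 1.0 - comb(n - c, k) / comb(n, k)


def estimate_pass_hat_k(n: int, c: int, k: int) -> float:
    """Estimated chance that all k attempts succeed (pass^k)."""
    _check(n, c, k)
    # comb(c, k) counts the draws of k trials that are all successes.
    return comb(c, k) / comb(n, k)


if __name__ == "__main__":
    n_trials, successes = 20, 15
    for k in (1, 3, 5):
        print(
            k,
            round(estimate_pass_at_k(n_trials, successes, k), 4),
            round(estimate_pass_hat_k(n_trials, successes, k), 4),
        )
```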

When should a team move from manual testing to automated evals?

The moment you find yourself saying, “I hope this change didn’t break anything.” Usually, this happens once you have more than 10-20 distinct tasks. Manual “vibe-testing” doesn’t scale, and it leads to “eval fatigue” where humans start missing obvious errors.

Conclusion

At Demandflow, we believe that clarity comes from structure. How to evaluate AI isn’t just a technical hurdle; it’s a strategic one. If you can’t measure your AI’s performance, you can’t leverage it for compounding growth.

Whether you are building a coding assistant or a multi-agent marketing workflow, the goal is to move from reactive “bug hunting” to proactive “growth architecture.” By building a rigorous evaluation suite, you turn AI from a black box into a reliable engine for your business.

For more insights on building structured growth systems and more information about AI tools, explore our framework library. We’re here to help you build the infrastructure that turns AI potential into measurable leverage.