Why AI Infrastructure Best Practices Define Competitive Advantage

AI infrastructure best practices are the foundation for scalable, cost-effective AI deployment. Organizations succeeding with AI today share common architectural principles:

- Design for elastic scalability from the start using containerized workloads and orchestration (Kubernetes)

- Adopt FinOps principles to optimize compute costs through spot instances, workload scheduling, and token efficiency

- Implement robust monitoring across model performance, data pipelines, and infrastructure health

- Standardize MLOps workflows for automated retraining, version control, and safe deployments

- Balance deployment models (cloud, on-premises, hybrid) based on data sovereignty and workload characteristics

- Prioritize data architecture with high-throughput storage, low-latency networking, and clean pipelines

- Enforce security and governance through RBAC, encryption, and compliance frameworks (GDPR, HIPAA)

The challenge isn’t AI adoption: 81% of executives are prioritizing it. The challenge is infrastructure. Some 44% of organizations struggle because their IT systems weren’t designed for how AI moves data. Traditional infrastructure operates on predictable, transactional patterns. AI workloads demand massive parallel processing, long-running data transfers, continuous model updates, and unpredictable resource spikes.

This creates a “digital divide”: AI needs to scale up dramatically for training, then scale back cost-effectively for production inference. Organizations that solve this tension through structured infrastructure architecture see 2.5x higher returns on AI initiatives compared to those treating AI as a tactical add-on.

The infrastructure decisions you make today determine whether AI becomes a competitive advantage or an operational liability. Poor choices compound: wasted compute costs, model performance degradation, security vulnerabilities, and teams bottlenecked by unreliable systems.

I’m Clayton Johnson, and I’ve spent years helping organizations architect scalable growth systems—including AI infrastructure best practices that align technical capabilities with strategic objectives. My focus is turning fragmented AI experiments into durable, measurable infrastructure that compounds value over time.

Core Components of Modern AI Infrastructure

Building a robust environment for artificial intelligence isn’t just about buying the fastest chips. It’s about assembling a “symphony” of specialized tools that work in harmony. Modern AI infrastructure is a highly integrated combination of hardware, software, and networking designed to handle massive data throughput and parallel processing.

Unlike traditional IT, which focuses on sequential tasks, AI infrastructure must support non-deterministic behavior and the heavy computational load of neural networks. According to Stanford AI Index Research, distributed computing advancements have reduced model training times by a staggering 80% over the last five years. This progress is driven by four core pillars: compute, storage, networking, and the software stack.

Compute Power: GPUs, TPUs, and CPUs in AI Infrastructure Best Practices

The “brain” of your AI setup depends on the workload. While CPUs (Central Processing Units) are great for general-purpose tasks and basic data shuffling, they struggle with the heavy math required for deep learning.

- GPUs (Graphics Processing Units): These are the gold standard for AI training. Because a GPU performs thousands of calculations in parallel, a high-end part such as the NVIDIA A100 can run deep learning workloads many times faster than a CPU. Speedups of up to 20x are often cited, though the actual figure depends on the model, batch size, and numeric precision.

- TPUs (Tensor Processing Units): These are ASICs (Application-Specific Integrated Circuits) designed by Google specifically for tensor operations. They are highly efficient for large-scale deep learning models.

- NPUs (Neural Processing Units): Often found in edge devices, these are optimized for low-power, high-speed inference.

As a best practice, we recommend matching the hardware to the stage of the model lifecycle. Use GPUs for the intensive training phase, and consider right-sized instances or specialized inference chips for production to keep costs under control.

Data Foundations: Storage and Networking for Scalability

If compute is the brain, data is the fuel. AI models are only as good as the data they can access. Traditional file systems often buckle under the pressure of millions of small files or massive multi-terabyte datasets used in Large Language Models (LLMs).

- Data Lakes and Object Storage: McKinsey Insights on Data Foundations suggests that scalable data storage is non-negotiable. Object storage is ideal for unstructured data (images, video, text), while data warehouses handle structured, analytics-ready info.

- High-Performance Networking: Distributed training requires GPUs to “talk” to each other at lightning speeds. Technologies like InfiniBand or high-bandwidth Ethernet are essential to prevent networking bottlenecks that leave expensive GPUs sitting idle while waiting for data.
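A rough estimate shows why interconnect bandwidth matters for distributed training. The sketch below assumes gradients are synchronized with a bandwidth-bound ring all-reduce, in which each GPU moves roughly 2(N-1)/N times the gradient payload per step. It ignores latency and any overlap with compute, and the function name and example numbers are illustrative assumptions rather than measurements.

```python
def ring_allreduce_seconds(param_count: int, bytes_per_param: int,
                           num_gpus: int, link_gbps: float) -> float:
    """Estimate per-step gradient sync time for a ring all-reduce.

    Assumes a bandwidth-bound transfer: each GPU moves
    2 * (N - 1) / N * payload bytes over a link of `link_gbps` gigabits/s.
    Latency and compute/communication overlap are ignored.
    """
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to synchronize
    payload_bytes = param_count * bytes_per_param
    traffic_bytes = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    link_bytes_per_s = link_gbps * 1e9 / 8  # gigabits/s -> bytes/s
    return traffic_bytes / link_bytes_per_s


if __name__ == "__main__":
    # Hypothetical 1B-parameter model, 2-byte (fp16) gradients, 8 GPUs.
    for gbps in (25, 100, 400):
        t = ring_allreduce_seconds(1_000_000_000, 2, 8, gbps)
        print(f"{gbps:>4} Gb/s link: ~{t:.2f} s per sync")
```

If the estimated sync time is comparable to the compute time of a training step, the GPUs spend a large share of each step waiting on the network, which is the idle-hardware problem described above.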

Strategic Deployment Models: Cloud, On-Premises, and Hybrid

Choosing where your AI lives is a strategic decision that impacts everything from latency to your monthly bill. There is no one-size-fits-all answer; the right model depends on your data residency requirements and budget.

| Feature | Public Cloud | On-Premises | Hybrid |
|---|---|---|---|
| Scaling | Instant / Elastic | Limited by Hardware | Flexible |
| Upfront Cost | Low (OpEx) | High (CapEx) | Mixed |
| Data Control | Shared Responsibility | Full Control | High (Sensitive data stays home) |
| Maintenance | Managed by Provider | Internal Team Needed | Shared |

Choosing the Right AI Infrastructure Best Practices for Your Maturity

We often use a Crawl-Walk-Run framework to help organizations find their footing. Pushing into a self-managed, on-premises cluster before you’ve even validated a use case is a recipe for wasted capital.

- Crawl (Fully Managed): Use services like Amazon Bedrock or Azure OpenAI. This is best for rapid prototyping and teams with limited DevOps expertise.

- Walk (Partially Managed): Leverage platforms like Amazon SageMaker or Google Vertex AI. You get more control over the environment without managing the underlying hardware.

- Run (Self-Managed/Hybrid): For AI-heavy enterprises with stable, predictable workloads. This might involve dedicated NVIDIA DGX systems or bare-metal clusters for maximum performance and cost-efficiency at scale. IBM AI Strategy and Infrastructure emphasizes that your choice should align with your technical readiness and FinOps maturity.

Overcoming Challenges in Building and Scaling AI

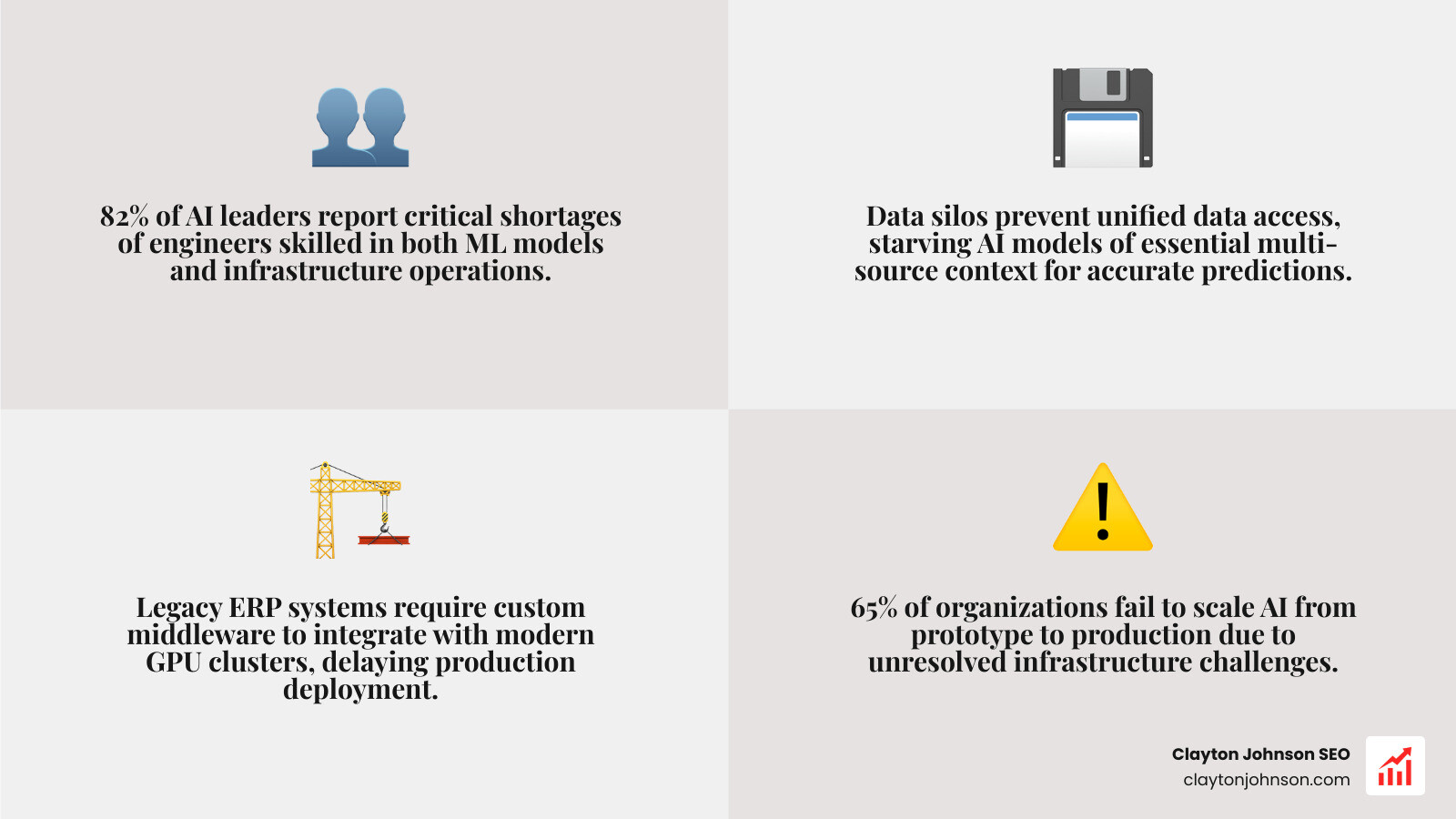

Scaling isn’t just a hardware problem; it’s a human and process problem. ClearML Research on AI Scalability highlights that many organizations hit a wall when moving from a “cool demo” to a production system.

- The Talent Gap: Finding engineers who understand both the “AI” (models) and the “Ops” (infrastructure) is difficult and expensive.

- Data Silos: AI requires a unified view of data. If your marketing data can’t talk to your sales data, your model lacks context.

- Legacy Integration: Bridging the gap between 20-year-old ERP systems and modern GPU clusters requires significant architectural “glue.”

Operationalizing AI Infrastructure Best Practices

Once the hardware is humming, the real work begins. Operationalizing AI means moving away from manual “artisan” model building toward a repeatable, industrial process. This is where MLOps (Machine Learning Operations) and GenAIOps come into play.

FinOps and Cost Optimization for AI Workloads

AI is notoriously expensive. In fact, AI inference workloads (the model actually answering queries) can account for 60% of an enterprise’s cloud computing costs. To stay profitable, you must treat cost as a first-class engineering metric.

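- Spot Instances: Use “spare” cloud capacity for non-critical training jobs to save up to 90% on compute costs. Because the provider can reclaim spot capacity at short notice, pair it with regular checkpointing so an interrupted job can resume.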

- Token Efficiency: In GenAI, you pay by the token. Gartner Insights on Cloud Cost Management suggests that better prompt engineering and using smaller, specialized models for simple tasks can drastically reduce spend.

- Auto-Shutdown: If a GPU isn’t processing a job, it should be turned off. Automated budget controls and idle-resource detection are essential.
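Two of the items above lend themselves to quick checks in code. The sketch below estimates monthly token spend and flags a GPU as idle once its utilization has stayed low for a full window. The prices, thresholds, and names (`monthly_token_cost`, `GpuSample`, `should_shut_down`) are hypothetical, and the utilization samples are assumed to come from whatever metrics source you already run.

```python
from dataclasses import dataclass
from typing import Sequence


def monthly_token_cost(requests_per_day: int, tokens_per_request: int,
                       price_per_million_tokens: float, days: int = 30) -> float:
    """Rough monthly spend; the price is a placeholder, not a real quote."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1_000_000 * price_per_million_tokens


@dataclass
class GpuSample:
    minute: int          # minutes since monitoring started
    utilization: float   # GPU utilization in percent, 0-100


def should_shut_down(samples: Sequence[GpuSample],
                     idle_threshold_pct: float = 5.0,
                     idle_minutes: int = 30) -> bool:
    """True when samples span the full window and all recent ones are idle."""
    if not samples:
        return False
    latest = max(s.minute for s in samples)
    earliest = min(s.minute for s in samples)
    # Require enough history so a freshly started GPU is not shut down.
    if latest - earliest < idle_minutes - 1:
        return False
    window = [s for s in samples if s.minute > latest - idle_minutes]
    return all(s.utilization < idle_threshold_pct for s in window)


if __name__ == "__main__":
    before = monthly_token_cost(10_000, 1_500, 2.0)
    after = monthly_token_cost(10_000, 900, 2.0)  # shorter prompts
    print(f"monthly spend: {before:.2f} -> {after:.2f}")
    idle = [GpuSample(m, 1.0) for m in range(30)]
    print("shut down idle GPU:", should_shut_down(idle))
```

Requiring a full window of history before shutting down is a deliberate choice: it avoids killing a node that has only just started and has not yet picked up work.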

Security, Governance, and Compliance in AI Systems

AI models often handle sensitive customer data, making them a prime target for attackers. Furthermore, global regulations like GDPR and industry-specific rules like HIPAA require strict data handling.

Grant Thornton Research on AI Automation points out that while AI can cut operational expenses by 30-50%, those savings disappear if you face a massive compliance fine.

Best practices include:

- RBAC (Role-Based Access Control): Ensure only authorized users can touch the training data or deploy a model.

- Encryption: Protect data at rest, in transit, and even “in use” during computation where possible.

- Model Auditability: Keep a detailed history of how a model was trained, what data it used, and which hyperparameters were selected. This is vital for “explainable AI” and regulatory scrutiny.
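The RBAC and auditability items can be sketched together. The example below uses a deny-by-default permission lookup and a training record whose ID is a hash of its contents, so any change to the data fingerprint or hyperparameters produces a different ID. The role names, permissions, and field names are hypothetical. A real deployment would typically source roles from an identity provider and store records in an append-only log.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS = {
    "data-scientist": {"read_training_data", "train_model"},
    "ml-engineer": {"read_training_data", "train_model", "deploy_model"},
    "analyst": set(),
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are rejected."""
    return action in ROLE_PERMISSIONS.get(role, set())


@dataclass(frozen=True)
class TrainingRecord:
    model_name: str
    model_version: str
    dataset_sha256: str      # fingerprint of the exact training data
    hyperparameters: dict
    trained_by: str


def fingerprint_dataset(data: bytes) -> str:
    """SHA-256 hex digest of the raw dataset bytes."""
    return hashlib.sha256(data).hexdigest()


def record_id(record: TrainingRecord) -> str:
    """Hash of the canonical JSON form; changes if any field changes."""
    canonical = json.dumps(asdict(record), sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    rec = TrainingRecord(
        model_name="fraud-detector",
        model_version="1.4.0",
        dataset_sha256=fingerprint_dataset(b"example,rows\n1,2\n"),
        hyperparameters={"learning_rate": 0.001, "epochs": 10},
        trained_by="ml-engineer",
    )
    print("may deploy:", is_allowed(rec.trained_by, "deploy_model"))
    print("audit id:", record_id(rec))
```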

Designing for Resilience and Performance

A model that takes ten seconds to answer a customer query is a model that won’t be used. High-performance AI requires designing for both speed and reliability.

According to the Flexential Report on the State of AI Infrastructure, 90% of enterprises are now deploying AI-specific infrastructure to meet these demands. To measure your success, you should track specific KPIs:

- Inference Latency: How long does it take for a user to get a response?

- Model Accuracy/Drift: Is the model becoming less accurate over time as real-world data changes?

- GPU Utilization: Are you getting your money’s worth out of your expensive hardware?

- Training ROI: Does the performance boost from a new training run justify the compute cost?
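Three of these KPIs reduce to simple arithmetic once the raw numbers are collected. The sketch below computes a nearest-rank latency percentile, an accuracy drop against a baseline, and GPU utilization as busy hours over provisioned hours. The function names and the choice of nearest-rank percentile are assumptions made for illustration. Production systems would usually take these figures from their monitoring stack.

```python
import math
from statistics import mean
from typing import Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile (0 < pct <= 100) of a non-empty sequence."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def accuracy_drop(baseline: Sequence[float], recent: Sequence[float]) -> float:
    """Positive result means recent accuracy is lower than the baseline."""
    return mean(baseline) - mean(recent)


def gpu_utilization(busy_gpu_hours: float, provisioned_gpu_hours: float) -> float:
    """Fraction of paid-for GPU time that did useful work."""
    if provisioned_gpu_hours <= 0:
        raise ValueError("provisioned_gpu_hours must be positive")
    return busy_gpu_hours / provisioned_gpu_hours


if __name__ == "__main__":
    latencies_ms = [120, 135, 150, 180, 210, 240, 300, 450, 900, 2400]
    print("p95 latency (ms):", percentile(latencies_ms, 95))
    print("accuracy drop:", round(accuracy_drop([0.92, 0.94], [0.88, 0.90]), 4))
    print("GPU utilization:", gpu_utilization(30, 40))
```

Tracking a high percentile rather than the average matters for latency, because a small number of very slow responses can dominate the user experience while barely moving the mean.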

Steps to Assess, Design, and Implement AI Systems

We recommend a structured approach to building your “growth architecture”:

- Assess Use Cases: Don’t build for the sake of building. Identify high-value projects (e.g., fraud detection, supply chain optimization).

- Design the Architecture: Decide on your deployment model (Cloud/On-Prem/Hybrid) and select your tech stack.

- Implement Security: Build in RBAC and encryption from day one.

- Automate and Integrate: Use CI/CD pipelines for your models so that updates are seamless and safe.

- Monitor and Iterate: Use tools like Prometheus and Grafana to watch your systems and refine them based on production data.
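The "Automate and Integrate" step often includes a promotion gate: a check in the pipeline that blocks a new model unless it meets agreed thresholds. A minimal sketch follows, assuming the evaluation stage produces an accuracy figure and a p95 latency. The `EvalReport` fields and the default thresholds are hypothetical and would be set per use case.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class EvalReport:
    accuracy: float         # fraction correct on a held-out set, 0-1
    p95_latency_ms: float   # 95th percentile response time


def can_promote(candidate: EvalReport, production: EvalReport,
                max_accuracy_regression: float = 0.01,
                max_latency_ms: float = 500.0) -> Tuple[bool, List[str]]:
    """Return (ok, reasons); reasons lists every failed check."""
    reasons: List[str] = []
    if candidate.accuracy < production.accuracy - max_accuracy_regression:
        reasons.append("accuracy regressed beyond tolerance")
    if candidate.p95_latency_ms > max_latency_ms:
        reasons.append("p95 latency exceeds budget")
    return (not reasons, reasons)


if __name__ == "__main__":
    current = EvalReport(accuracy=0.90, p95_latency_ms=350.0)
    new = EvalReport(accuracy=0.91, p95_latency_ms=300.0)
    ok, why = can_promote(new, current)
    print("promote:", ok, why)
```

Returning the reasons alongside the decision keeps the gate auditable: a failed deployment records why it was blocked, not just that it was.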

Real-World Implementations and Future Trends

We are seeing AI infrastructure best practices transform industries in real time:

- Retail: Using GPU clusters and real-time image recognition to detect shelf misplacements, reducing restocking delays by 30%.

- Finance: JPMorgan Chase and other leaders use AI to analyze thousands of variables per transaction, slashing fraud losses by 40%.

- Autonomous Vehicles: Companies are ingesting millions of miles of driving data daily, requiring massive distributed training environments and edge-based inference.

Looking ahead, we expect deeper integration with quantum computing for complex optimization and a broad shift toward Edge AI, where processing happens on the device rather than in the cloud, cutting network round-trip latency.

Frequently Asked Questions about AI Infrastructure

What is the difference between AI infrastructure and traditional IT?

Traditional IT is designed for predictable, sequential workloads like running a database or a website. AI infrastructure is built for massive parallel processing, non-deterministic outputs, and high-volume data movement. It uses specialized hardware like GPUs and TPUs and requires MLOps for continuous model management.

How can organizations control the rising costs of AI inference?

Inference costs can be managed by using FinOps principles: right-sizing models (using a “mini” model when a “large” one isn’t needed), optimizing prompt length, implementing caching for common queries, and using specialized inference hardware that is more energy-efficient than general-purpose GPUs.

Why is private AI becoming essential for modern enterprises?

Private AI allows companies to keep their sensitive data within their own controlled environments (on-premises or private cloud). This is critical for data sovereignty, regulatory compliance (GDPR/HIPAA), and protecting intellectual property while still gaining the benefits of advanced machine learning.

Conclusion

At the end of the day, AI success isn’t about having the most “tactics”—it’s about having a structured growth architecture. At Demandflow.ai, we believe that clarity leads to structure, which leads to leverage, and ultimately to compounding growth.

Your AI infrastructure is the physical manifestation of that leverage. By following these ai infrastructure best practices, you ensure that your organization isn’t just experimenting with AI, but building a durable engine for the future.

If you’re ready to move beyond the “digital divide” and build a resilient, scalable AI foundation, we can help you bridge the gap between technical complexity and business results.