Why AI Prompt Engineering Matters for Every Business

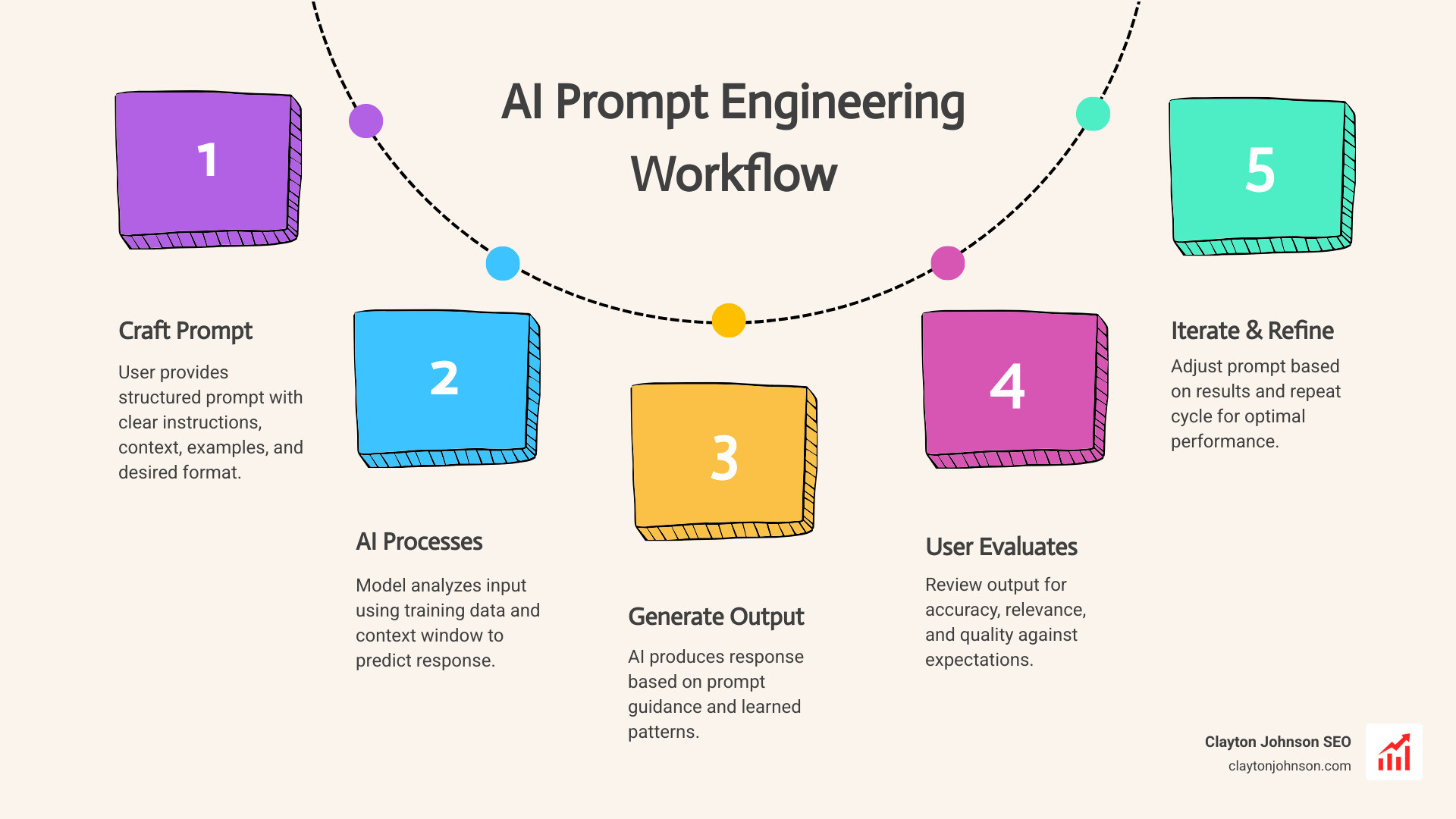

AI Prompt Engineering is the process of designing and optimizing instructions to guide large language models like ChatGPT, Claude, and Gemini toward generating accurate, useful, and consistent responses. At its core, it’s about crafting clear prompts—the input text you provide—to help AI understand your intent and deliver the output you need.

Key elements of effective prompt engineering:

- Clarity – Use specific, unambiguous language

- Context – Provide relevant background information

- Structure – Organize instructions logically

- Examples – Show the AI what you want (few-shot prompting)

- Role definition – Assign the AI a persona or expertise level

- Iteration – Refine prompts based on results

Generative AI has become one of the most transformative tools for modern businesses. Models like GPT-4, Claude, and Gemini can write code, analyze data, generate marketing content, and automate workflows. But getting consistent, high-quality results requires more than just typing questions into a chat box.

Think of prompt engineering as providing a roadmap. The AI is a prediction engine: it generates the next most likely token (roughly a word or word fragment) based on your input and its training data. Without clear instructions, context, and structure, you’ll get generic or off-target responses. With well-engineered prompts, you get far more out of these tools.

The stakes are real. Learn Prompting’s guide has taught over 3 million people and is cited by Google, Microsoft, and Wikipedia. A 2024 survey identified more than 50 distinct text-based prompting techniques. Research shows that reordering examples in a prompt can shift accuracy by over 40 percent. Small changes in how you phrase instructions can dramatically impact results.

This matters for everyone—not just developers. Marketing teams use prompts to generate campaign ideas. Sales teams automate outreach. Operations leaders build workflows. Founders use AI to draft strategy documents. The better you prompt, the more productive and valuable AI becomes in your workflow.

But prompt engineering isn’t about “tricking” the AI. It’s about clear communication. You’re steering the model toward better output by structuring your instructions, providing relevant context, and iterating based on feedback. As models improve, the focus shifts from clever phrasing to problem formulation: defining what you actually need before you ask for it.

I’m Clayton Johnson, and I’ve spent years building SEO and growth systems that rely on structured content, clear intent modeling, and AI-augmented workflows. AI Prompt Engineering has become a core part of how I design scalable marketing systems and operationalize strategy for clients.

Core Principles of Effective AI Prompt Engineering

When we dive into AI Prompt Engineering, we’re essentially learning the “art and science” of communication with machines. According to the Vertex AI Prompt engineering guide, the goal is to provide the model with enough context and instruction that it understands your intent perfectly.

To do this effectively, we rely on a few foundational pillars:

Clarity and Precision

Vague prompts lead to vague results. If you ask an AI to “write a blog post about SEO,” you might get 500 words of generic fluff. If you ask it to “write a 1,200-word deep dive into technical SEO for e-commerce sites, focusing on canonical tags and site speed,” you’re much closer to a usable draft.

Context and Instructions

AI models don’t live in your head. They don’t know your brand voice, your target audience, or the specific problem you’re trying to solve unless you tell them. Effective prompts often include a “Reference” or “Context” section, set off by delimiters such as ### or """ so the model can tell the material it should process apart from the instructions it should follow.
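As a minimal sketch of the delimiter idea, the helper below keeps instructions and reference text in separate, clearly marked regions. The function name `build_prompt` and the sample strings are hypothetical and exist only for illustration.

```python
def build_prompt(instructions: str, context: str, delimiter: str = "###") -> str:
    """Wrap reference material in delimiters so it stays separate from the instructions."""
    if delimiter in context:
        # A delimiter that also appears in the context would blur the boundary.
        raise ValueError("context already contains the delimiter; pick another one")
    return (
        f"{instructions.strip()}\n\n"
        f"Use only the reference text between the {delimiter} markers.\n"
        f"{delimiter}\n{context.strip()}\n{delimiter}"
    )


prompt = build_prompt(
    "Summarize the reference text in two sentences for a marketing manager.",
    "Our Q3 newsletter open rate rose after we shortened subject lines.",
)
print(prompt)
```

If your reference text might itself contain `###`, pass a different `delimiter` rather than stripping characters from the source material.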

Persona and Role Definition

One of the most powerful “hacks” in AI Prompt Engineering is assigning a persona. Telling the AI, “You are a senior SEO strategist with 15 years of experience,” changes the tone and depth of the response compared to, “You are a helpful assistant.”
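One way to picture persona assignment in code is as a separate “system” message placed ahead of the task. The sketch below assumes the role/content message layout that many chat-style APIs use; the exact keys vary by provider, so treat them as an assumption and check your provider’s documentation. The helper name `with_persona` is hypothetical.

```python
from __future__ import annotations


def with_persona(persona: str, task: str) -> list[dict[str, str]]:
    """Pair a persona (system message) with the actual task (user message)."""
    return [
        {"role": "system", "content": persona},  # assumed message shape
        {"role": "user", "content": task},
    ]


task = "Review this product page outline and list the three biggest SEO gaps."

generic = with_persona("You are a helpful assistant.", task)
expert = with_persona(
    "You are a senior SEO strategist with 15 years of experience.", task
)

# Same task, different persona: only the system message changes.
print(expert[0]["content"])
```

Keeping the persona separate from the task makes it easy to run the same request under several personas and compare the responses.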

Formatting the Output

Don’t just ask for information; ask for it in a specific format. Whether you need a table, a JSON object, or bullet points, specifying the “Type of Output” saves you hours of manual reformatting.
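When you request JSON, it helps to check the reply before using it. The following is a rough sketch using Python’s standard `json` module. The key names and the `sample_reply` string are hand-written stand-ins for illustration, not real model output.

```python
import json

# Hypothetical format instruction you might append to a prompt.
FORMAT_INSTRUCTION = (
    'Respond with a single JSON object with the keys "title" (string) '
    'and "keywords" (list of strings). Do not add any text outside the JSON.'
)

REQUIRED_KEYS = {"title", "keywords"}


def parse_reply(reply: str) -> dict:
    """Parse a reply and confirm it has the structure we asked for."""
    data = json.loads(reply)  # raises json.JSONDecodeError on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


# Hand-written stand-in for a model reply.
sample_reply = '{"title": "Technical SEO basics", "keywords": ["canonical tags", "site speed"]}'
result = parse_reply(sample_reply)
print(result["keywords"])
```

If parsing fails, a common next step is to send the error back to the model and ask it to correct the format, which fits the iteration principle described earlier.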

| Vague Prompt | Precise Prompt |
|---|---|
| “Write a social media post about AI.” | “Write a 3-sentence LinkedIn post for marketing managers explaining the benefits of AI in workflow automation. Use a professional yet encouraging tone.” |
| “Help me summarize this article.” | “Summarize the following article in 5 bullet points. Focus specifically on the financial statistics and the CEO’s quotes.” |
| “Give me some code for a website.” | “Generate a Python script using the Flask framework to create a simple contact form with fields for ‘Name’, ‘Email’, and ‘Message’.” |

Advanced Prompting Techniques and Frameworks

Once you’ve mastered the basics, it’s time to look at structured frameworks that ensure consistency. In our work at Clayton Johnson SEO, we often use these methods to build AI growth strategies that scale.

The C.R.E.A.T.E. Framework

Developed by AI consultant Dave Birss, this framework provides a reliable checklist for every prompt:

- Character: Define the role (e.g., “You are an expert copywriter”).

- Request: State the task clearly.

- Examples: Provide a few samples of what you want.

- Additions: Mention specific style or point-of-view requirements.

- Type of Output: Specify the format (Table, List, Code).

- Extras: Include any additional reference text or data.
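The checklist above can be sketched as a small data structure so that no element is forgotten. This is one possible encoding, not part of the framework itself. The class name `CreatePrompt`, the section labels, and the sample values are all assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CreatePrompt:
    """One field per letter of C.R.E.A.T.E.; only the first two are required here."""

    character: str
    request: str
    examples: list[str] = field(default_factory=list)
    additions: str = ""
    type_of_output: str = ""
    extras: str = ""

    def render(self) -> str:
        """Join the filled-in sections into one prompt, skipping empty ones."""
        parts = [self.character, self.request]
        if self.examples:
            parts.append("Examples:\n" + "\n".join(f"- {e}" for e in self.examples))
        if self.additions:
            parts.append(self.additions)
        if self.type_of_output:
            parts.append(f"Output format: {self.type_of_output}")
        if self.extras:
            parts.append(f"Reference material:\n{self.extras}")
        return "\n\n".join(parts)


draft = CreatePrompt(
    character="You are an expert copywriter.",
    request="Write three subject lines for a product launch email.",
    examples=["Meet the tool that writes your briefs"],
    additions="Write in second person and avoid hype words.",
    type_of_output="Numbered list",
)
print(draft.render())
```

Because empty sections are skipped, the same class works for quick prompts and for fully specified ones.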

The PARSE Acronym

Another popular method found in community discussions is PARSE: Persona, Action, Requirements, Situation, and Examples. By filling in these five buckets, you create a comprehensive “blueprint” for the AI to follow.

Mastering AI Prompt Engineering with Chain-of-Thought

Sometimes, an AI fails because the task is too complex to solve in one go. Scientific research on Chain-of-Thought shows that asking the model to “think step-by-step” can significantly improve its performance on multi-step reasoning tasks.

By prompting the AI to break down a problem into intermediate steps, you allow it to “show its work.” This is particularly effective for:

- Arithmetic Reasoning: Solving multi-step math problems.

- Logic Puzzles: Navigating complex “if-then” scenarios.

- Strategic Planning: Breaking a large goal into actionable tasks.

According to research on zero-shot reasoners, even adding the simple phrase “Let’s think step by step” can elicit better reasoning from the model without any prior examples.
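In code, the zero-shot version of this technique amounts to appending the trigger phrase to the question. The sketch below is a minimal illustration. The `Q:`/`A:` layout, the function name, and the sample question are assumptions chosen for the example.

```python
COT_TRIGGER = "Let's think step by step."


def add_step_by_step(question: str) -> str:
    """Append the zero-shot chain-of-thought trigger to a question."""
    return f"Q: {question.strip()}\nA: {COT_TRIGGER}"


cot_prompt = add_step_by_step(
    "A team publishes 3 posts a week but skips 2 of the next 8 weeks. "
    "How many posts do they publish in that period?"
)
print(cot_prompt)
```

Ending the prompt with the trigger nudges the model to write out intermediate steps before committing to a final answer.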

The Rhetorical Approach to AI Prompt Engineering

Developed by academic Sébastien Bauer, this approach treats the prompt like a classical speech. You define the Rhetorical Situation:

- Ethos: The credibility or persona of the speaker (the AI).

- Pathos: The emotional appeal or tone.

- Logos: The logical argument and data provided.

- Audience: Who is the output for?

This method is fantastic for high-stakes communication, such as drafting investor updates or sensitive client emails.

Overcoming AI Limitations and Hallucinations

As much as we love AI, it isn’t perfect. It can be biased, it can make things up (hallucinations), and it can sometimes be confidently wrong.

The Risk of Hallucination

AI models are prediction engines, not databases. They don’t “know” facts; they predict the most likely next word. This leads to AI hallucinations, where the model provides coherent but entirely fabricated information. We saw this in the news when an outlet’s AI-generated stories were plagued with factual errors.

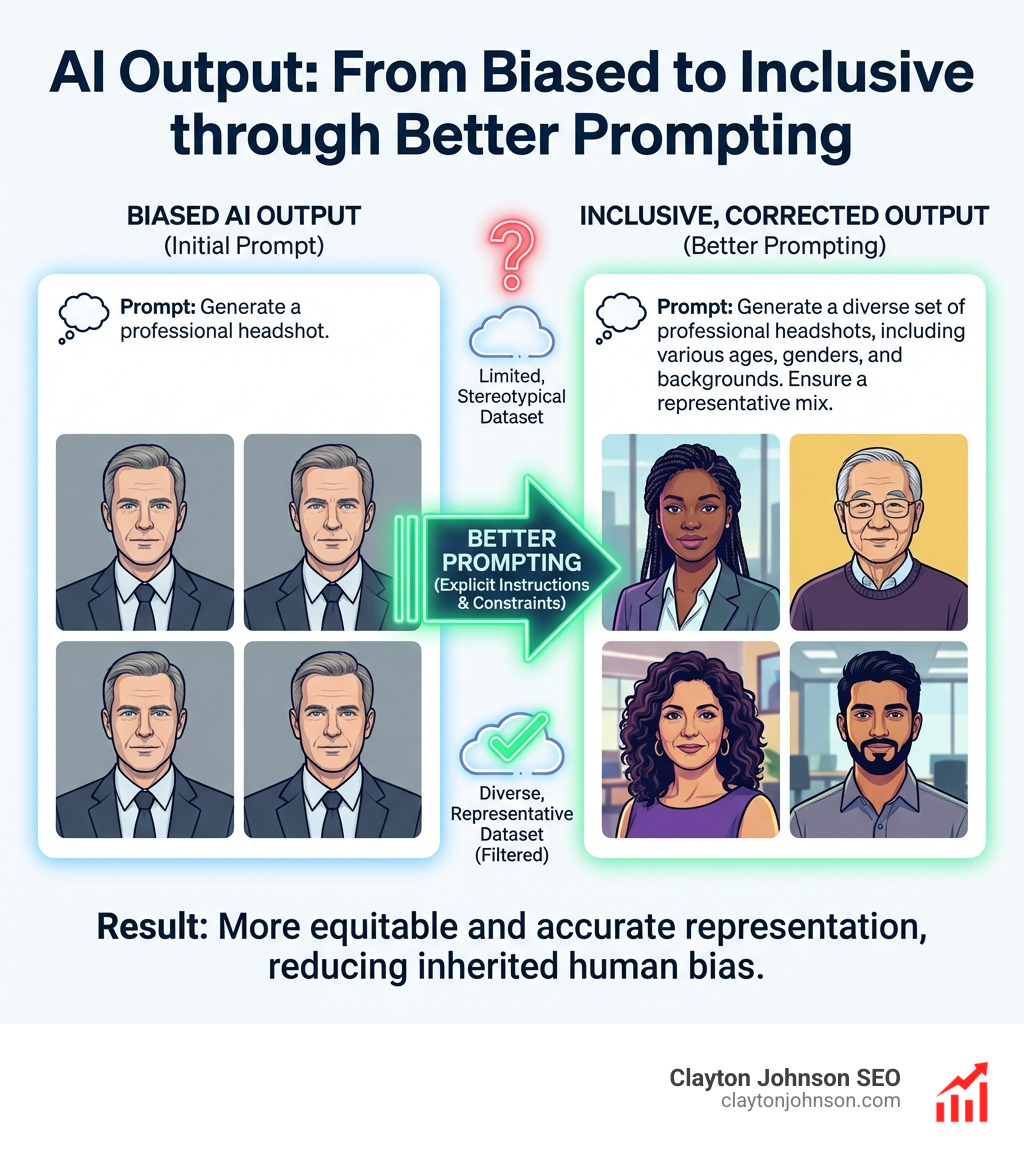

Bias and Inclusivity

AI models are trained on human data, which means they inherit human biases. For example, image generators have been known to lighten skin tones or change eye colors when asked to make a headshot “more professional.” Anyone who relies on AI tools must also act as the “human in the loop” to catch and correct these biases.

Mitigation Strategies

To reduce these risks, we recommend:

- Retrieval-Augmented Generation (RAG): Providing the AI with the specific documents it should use to answer, rather than relying on its internal training.

- Critical Review: Always fact-check names, dates, and statistics.

- Negative Prompts: Explicitly tell the AI what not to do (e.g., “Do not use jargon,” “Do not mention competitors”).
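To make the first and last strategies concrete, here is a toy sketch that picks relevant documents and adds explicit “do not” rules to the prompt. Production RAG systems typically retrieve with embeddings and a vector index. The word-overlap scoring below is a deliberately simple stand-in, and all function names and sample documents are hypothetical.

```python
from __future__ import annotations

import re


def tokenize(text: str) -> set[str]:
    """Lowercase a text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k documents sharing at least one word with the question."""
    q_words = tokenize(question)
    scored = [(len(q_words & tokenize(doc)), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked if score > 0][:top_k]


def build_grounded_prompt(question: str, documents: list[str], avoid: list[str]) -> str:
    """Combine retrieved sources, negative rules, and the question into one prompt."""
    found = retrieve(question, documents)
    sources = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(found, 1))
    rules = "\n".join(f"- Do not {rule}" for rule in avoid)
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nRules:\n{rules}\n\nQuestion: {question}"
    )


docs = [
    "Canonical tags tell search engines which URL is the preferred version of a page.",
    "Our office is closed on public holidays.",
    "Site speed affects how quickly product pages load on mobile devices.",
]
grounded = build_grounded_prompt(
    "What do canonical tags do?", docs, ["use jargon", "mention competitors"]
)
print(grounded)
```

The instruction to admit when the sources lack an answer gives the model a sanctioned alternative to inventing one. Critical review of the output is still required.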

Using Context Engineering to Improve AI Prompt Engineering

A major part of managing AI is understanding the Context Window. This is the “memory” the AI has during a conversation.

- Tokens: Models don’t read words; they read tokens (chunks of characters).

- Memory Limits: If a conversation gets too long, the AI will “forget” the beginning.

- Context Engineering: This is the practice of managing that window. It involves using Claude skill packs or RAG systems to ensure the most relevant information is always “visible” to the model.
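A simple way to see these three ideas together is a helper that keeps only the most recent messages that fit a token budget. This is a rough sketch. The four-characters-per-token estimate is a loose rule of thumb for English text rather than a real tokenizer, and the function names are hypothetical.

```python
from __future__ import annotations


def estimate_tokens(text: str) -> int:
    """Rough assumption: about 4 characters per token for English text."""
    return max(1, len(text) // 4)


def trim_history(messages: list[str], budget: int) -> list[str]:
    """Keep the newest messages whose estimated tokens fit within the budget."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget:
            break  # everything older is dropped, like a model "forgetting"
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = ["a" * 40, "b" * 40, "c" * 40]  # about 10 estimated tokens each
trimmed = trim_history(history, budget=25)
print(len(trimmed))  # 2: only the two most recent messages fit
```

For accurate counts, use the tokenizer that matches your model, since token boundaries differ between model families.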

For large enterprises, we use GraphRAG, which relies on knowledge graphs to connect disparate pieces of information, helping the AI synthesize insights across thousands of documents without losing context.

Essential Skills for the Modern Prompt Engineer

You don’t need a computer science degree to excel at AI Prompt Engineering, but you do need a specific set of “leverage skills.”

- Critical Thinking: The ability to look at an AI output and say, “This is 80% there, but it missed the core problem.”

- Writing and Editing: Since we communicate with LLMs through language, being a clear writer is a massive advantage.

- Domain Expertise: To prompt an AI to write code, you need to understand coding principles. To prompt it for image generation, you need to know about lighting, texture, and art history.

- Technical Communication: Being able to translate a business goal into a technical instruction.

- Python and Data Structures: While not always required, knowing Python helps you automate prompts via APIs and handle structured data like JSON.

As we discuss in the real truth about coding AI growth, the demand for these “liberal arts” skills—writing, editing, and logic—has actually increased because of AI. The AI handles the “doing,” but humans must handle the “directing.”

Frequently Asked Questions about Prompting

What is the difference between zero-shot and few-shot prompting?

Zero-shot prompting is when you ask the AI to perform a task without giving it any examples. You rely entirely on its pre-existing knowledge. Few-shot prompting involves providing a few examples of the input and desired output within the prompt. This “shows” the AI the pattern you want it to follow, which research shows can drastically improve accuracy.
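The difference is easy to see in code. The sketch below builds a few-shot prompt from input/output pairs. Calling it with an empty example list gives the zero-shot version. The `Input:`/`Output:` labels, the function name, and the sample reviews are illustrative assumptions.

```python
from __future__ import annotations


def few_shot_prompt(
    instruction: str, examples: list[tuple[str, str]], new_input: str
) -> str:
    """Show the model worked examples, then leave the final output blank."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    lines += [f"Input: {new_input}", "Output:"]
    return "\n".join(lines)


shots = [
    ("Loved it, setup took two minutes.", "positive"),
    ("Too slow and it crashed twice.", "negative"),
]
few_shot = few_shot_prompt(
    "Classify the sentiment of each review.", shots, "Works fine for the price."
)
zero_shot = few_shot_prompt(
    "Classify the sentiment of each review.", [], "Works fine for the price."
)
print(few_shot)
```

Since the order of examples can affect results, it is worth testing a few orderings when accuracy matters.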

How does the context window affect long conversations?

The context window is the limit of how many tokens the AI can process at once. In long conversations, once you exceed this limit, the AI starts “dropping” the earliest parts of the chat. To avoid this, you should start new chats for new topics or use “context engineering” techniques like summarizing previous parts of the conversation to keep the AI on track.
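The summarizing approach can be sketched as replacing older turns with a single summary line while keeping recent turns intact. In practice the summary would usually come from another model call. The `placeholder_summarize` function below is only a stand-in, and all names here are hypothetical.

```python
from __future__ import annotations

from typing import Callable


def compact_history(
    turns: list[str], keep_recent: int, summarize: Callable[[list[str]], str]
) -> list[str]:
    """Replace all but the most recent turns with one summary entry."""
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")
    if len(turns) <= keep_recent:
        return list(turns)  # nothing to compact yet
    older, recent = turns[:-keep_recent], turns[-keep_recent:]
    return [f"Summary of earlier conversation: {summarize(older)}"] + recent


def placeholder_summarize(turns: list[str]) -> str:
    """Stand-in summarizer; a real one would ask a model to condense the turns."""
    return f"{len(turns)} earlier turns omitted"


compacted = compact_history(["t1", "t2", "t3", "t4"], 2, placeholder_summarize)
print(compacted)
```

Passing the summarizer in as a function keeps the compaction logic independent of whichever model or service produces the summary.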

Will prompt engineering become obsolete?

There is a debate about this. Some experts believe that as AI becomes smarter, it will “intuit” our intentions better, making “clever” prompts less necessary. However, the core skill of Problem Formulation (knowing how to define a task, set boundaries, and evaluate quality) will remain highly valuable. We don’t just need prompt engineers; we need “AI architects.”

Conclusion

At Clayton Johnson SEO, we believe that AI Prompt Engineering is a force multiplier for any business. It’s not about finding a magic “cheat code”; it’s about learning to communicate clearly, logically, and iteratively with the most powerful tools ever created.

By mastering the principles of clarity, context, and structure, you can turn a generic chatbot into a specialized assistant that drafts strategy, writes code, and optimizes your growth. Whether you are building SEO content marketing systems or streamlining internal operations, the quality of your input will always determine the value of your output.

The future belongs to those who can bridge the gap between human intent and machine execution. Start small, iterate often, and make the AI work for you.