Why Prompt Engineering Is the Skill Every AI User Needs

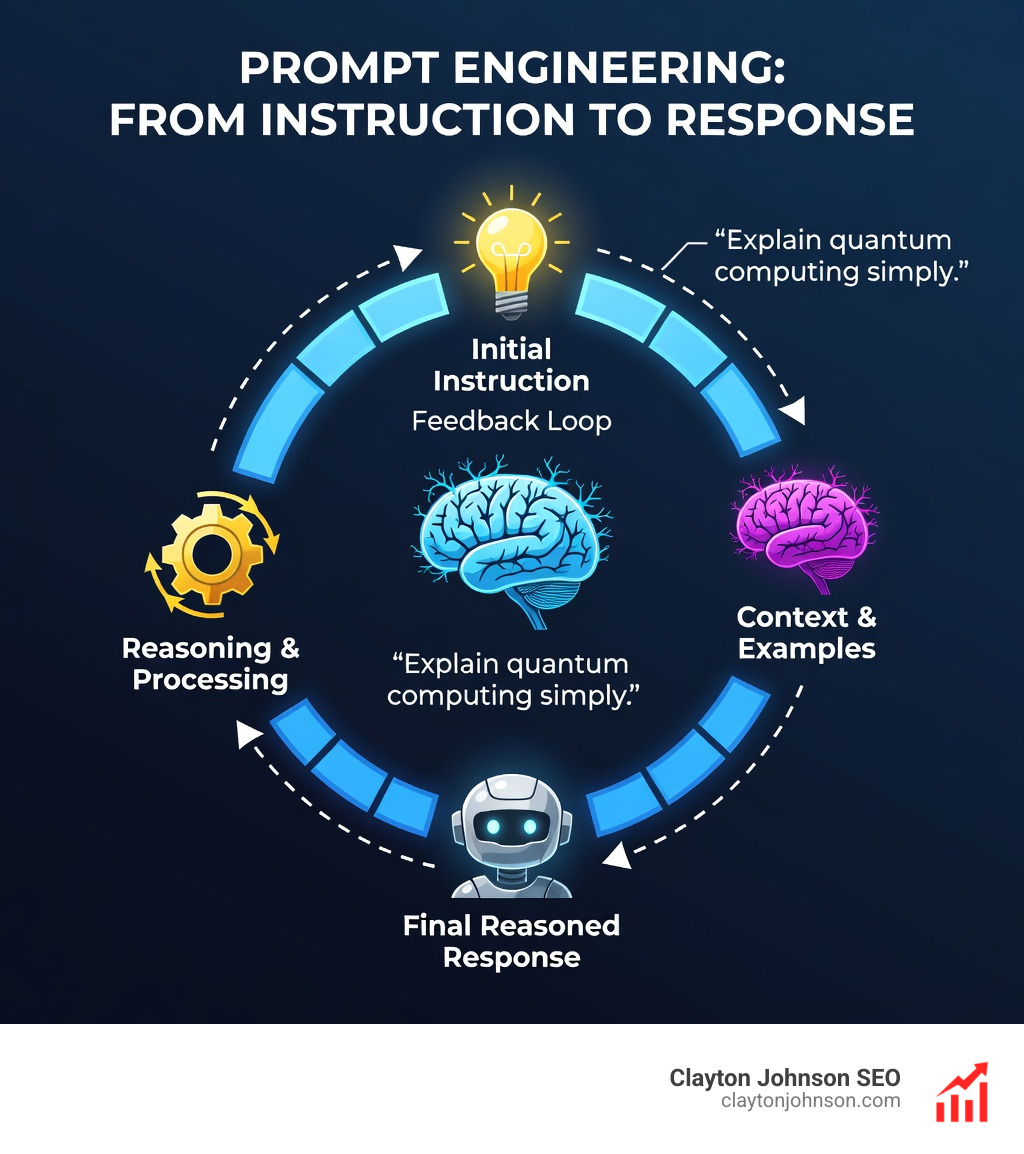

Prompt engineering is the practice of crafting and optimizing inputs to get better, more reliable outputs from AI language models.

Here is what you need to know at a glance:

| Concept | What It Means |

|---|---|

| What it is | Designing instructions that guide AI toward the response you actually want |

| Who uses it | Developers, marketers, researchers, founders, and operators |

| Core techniques | Zero-shot, few-shot, chain-of-thought, role prompting |

| Why it matters | Small prompt changes can shift AI accuracy by 40% or more |

| End goal | Consistent, high-quality AI output without guesswork |

You have probably been there. You type something into ChatGPT. The answer comes back vague, off-topic, or just… wrong. So you type it again, louder somehow, with more words. Still not what you needed.

That is not an AI problem. That is a prompting problem.

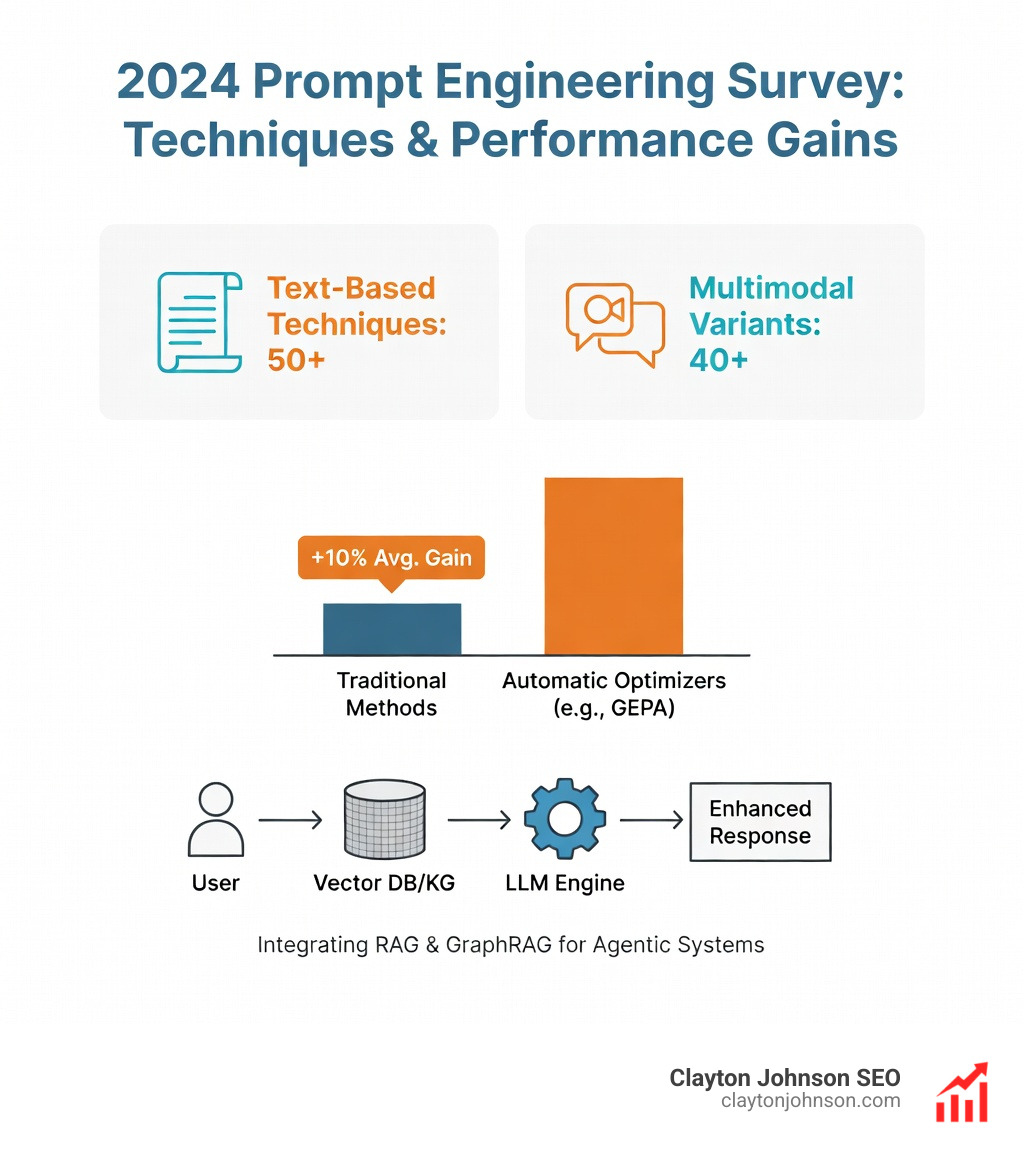

The way you talk to an AI model shapes everything it gives back. A 2024 survey identified over 50 distinct prompting techniques used to steer language models. Researchers found that simply reordering examples inside a prompt produced accuracy shifts of more than 40%. Formatting changes alone created swings of up to 76 accuracy points in some tests.

This is not a trivial skill. It is a leverage skill.

“Prompt engineering is a relatively new discipline for developing and optimizing prompts to efficiently use language models for a wide variety of applications and research.” — Prompt Engineering Guide, DAIR.AI

Think of a prompt as a blueprint. A vague blueprint produces a shaky building. A precise one produces exactly what you designed. The AI is not the variable. Your instructions are.

I’m Clayton Johnson, an SEO strategist and growth operator who works at the intersection of AI-assisted workflows, structured content systems, and scalable marketing architecture. Prompt engineering is central to how I build AI-augmented growth systems that convert intelligence into measurable business outcomes.

The Fundamentals of Prompt Engineering

At its heart, Prompt Engineering is the art and science of guiding an AI model—specifically a Large Language Model (LLM)—to produce the exact result you need. We often hear it described as “the new coding,” but instead of Python or C++, we use natural language.

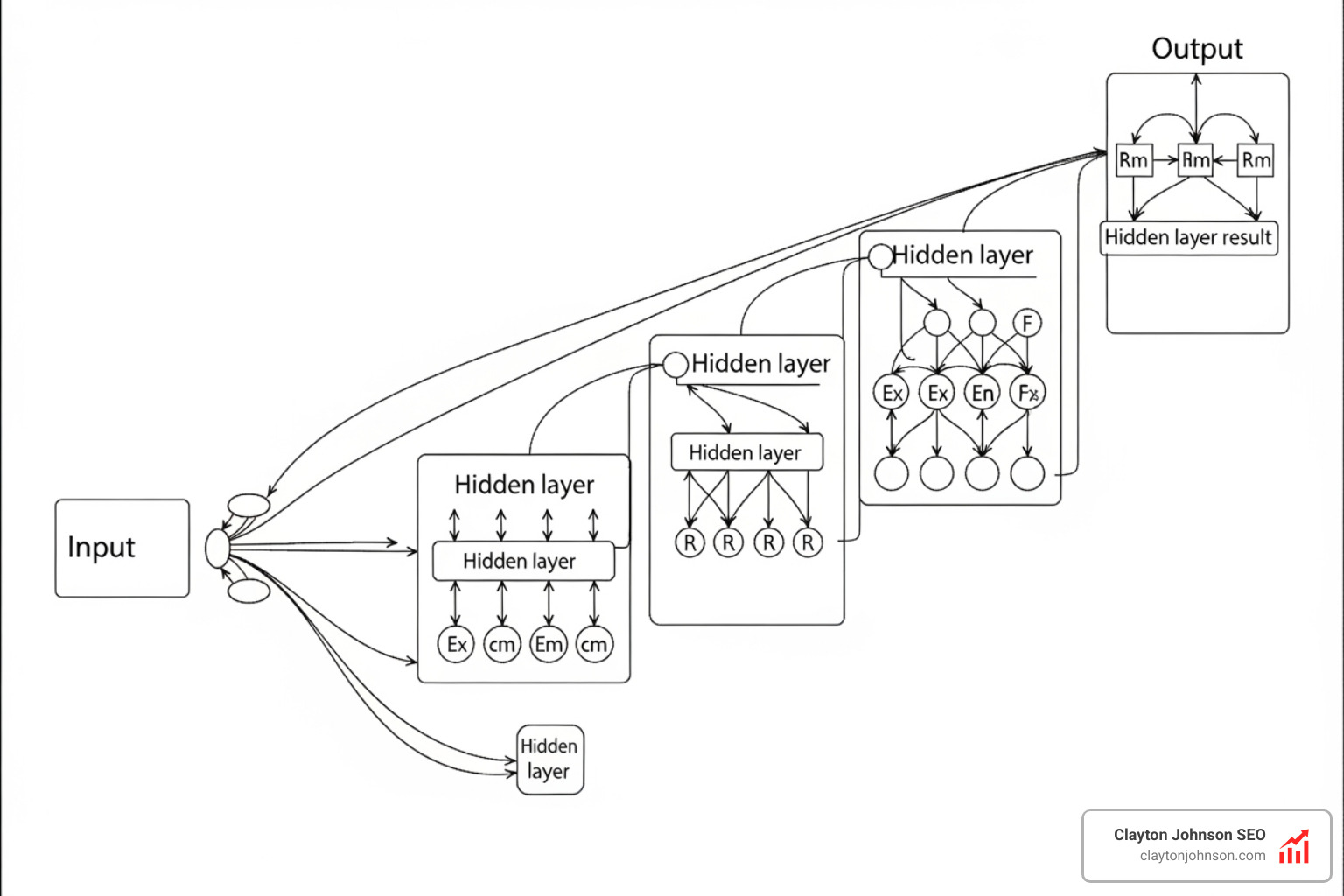

When we interact with an LLM, we aren’t just sending text into a vacuum. We are providing a “roadmap” for the model to follow. To do this effectively, we need to understand a few technical basics that govern how these models “think.”

- Large Language Models (LLMs): These are prediction engines trained on massive datasets. They don’t “know” facts the way a person does; they predict the next most likely chunk of data based on the patterns they’ve learned.

- Tokens: LLMs don’t read words; they read “tokens.” A token can be a single character, a part of a word, or a whole word. For example, “summarize” might be one token, while “artificial intelligence” might be two.

- Context Window: This is the model’s “working memory.” It is the total number of tokens a model can consider at one time (including your prompt and the history of the conversation). Context windows vary wildly, from 100,000 tokens to over one million in newer models like GPT-4o.

If you are just getting started, you might find our beginners guide to ai prompt engineering helpful for a deeper dive into these terms. It’s also worth noting that the history of this field goes back further than the ChatGPT boom; foundational scientific research on unsupervised multitask learners showed as early as 2019 that models could perform tasks they weren’t specifically trained for if the prompt was structured correctly.

Defining the Prompt Engineer Role

A proficient prompt engineer isn’t just someone who is “good with words.” It requires a specific blend of skills:

- AI Literacy: Understanding the specific capabilities and limitations of different models.

- Iterative Design: The patience to test, tweak, and refine a prompt through trial and error.

- Model Steering: Knowing how to use specific parameters (like temperature or max tokens) to control the creativity and length of the output.

Understanding the Context Window

Think of the context window as a physical desk. If your desk is cluttered with 10,000 papers, you might lose track of the most important one. In Prompt Engineering, managing this window is crucial. If a prompt is too long, the model may suffer from “middle-loss,” where it forgets instructions placed in the center of the text. This is why we place the most critical instructions at the very beginning or the very end of our messages.

Core Techniques for High-Performance Output

To move beyond simple questions, we use structured techniques that help the AI organize its internal logic.

One of the most powerful discoveries in the field is in-context learning. This is the model’s ability to learn a new task just by looking at the examples you provide in the prompt, without any permanent changes to its underlying architecture.

Mastering Zero-Shot and Few-Shot Prompt Engineering

These are the bread-and-butter techniques of any growth-focused workflow.

- Zero-Shot Prompting: You give the model a task without any examples.

- Example: “Classify this email as ‘Spam’ or ‘Not Spam’.”

- Few-Shot Prompting: You provide a handful of examples (usually 3 to 5) to show the model exactly what you want.

- Example: “Translate English to French.

Input: house -> Output: maison

Input: cat -> Output: chat

Input: dog -> Output: [Model fills in ‘chien’]”

- Example: “Translate English to French.

Research shows that few-shot learning is a massive accuracy booster. In fact, scientific research on few-shot learners highlights how scaling these examples can make models competitive with specialized systems. To learn more about setting the right “vibe” for these examples, check out the ultimate guide to gpt ai persona prompts.

Chain-of-Thought and Reasoning Models

Sometimes, the AI needs to “show its work.” Chain-of-Thought (CoT) prompting involves asking the model to think through a problem step-by-step. By simply adding the phrase “Let’s think step by step,” you can often solve complex math or logic problems that would otherwise fail.

Newer “reasoning models” (like OpenAI’s o1 series) have this logic built-in. They generate internal hidden tokens to analyze the prompt before they ever start writing a response. While these models are slower and often more expensive, they excel at multi-step planning and complex code generation. For a deep dive into how to guide these logical processes, see the ultimate claude chain-of-thought tutorial and the latest research on reasoning in LLMs.

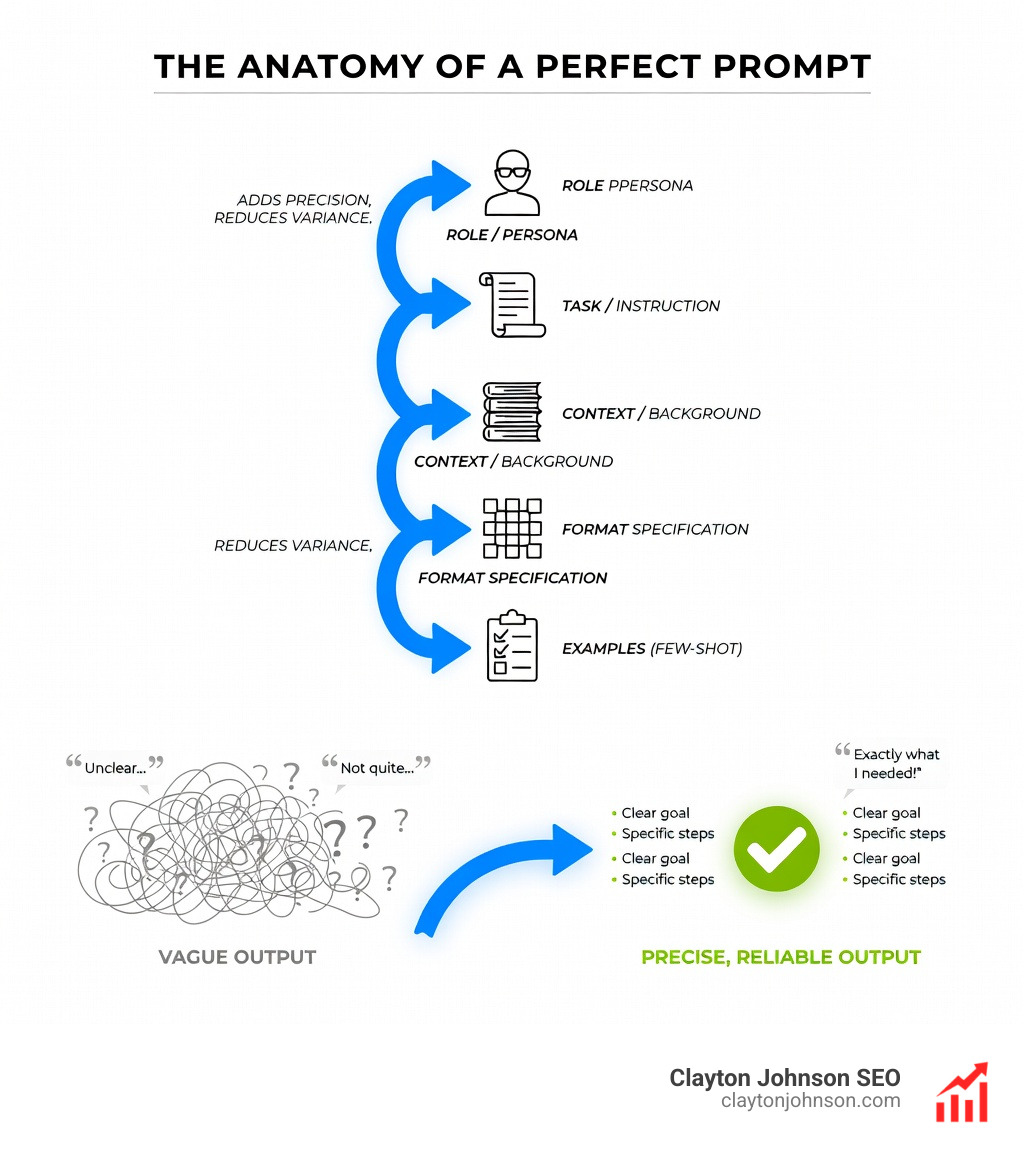

Structuring Prompts for Maximum Precision

How you format your message is just as important as what you say. We find that using clear hierarchies helps the model understand which parts of the text are instructions and which parts are data.

| Model Type | Best For | Prompting Strategy |

|---|---|---|

| GPT Models (e.g., GPT-4o) | Creative writing, speed, simple tasks | Precise, explicit instructions |

| Reasoning Models (e.g., o1) | Math, logic, complex coding, strategy | High-level goals and constraints |

Using Message Roles

When using an API or advanced interface, we assign “roles” to different parts of the conversation:

- Developer/System Role: This sets the high-level rules (e.g., “You are a senior SEO analyst. Always use Markdown.”).

- User Role: This is the specific request or data provided by the person.

- Assistant Role: This is the model’s previous responses, which help maintain conversation state.

Using Markdown and XML Tags

We use Markdown and XML tags to create “logical boundaries.” This prevents the model from getting confused between your instructions and the text you want it to process.

- Markdown: Use headers (#) and lists (-) to organize your thoughts.

- XML Tags: Use tags like

For developers, this is essential for coding with Claude and other high-end models.

Authority and System Instructions

Think of the developer message as a “contract.” It has a higher level of authority than the user message. If you tell the model in the system prompt “Never mention competitors,” it is much harder for a user to trick it into doing so later. This is where you set the tone, goals, and safety parameters for the entire interaction.

Advanced Architectures and Emerging Trends

The field is moving fast. We are shifting from “chatting” with a bot to building complex “agentic” systems.

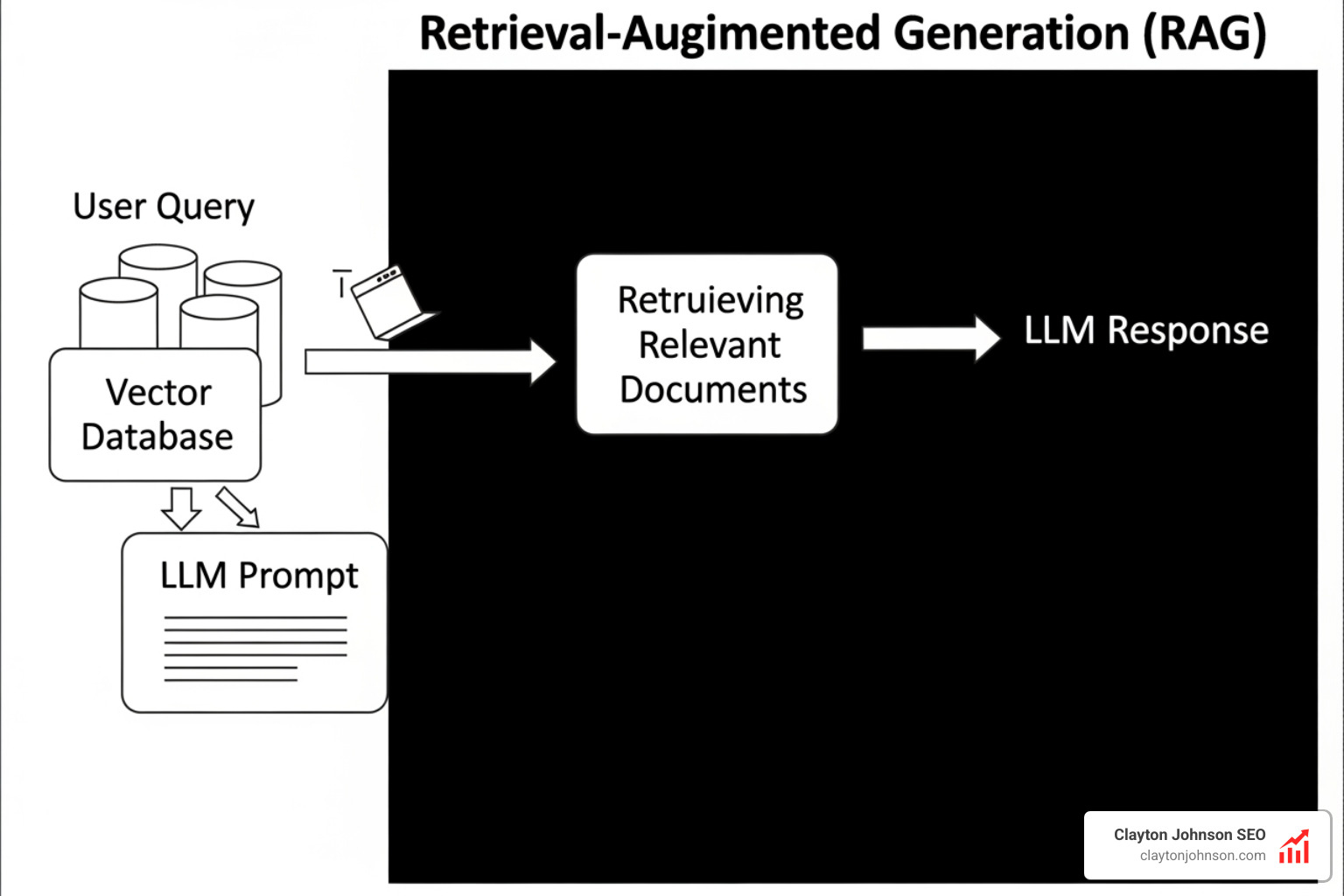

Retrieval-Augmented Generation (RAG) and GraphRAG

One of the biggest limitations of LLMs is that their knowledge is frozen in time. RAG solves this by letting the model “look up” information from your own documents or a live database before it answers.

GraphRAG takes this a step further by using knowledge graphs to understand the relationships between different pieces of data. This allows for much deeper discovery in complex narrative data. You can explore more about these research findings on GraphRAG discovery through official channels.

Multimodal and Text-to-Image Prompting

Prompt Engineering isn’t just for text. In tools like Midjourney or DALL-E, we use descriptive language to control lighting, style, and composition. A common technique is “personalized rewriting,” where an LLM takes a simple user idea and expands it into a highly detailed image prompt.

Security Risks and Prompt Injection

As we build more powerful tools, we have to talk about security. Prompt injection occurs when a user provides input that “overwrites” the model’s original instructions. This can lead to “jailbreaking” (making the AI say things it shouldn’t) or data exfiltration. Robust Prompt Engineering includes defensive layers to filter out these adversarial attacks.

Frequently Asked Questions about Prompt Engineering

What are the key skills required to become a proficient prompt engineer?

It’s a mix of technical and soft skills. You need domain expertise (if you’re prompting for code, you need to understand coding principles), linguistic precision (the ability to choose exactly the right word), and an analytical mindset to test and measure which prompts actually perform better.

How does prompt engineering help prevent AI hallucinations?

Hallucinations happen when the model tries to fill in gaps in its knowledge with “likely” but false information. We prevent this through grounding. By providing the model with the correct context (via RAG) and telling it, “If you don’t know the answer based on this text, say you don’t know,” we drastically reduce errors.

Is prompt engineering still relevant as AI models improve?

Yes. While models are getting “smarter” and better at following vague instructions, the need for steerability in complex business workflows is only growing. As we move toward structured growth systems, we need precise “hooks” to connect AI models to our data and tools.

Conclusion

At Clayton Johnson, we believe that clarity leads to structure, and structure leads to leverage. Prompt Engineering is the ultimate leverage tool in a modern business environment. It is the bridge between raw AI potential and the structured growth architecture your company needs to scale.

Whether you are automating email outreach, building a custom search-intent engine, or designing a competitive positioning model, how you talk to the machine determines your speed.

Stop yelling at your AI. Start engineering it.

Work with me and let’s build the growth infrastructure of the future together.