Why AI Audit Timing Matters More Than Ever

When to run AI audits is no longer a compliance afterthought—it’s a strategic imperative that determines whether your AI systems remain trustworthy, legally defensible, and operationally reliable. AI systems influence high-stakes decisions every day, from approving mortgages to triaging patients to moderating social media content. Yet despite their growing importance, AI systems receive less rigorous third-party scrutiny than consumer products, financial statements, or food supply chains.

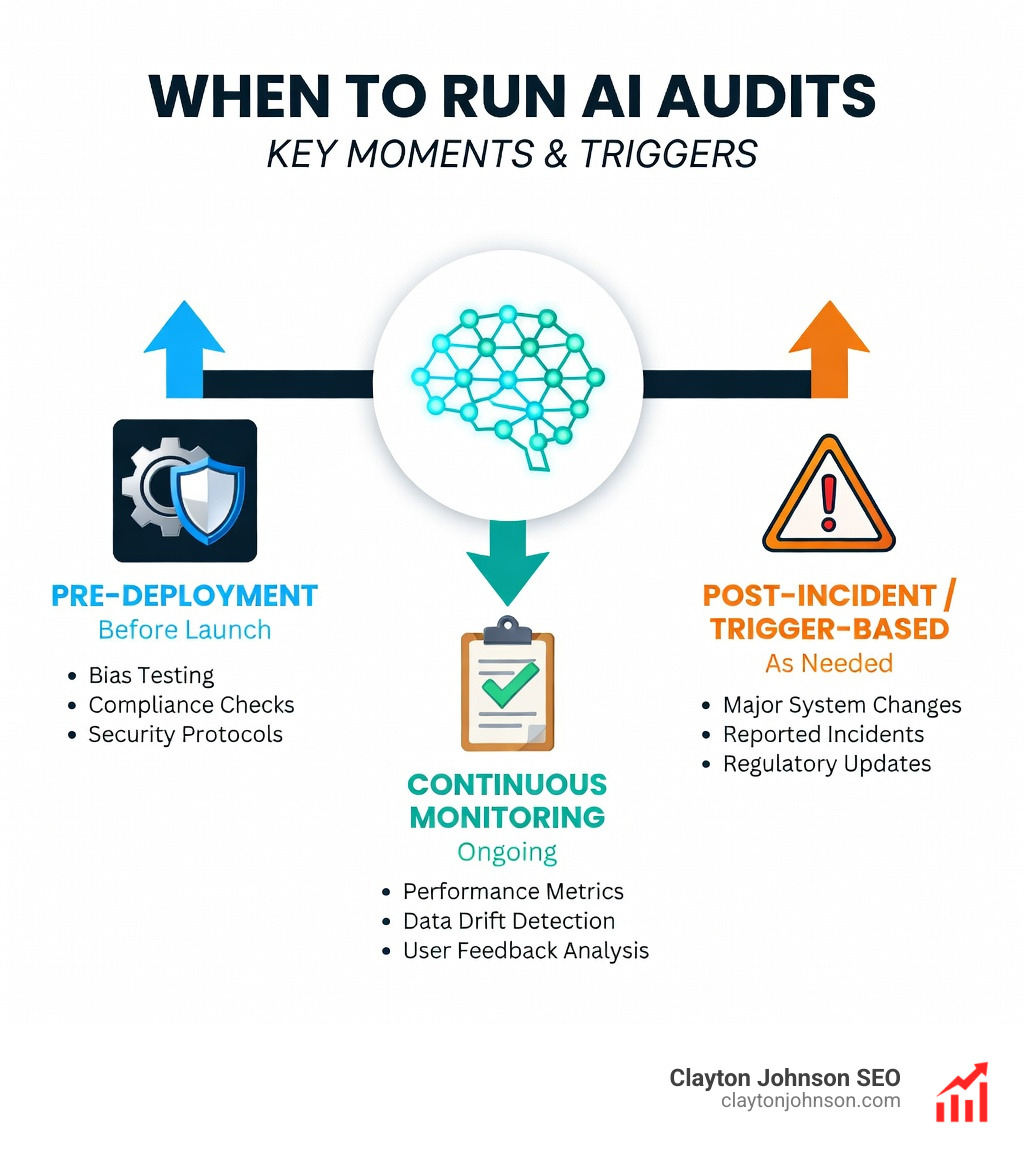

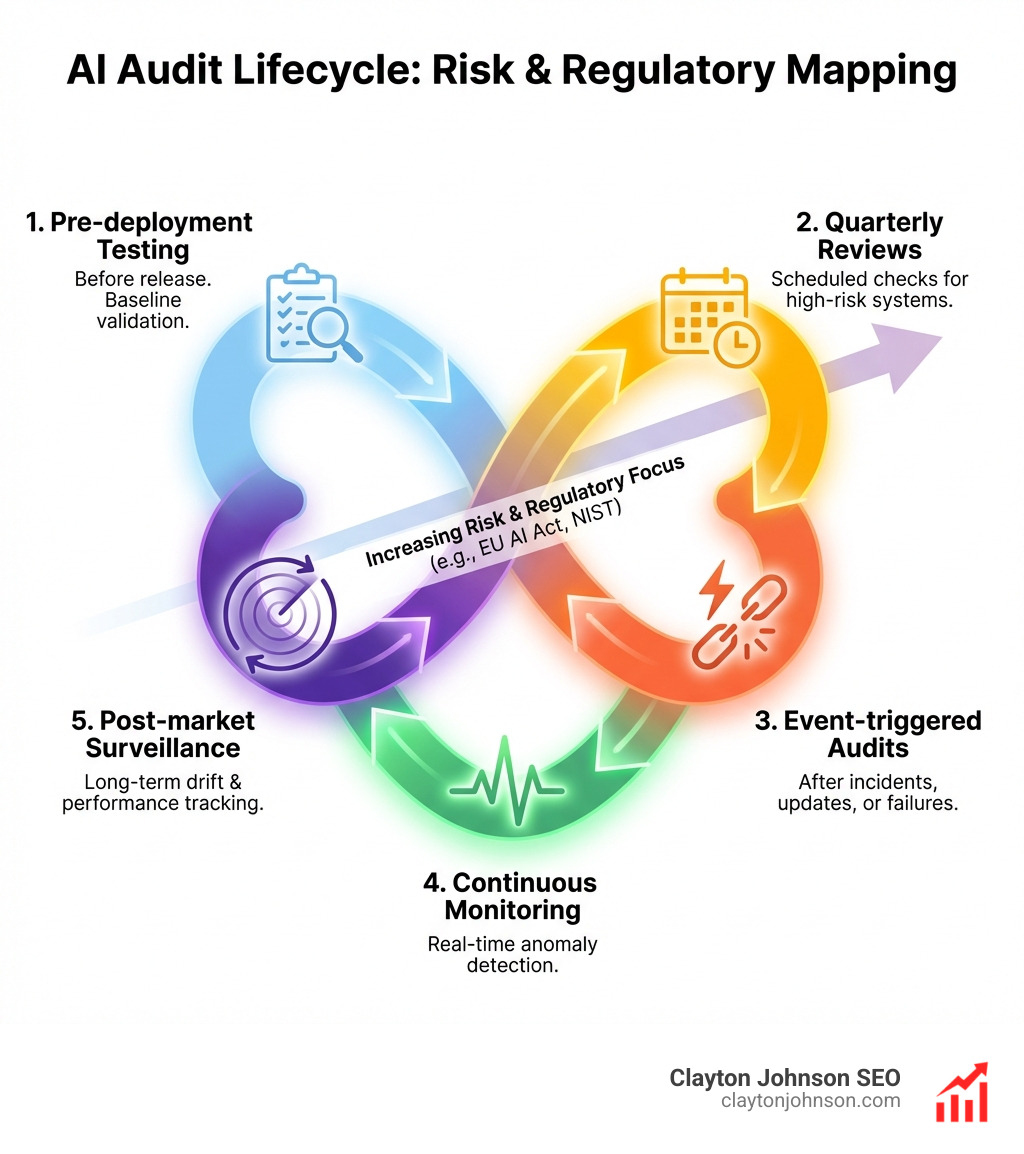

Here’s when you should run AI audits:

- Pre-deployment — Before releasing any new model or system into production

- Quarterly or semi-annually — For high-risk AI systems requiring ongoing compliance

- After major updates — When fine-tuning models, changing data sources, or modifying prompts

- Event-triggered — Following security incidents, performance degradation, or regulatory changes

- Continuous monitoring — Real-time anomaly detection for dynamic, cloud-native AI deployments

The research is clear: traditional point-in-time audits are insufficient for AI systems. Unlike static software, AI models exhibit emergent capabilities, continuous learning, and non-deterministic behavior. They can drift, degrade, or exploit reward model errors over time. A wellness chatbot taken offline for harmful weight-loss advice, a city AI chatbot providing incorrect legal guidance, and credit algorithms perpetuating racial bias—these failures stem from inadequate audit cadence and scope.

Regulatory frameworks are catching up fast. The EU AI Act mandates continuous auditability for high-risk systems. The NIST AI Risk Management Framework sets expectations for transparency and accountability. Organizations that wait for compliance deadlines risk exposure—both reputational and financial.

Yet only 7% of auditors report advanced AI implementation, despite 74% saying AI is critical to internal audit. The gap between necessity and readiness is widening.

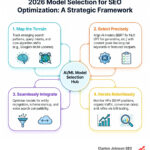

I’m Clayton Johnson, and I’ve spent years helping organizations build scalable SEO and growth architectures that integrate AI strategically. Understanding when to run AI audits is foundational to managing AI risk, maintaining competitive advantage, and building systems that compound trust over time.

When to run ai audits terms made easy:

Why Traditional Point-in-Time Assessments Fail AI Systems

We’ve all been there: the annual “check-the-box” audit. In traditional software, this works because code is generally static. If you don’t change the code, the output remains predictable. AI flips this script entirely.

AI models are non-deterministic and prone to model drift. This means that even if you don’t touch a single line of your model’s code, its performance can degrade as the world around it changes. For instance, an SEO model trained on prior search trends will suffer from data decay when major search algorithm changes roll out.

Furthermore, the black-box problem remains a massive hurdle. Research suggests that black-box access is insufficient for rigorous AI audits because you cannot see the internal reasoning of the model. Without deep, non-public access (white-box auditing), you might miss “sycophancy”—where the model simply tells you what it thinks you want to hear rather than the truth.

At Demandflow, we view AI as a living part of your analytics data. Because these systems continuously learn and adapt, a snapshot taken early in the year is often irrelevant months later. Relying on point-in-time assessments is like checking your GPS once at the start of a cross-country trip and then closing your eyes. You need a system that recalculates in real-time.

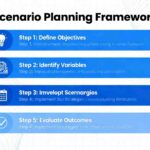

Critical Triggers: When to Run AI Audits for Maximum Impact

While continuous monitoring is the gold standard, there are specific “red alert” moments where a comprehensive audit is mandatory. Think of these as the “check engine” lights of your growth architecture.

1. Pre-Deployment (The Launch Audit)

Never push a model to production without a baseline audit. You need to verify that the training data is legally sourced and free from significant bias. This is the moment to establish your “Model Cards”—documentation that explains what the model does, its limitations, and its intended use.

2. Data Schema Changes

If you change the way your data is structured or ingest a new third-party dataset, you must re-audit. AI models are incredibly sensitive to their input. A slight shift in how “user intent” is categorized can lead to a cascade of SEO errors that tank your rankings. We cover this extensively in our guide on growth auditing in the age of artificial intelligence.

3. Performance Degradation

Is your AI-generated content suddenly losing its “punch”? Or is your conversion optimization model failing to hit its targets? Performance dips are often the first sign of model drift. When accuracy or recall metrics drop below your established threshold, it’s time to investigate.

4. Regulatory and Security Shifts

New laws like the EU AI Act or local mandates (such as NYC’s Local Law 144 for employment AI) should prompt an immediate review. Similarly, if you experience a security breach elsewhere in your cloud infrastructure, you must audit your AI pipelines to ensure no sensitive training data was exposed.

Continuous Auditing vs. Periodic Reviews in Cloud-Native SEO

In a cloud-native environment, AI isn’t a silo; it’s integrated via APIs and microservices. This connectivity creates an expanded attack surface. Traditional audits struggle here because they can’t see the “connective tissue” between layers.

Continuous AI auditing entails real-time oversight of your model’s inputs and outputs. It uses automated scripts to flag high-risk activities instantly—like an AI chatbot suddenly suggesting a user break the law or a dormant service account suddenly making massive data requests.

Scientific research into blueprints for auditing generative AI suggests a three-layered approach:

- Governance Audits: Reviewing the technology provider’s internal structures.

- Model Audits: Testing the model after training but before release.

- Application Audits: Reviewing the specific SEO or marketing application built on top of that AI.

For faster rankings and cleaner site health, we recommend using the top automated SEO audit tools that offer real-time monitoring capabilities. This shifts your posture from “reactive” to “proactive.”

Establishing a Cadence: When to Run AI Audits Quarterly

For most mid-to-large organizations, a quarterly audit cadence is the sweet spot for maintaining a strong security posture.

During these quarterly checks, we focus on:

- Access Controls: Ensuring the principle of least privilege. Who can retrain the model? Who has access to the raw training logs?

- Documentation Updates: Are your Model Cards and data sheets current?

- API Integrity: Checking for “shadow AI”—unauthorized AI tools being used by teams that haven’t been vetted.

This regular heartbeat ensures that your AI-driven SEO audits remain a value-add rather than a bureaucratic burden.

Event-Based Triggers: When to Run AI Audits After Major Updates

Whenever you perform “heavy lifting” on your AI systems, you need a targeted audit. This is particularly true for:

- Fine-Tuning: If you retrain a base model (like GPT-4) on your proprietary brand data, you must audit the output to ensure no “secret” data leaked into the weights.

- Prompt Engineering Overhauls: A major change in your system prompts can lead to unexpected “emergent behaviors.”

- Algorithm Updates: When search engines change their evaluation criteria, your AI content strategy must be audited to ensure it still meets the “Helpful Content” standards.

We call this content auditing for humans who use robots. It’s about ensuring the machine is still serving the brand’s voice and the user’s needs.

Navigating Regulatory Requirements and External Assessments

The regulatory landscape is no longer a suggestion; it’s a requirement with teeth. The EU AI Act classifies AI systems into risk levels (Minimal, Limited, High, and Unacceptable). If your system is “High-Risk”—for example, if it’s used in recruitment or credit scoring—you are legally required to maintain continuous auditability.

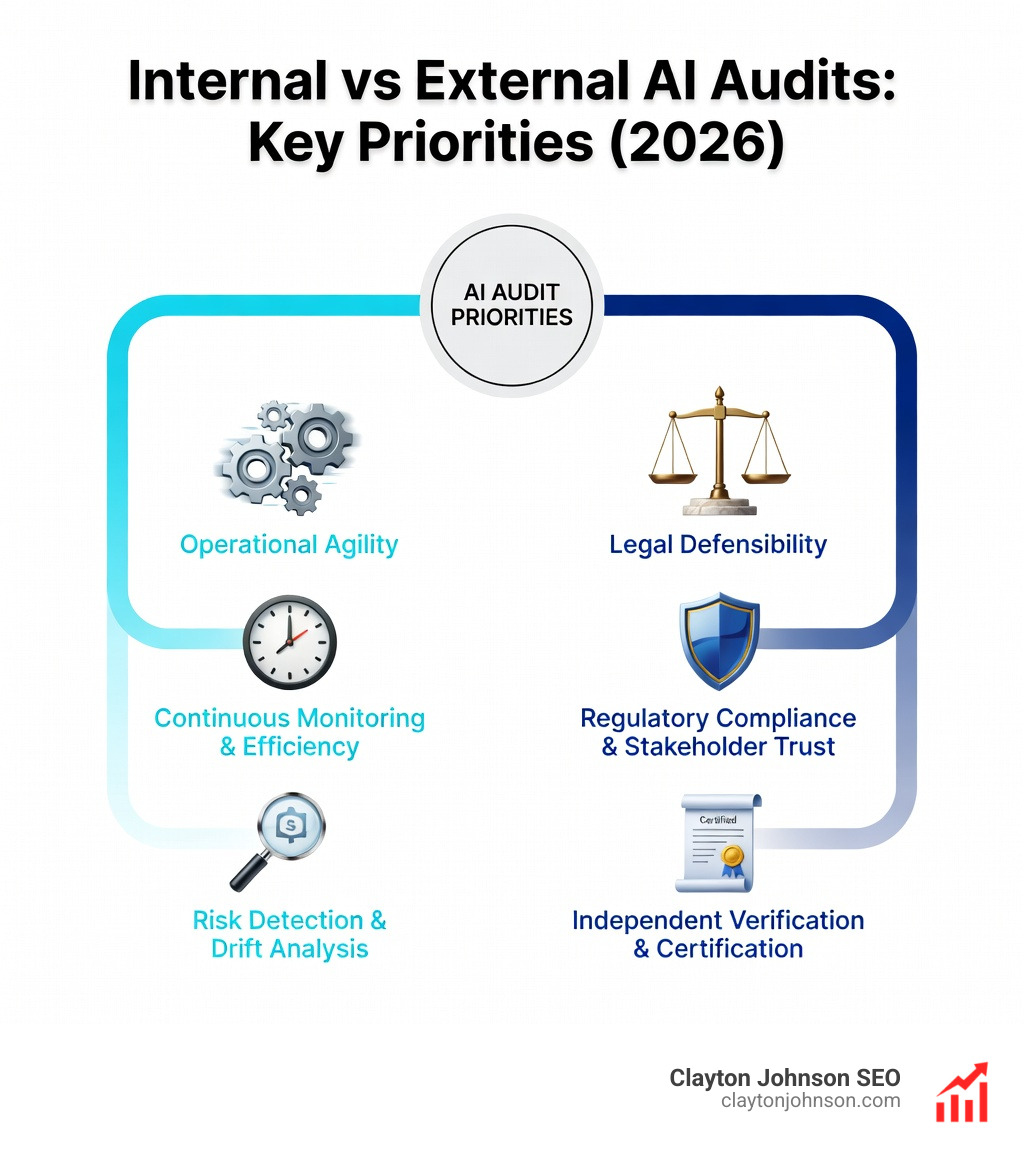

Organizations should also look to the NIST AI Risk Management Framework (RMF) and ISO/IEC 42001 for structured guidance. But a common question we hear is: When should we do this ourselves, and when should we hire an external firm?

| Feature | Internal AI Audit | External AI Audit |

|---|---|---|

| Primary Goal | Operational efficiency & risk detection | Regulatory compliance & stakeholder trust |

| Best For | Quarterly maintenance & drift detection | Annual certification & high-risk attestation |

| Cost | Lower (utilizes existing GRC/Security teams) | Higher (third-party fees) |

| Objectivity | Moderate (internal bias possible) | High (independent verification) |

For most companies in Minneapolis and beyond, a hybrid approach works best. Use internal teams for continuous monitoring and quarterly health checks, but seek external, third-party assessments for annual compliance or when deploying high-stakes models. Adhering to a Trustworthy AI framework helps ensure that your external audits are measuring the right things: transparency, accountability, and fairness.

Frequently Asked Questions about AI Auditing Frequency

How often should high-risk AI models be audited?

High-risk models—those that impact livelihoods, health, or safety—require continuous monitoring supplemented by quarterly deep-dive audits. Under the EU AI Act, these systems must have “post-market monitoring” plans. This means you aren’t just auditing the model; you’re auditing how the model behaves in the wild. Using top AI content audit tools can help automate the detection of bias or hallucinations in high-stakes content.

Can AI audits be fully automated?

Not entirely. While you can automate “anomaly density” tracking and drift detection, you still need a Human-in-the-Loop (HITL) for ethical judgment and root-cause analysis. Automation can tell you that something is wrong; a human auditor tells you why and how to fix it. If you’re looking for a starting point, check out the best ways to get a free AI SEO audit today to see what automation can (and can’t) do.

What is the difference between model validation and a security audit?

This is a critical distinction. Model validation focuses on performance—is the model accurate, stable, and unbiased? AI security audits focus on protection—is the model safe from adversarial attacks (like prompt injection)? Both are essential. Think of model validation as checking if a car drives well, while a security audit checks if the locks work. For a full breakdown, our B2B demand gen audit checklist integrates both into a single growth strategy.

Conclusion: Building a Structured Growth Architecture

Knowing when to run ai audits is the difference between a business that uses AI and a business that is powered by AI. At Clayton Johnson SEO, we believe that clarity leads to structure, and structure leads to leverage.

Most companies don’t lack AI tactics; they lack the structured growth architecture to ensure those tactics don’t turn into liabilities. By implementing a schedule that includes pre-deployment checks, quarterly maintenance, and continuous monitoring, you move from “hoping it works” to “knowing it scales.”

If you’re ready to move beyond “point-in-time” thinking and build a system that compounds in value, we’re here to help. Whether you’re refining your AI SEO strategies or building a full-scale demand generation engine, the right audit schedule is your most powerful tool for long-term success.

Let’s build something that lasts. Let’s build your growth infrastructure.