What Is Generative AI? A Plain-English Answer

“What is generative AI?” is one of the most searched technology questions right now, and for good reason. The technology is reshaping how businesses create content, write code, and build products.

Here’s the short answer:

Generative AI is a type of artificial intelligence that creates new content — text, images, audio, video, and code — by learning patterns from large datasets.

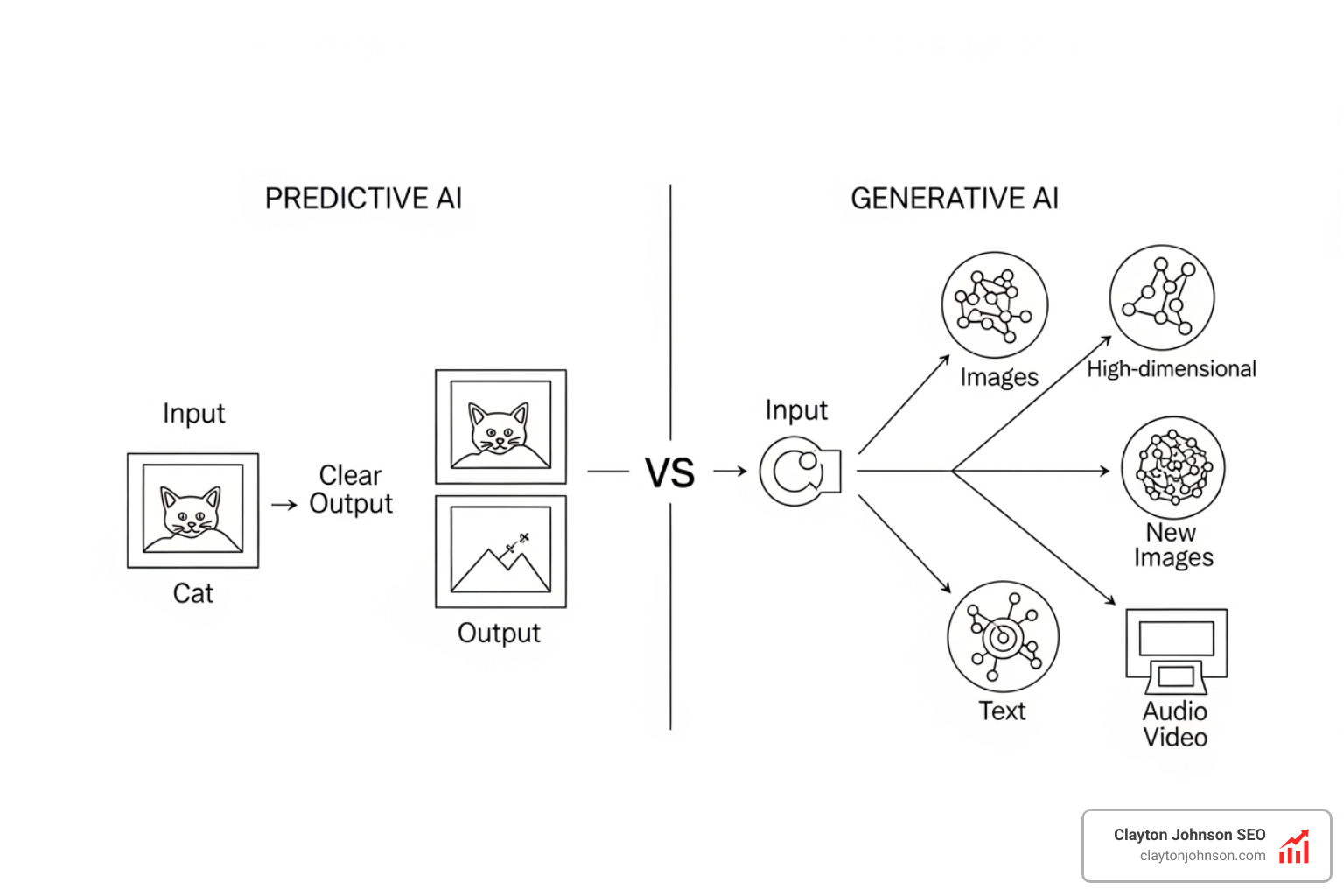

Unlike traditional AI, which analyzes or classifies existing data, generative AI produces something new in response to a prompt.

| Feature | Traditional AI | Generative AI |
|---|---|---|
| Primary function | Classify or predict | Create new content |
| Output type | Labels, scores, decisions | Text, images, audio, video |
| Learning type | Supervised | Self-supervised |
| Example use | Fraud detection | Writing a blog post |
| Data role | Input for analysis | Blueprint for generation |

The scale of impact is hard to overstate. Generative AI applications are projected to add up to $4.4 trillion to the global economy annually. AI adoption has more than doubled over the past five years. This is not a trend — it’s infrastructure.

What makes generative AI different from a simple search or autocomplete? It doesn’t retrieve existing information. It generates statistically probable new content based on patterns it learned during training. Think of it as a system that has read billions of documents and learned to write, draw, and speak — on demand.

The technology runs on foundation models: large deep-learning models trained on massive datasets. GPT-3, for example, drew on roughly 45 terabytes of raw text data, which was filtered down before training. These models form the backbone of tools like ChatGPT, DALL-E, Midjourney, and hundreds of enterprise applications.

I’m Clayton Johnson, an SEO strategist and growth operator who works at the intersection of AI-augmented marketing systems and structured content architecture, including how to use tools built on generative AI to drive compounding business growth. In this guide, I’ll break down how generative AI works, what it can and can’t do, and how founders and marketing leaders can use it as a strategic asset rather than a novelty.

What is Generative AI and How Does It Differ from Traditional AI?

To understand the shift we are seeing in the marketplace, we have to look at the fundamental difference between “finding” and “creating.” Traditional AI systems are largely discriminative. If you feed a traditional model thousands of photos of cats and dogs, it learns to recognize the patterns that distinguish them. When you show it a new photo, it can tell you with high confidence, “This is a cat.” It is excellent at pattern recognition, classification, and prediction.

Generative AI takes this a step further. Instead of just recognizing a cat, it learns the underlying distribution of what makes a cat look like a cat — the shape of the ears, the texture of the fur, the positioning of the eyes. Once it understands these features, it can synthesize entirely novel content. It doesn’t just identify the cat; it draws a cat that has never existed before.

This transition from perception to creation is what makes this technology so disruptive. Traditional AI helps us organize the world; generative AI helps us build it. For founders and marketing leaders, this means moving from systems that simply analyze SEO data to systems that can help architect an entire authority-building ecosystem.

Learn more about how generative AI functions to see how these models move beyond simple algorithms to create sophisticated, human-like outputs.

The Core Mechanics of Generative AI

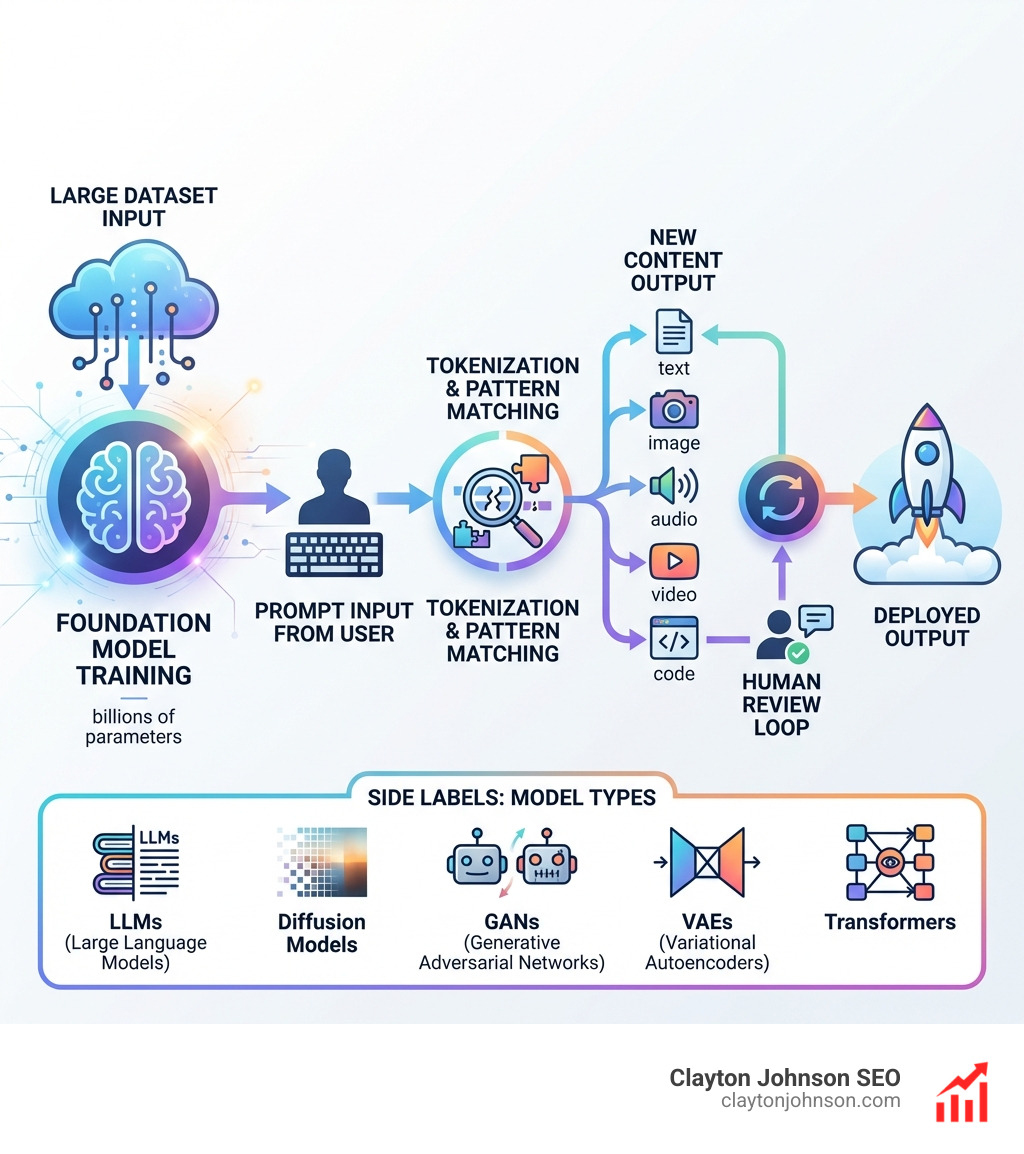

At the heart of this technology are foundation models. These are deep learning models trained on vast, unstructured datasets. Think of a foundation model as a versatile base that can be adapted for many different tasks.

Large Language Models (LLMs) are the most famous type of foundation model. They use neural networks — computational structures inspired by the human brain — to process and synthesize data. When we ask an LLM a question, it isn’t “thinking” in the human sense. Instead, it is performing a massive “fill in the blank” exercise. It looks at the words in your prompt and calculates the statistical probability of which word (or “token”) should come next based on the trillions of patterns it saw during training.

This process of data synthesis allows the model to produce coherent, contextually relevant responses. However, because it relies on statistical probability rather than a database of facts, it can sometimes produce “hallucinations” — statements that sound perfectly confident but are factually incorrect.
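The next-token idea described above can be illustrated with a deliberately tiny model. This is a minimal sketch, not how production LLMs are built: it counts which word follows which in a made-up corpus (`CORPUS` and all function names here are hypothetical), turns those counts into probabilities, and samples one token at a time. Real models replace the counting table with a neural network over billions of parameters, but the generate-by-probability loop is the same in spirit.

```python
import random
from collections import Counter, defaultdict

# Tiny illustrative corpus (hypothetical); real models train on vastly more text.
CORPUS = "the cat sat on the mat . the dog sat on the rug . the cat saw the dog ."


def train_bigram(text):
    """Count how often each token follows each other token."""
    tokens = text.split()
    counts = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        counts[current][nxt] += 1
    return counts


def next_token_probs(counts, token):
    """Turn raw follow-counts into a probability distribution."""
    followers = counts[token]
    total = sum(followers.values())
    return {tok: n / total for tok, n in followers.items()}


def generate(counts, start, length, seed=0):
    """Sample one token at a time, each conditioned on the previous token."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length):
        probs = next_token_probs(counts, out[-1])
        if not probs:  # no observed follower: stop early
            break
        choices, weights = zip(*probs.items())
        out.append(rng.choices(choices, weights=weights, k=1)[0])
    return " ".join(out)


counts = train_bigram(CORPUS)
print(next_token_probs(counts, "the"))  # probabilities for what follows "the"
print(generate(counts, "the", 8))       # a short sampled sequence
```

Because the output is sampled from probabilities rather than looked up, the model can produce a sentence that never appeared in the corpus. That is also a small-scale picture of why fluent but incorrect output is possible.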

Key Capabilities of Generative AI

The versatility of these models allows them to operate across multiple modalities:

- Text Generation: From drafting emails and blog posts to writing complex software code. Models can mimic specific tones, summarize long documents, and even write poetry or jokes.

- Image Synthesis: Tools like DALL-E and Midjourney can create photorealistic images, digital art, and technical diagrams from simple text descriptions.

- Audio Creation: Generative AI can synthesize realistic human speech, clone voices for narration, and even compose original music across various genres.

- Video Production: Emerging models are now capable of generating short video clips or animations from text prompts, significantly lowering the barrier to entry for high-quality visual storytelling.

Main Types of Generative Models and Their Architecture

Not all generative AI is built the same way. Different architectures are suited for different types of content creation. Understanding these is key to knowing which tool to use for a specific business objective.

- Diffusion Models: These are the powerhouse behind modern image generation. They work by taking an image and adding “noise” (random pixels) until it is unrecognizable. The model then learns to reverse this process, starting from pure noise and “denoising” it to create a clear, high-resolution image that matches your prompt.

- Generative Adversarial Networks (GANs): A GAN consists of two neural networks — a “generator” and a “discriminator” — that compete against each other. The generator tries to create fake data, while the discriminator tries to spot the fake. As they battle, the generator becomes incredibly skilled at creating realistic outputs, such as high-quality deepfakes or synthetic medical images.

- Variational Autoencoders (VAEs): These models compress input data into a compact “latent space” and then learn to reconstruct it. They are often used for data augmentation and cleaning up noisy datasets.

- Transformers: This is the architecture that changed everything. Introduced by researchers at Google, the transformer uses a “self-attention mechanism.”

The self-attention mechanism allows the model to weigh the importance of different parts of an input sequence differently. When reading a sentence, the model doesn’t just look at words in order; it understands how a word at the beginning of a paragraph relates to a word at the end. This contextual awareness is why LLMs like ChatGPT feel so much more human than the chatbots of the past.
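To make the self-attention idea concrete, here is a rough sketch in plain Python. It assumes hypothetical two-dimensional embeddings for three words and skips the learned query, key, and value projections that real transformers use, so treat it as an illustration of the weighting step only. Each token scores every token in the sequence, the scores are normalized with softmax, and the output is a weighted blend of the inputs.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections.

    Simplification: real transformers first multiply each vector by
    learned query, key, and value matrices.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this token against every token in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Blend all token vectors according to the attention weights.
        mixed = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical embeddings, chosen by hand for illustration.
tokens = ["bank", "river", "money"]
embeddings = [[1.0, 1.0], [1.0, 0.0], [0.0, 1.0]]

outputs, weights = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

In this toy setup, "river" places less weight on "money" than on "bank" because their vectors share no direction. In a trained model, those relationships are learned from data rather than set by hand.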

Scientific research on generative AI provides a deeper look into how these architectures are evolving to handle increasingly complex data structures.

Training Foundation Models and LLMs

Building a foundation model is a massive undertaking. It requires three main ingredients: massive amounts of data, thousands of high-performance GPUs, and millions of dollars in investment.

- Data Scraping and Preparation: Models are fed terabytes of text, images, and code scraped from the open internet. This data is often raw and unstructured.

- Tokenization: The model breaks down the data into smaller chunks called tokens. In text, a token might be a word or a part of a word.

- Self-Supervised Learning: The model trains itself by hiding parts of the data and trying to predict what’s missing. If it gets it wrong, it adjusts its internal “parameters” — the mathematical weights that determine how information flows through the neural network.

- Fine-Tuning: Once the base model is trained, it often undergoes Reinforcement Learning from Human Feedback (RLHF). Human reviewers rank the model’s responses, teaching it to be more helpful, accurate, and safe.
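The tokenization and self-supervised steps above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the tokenizer splits on whole words and punctuation (production tokenizers usually split into subword pieces), and the function names are hypothetical. The point is to show how training examples come from the text itself, with no human labeling.

```python
import re


def tokenize(text):
    """Split text into lowercase word and punctuation tokens.

    Simplification: production tokenizers usually use subword pieces.
    """
    return re.findall(r"[a-z0-9]+|[^\sa-z0-9]", text.lower())


def build_vocab(tokens):
    """Assign each distinct token an integer ID, in order of first appearance."""
    vocab = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


def next_token_examples(ids, context_size):
    """Create (context, target) pairs by hiding the token after each window.

    The target comes from the text itself, which is what makes the
    setup self-supervised.
    """
    return [
        (ids[i:i + context_size], ids[i + context_size])
        for i in range(len(ids) - context_size)
    ]


text = "The model predicts the next token."
tokens = tokenize(text)
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]
examples = next_token_examples(ids, context_size=3)

print(tokens)
print(ids)
for context, target in examples:
    print(context, "->", target)
```

During training, a model would see each context, guess the target, and adjust its parameters whenever the guess is wrong. That adjustment step is omitted here.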

Latest research on foundation models highlights both the incredible opportunities and the systemic risks associated with training these massive systems.

Popular Tools and Examples

We are currently seeing an explosion of consumer and enterprise tools built on these architectures:

- ChatGPT (OpenAI): The most widely used text generator, capable of everything from conversational chat to complex reasoning.

- Claude (Anthropic): Known for its long context window and focus on safety and constitutional AI.

- Google Gemini: A multimodal model integrated into the Google ecosystem, capable of processing text, images, and video simultaneously.

- Microsoft Copilot: An AI assistant integrated directly into productivity software like Word and Excel, powered by OpenAI’s GPT-4.

- Midjourney: A high-end image generator favored by designers for its artistic style and high-resolution output.

Real-World Applications and Industry Impact

Generative AI is no longer just a playground for tech enthusiasts; it is being integrated into the core operations of major industries. We are seeing a shift from general-purpose tools to specialized applications that solve high-value problems.

Industry Applications of Generative AI

In Healthcare, generative models are accelerating drug discovery by designing novel molecular structures that could lead to new treatments. They are also used to generate synthetic radiology images to train diagnostic models without compromising patient privacy.

In Software Development, tools like GitHub Copilot and Cursor are transforming the workflow of engineers. Instead of writing every line of code from scratch, developers can use AI to generate boilerplate code, suggest bug fixes, and explain complex legacy systems. This doesn’t just save time; it changes the economics of building software.

In Entertainment, generative AI is being used for everything from scriptwriting and music composition to sophisticated video editing. While this has sparked significant labor disputes, it also allows independent creators to produce high-fidelity content that was previously only possible for major studios.

In Education, we are seeing the rise of personalized learning. AI can create custom study aids, quizzes, and even act as a 24/7 tutor that adapts its teaching style to the individual student’s needs.

Research on AI in healthcare details the specific pathways for implementing these models in clinical settings responsibly.

Economic and Global Adoption Trends

The economic potential is staggering. Beyond the $4.4 trillion annual contribution to the global economy, we are seeing a massive surge in innovation. For example, entities in certain regions have filed over 38,000 generative AI patents in recent years, signaling a global race for dominance in this space.

Adoption rates are also climbing. Surveys show that in some markets, over 80% of respondents are already using generative AI in their daily lives or work, significantly higher than the global average. This widespread adoption is driving a productivity boom, with some workers reporting saving nearly 3% of their total work time just by using basic chatbots.

Ethical Considerations, Risks, and Legal Frameworks

With great power comes significant responsibility, and risk. As we integrate generative AI into our businesses and lives, we must navigate a complex landscape of ethical and legal challenges.

- Misinformation and Disinformation: Because these models can generate highly convincing text and media, they can be weaponized to spread false information at scale.

- Algorithmic Bias: If the training data contains human biases (racial, gender, or cultural), the AI will reflect and often amplify those biases in its output.

- Privacy Concerns: Many models were trained on data scraped from the internet, raising questions about whether personal or sensitive information was included without consent.

- Environmental Impact: Training and running these massive models requires enormous amounts of electricity and water for cooling data centers. Some estimates suggest the carbon footprint of the AI industry could rival major industrial sectors in the coming years.

Intellectual Property and Academic Integrity

One of the fiercest battlegrounds is Copyright Law. Currently, the U.S. Copyright Office has ruled that works created entirely by AI without human input cannot be copyrighted. This creates a “gray area” for businesses using AI to create marketing materials or products.

There are also ongoing lawsuits from publishers and artists who argue that their copyrighted work was used to train these models without compensation. Research on AI and copyright law provides an overview of how courts are beginning to address these novel legal questions.

In academia, the concern is Academic Integrity. As students use tools like ChatGPT to write essays, educators are having to rethink how they assess knowledge. Detection tools exist, but they are often unreliable and can produce false positives, leading to a shift toward more interactive and oral assessments.

Mitigating Risks and Regulatory Oversight

Governments are moving quickly to create guardrails. The EU AI Act is one of the first comprehensive regulatory frameworks, requiring companies to disclose copyrighted material used in training and to label AI-generated content.

For organizations, mitigation strategies include:

- Human-in-the-loop: Ensuring every AI-generated output is reviewed by a human before it is used or published.

- Digital Watermarking: Using technology to embed invisible markers in AI-generated images and video to identify their origin.

- Safety Protocols: Implementing strict internal policies on what data can be fed into AI prompts to prevent the leakage of proprietary information.
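As one example of a safety protocol, a team might scrub obviously sensitive strings from a prompt before it leaves the building. The sketch below is a starting point only, and everything in it is an assumption: the patterns, the `sk-` key format, and the function name are hypothetical, and regular expressions will miss plenty of real-world cases. A real policy would be defined by your own security and legal teams.

```python
import re

# Hypothetical patterns for illustration; a real policy would be broader
# and defined by the organization.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),  # assumed key format
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_prompt(prompt):
    """Replace matches with placeholder tags.

    Returns the redacted text and the list of labels that were found.
    """
    found = []
    for label, pattern in REDACTION_PATTERNS.items():
        prompt, hits = pattern.subn(f"[{label}]", prompt)
        if hits:
            found.append(label)
    return prompt, found


raw = "Email jane.doe@example.com or call 555-123-4567 about key sk-abcdef1234567890XYZ."
clean, found = redact_prompt(raw)
print(clean)
print(found)
```

A check like this works best alongside human review, not instead of it. The list of labels it returns can also be logged to show which kinds of data people are trying to paste into prompts.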

Frequently Asked Questions about Generative AI

Is generative AI the same as machine learning?

Not exactly. Machine learning is a broad field of AI where computers learn from data. Generative AI is a specific subset of machine learning. While traditional machine learning focuses on analyzing and predicting, generative AI focuses on creating new content. All generative AI is machine learning, but not all machine learning is generative.

Can generative AI replace human jobs?

This is a nuanced issue. While generative AI can automate certain tasks — like writing basic code or generating simple copy — it is best viewed as a tool for human augmentation. It handles the “grunt work,” allowing humans to focus on high-level strategy, creative direction, and complex problem-solving. In our experience at Demandflow, AI doesn’t replace the strategist; it gives the strategist a superpower.

What are the hardware requirements for generative AI?

Running the largest models “locally” (on your own hardware) requires significant power, typically high-end GPUs with large amounts of VRAM, although smaller open models can run on a single consumer GPU. However, most users and businesses access these models through cloud infrastructure provided by companies like OpenAI, Microsoft, and Google, which removes the need for expensive local hardware.

Conclusion: Building for the Future with Structured Growth

Generative AI is not a magic wand that you wave to solve all your business problems. It is a powerful engine, but an engine is useless without a chassis and a map.

At Demandflow.ai, our thesis is simple: Clarity → Structure → Leverage → Compounding Growth.

Most companies don’t lack AI tactics; they lack structured growth architecture. We don’t just help you use generative AI to write more content. We help you build authority-building ecosystems and AI-augmented marketing workflows that turn information into an asset.

Whether it’s through our taxonomy-driven SEO systems, competitive positioning models, or interactive diagnostic tools, our goal is to provide the infrastructure founders and marketing leaders need to scale. Generative AI is the leverage; Demandflow is the structure.

The future belongs to those who can bridge the gap between the raw power of generative AI and the disciplined execution of a clear business strategy. By focusing on SEO strategy and content architecture that is human-led and AI-enhanced, we can create systems that don’t just generate noise; they generate results.

Ready to move beyond one-off tactics and start building a growth system that compounds? Start by mapping your workflows, testing small AI-assisted experiments, and turning what works into repeatable processes.