What Is an AI Ecosystem? And Why Infrastructure Is the Real Story

“What is an AI ecosystem?” is one of those questions that sounds simple but reveals a much bigger picture once you dig in.

Here is the short answer:

An AI ecosystem is the full network of companies, governments, universities, and users that work together to build, run, and apply artificial intelligence — from the chips inside data centers all the way to the apps on your phone.

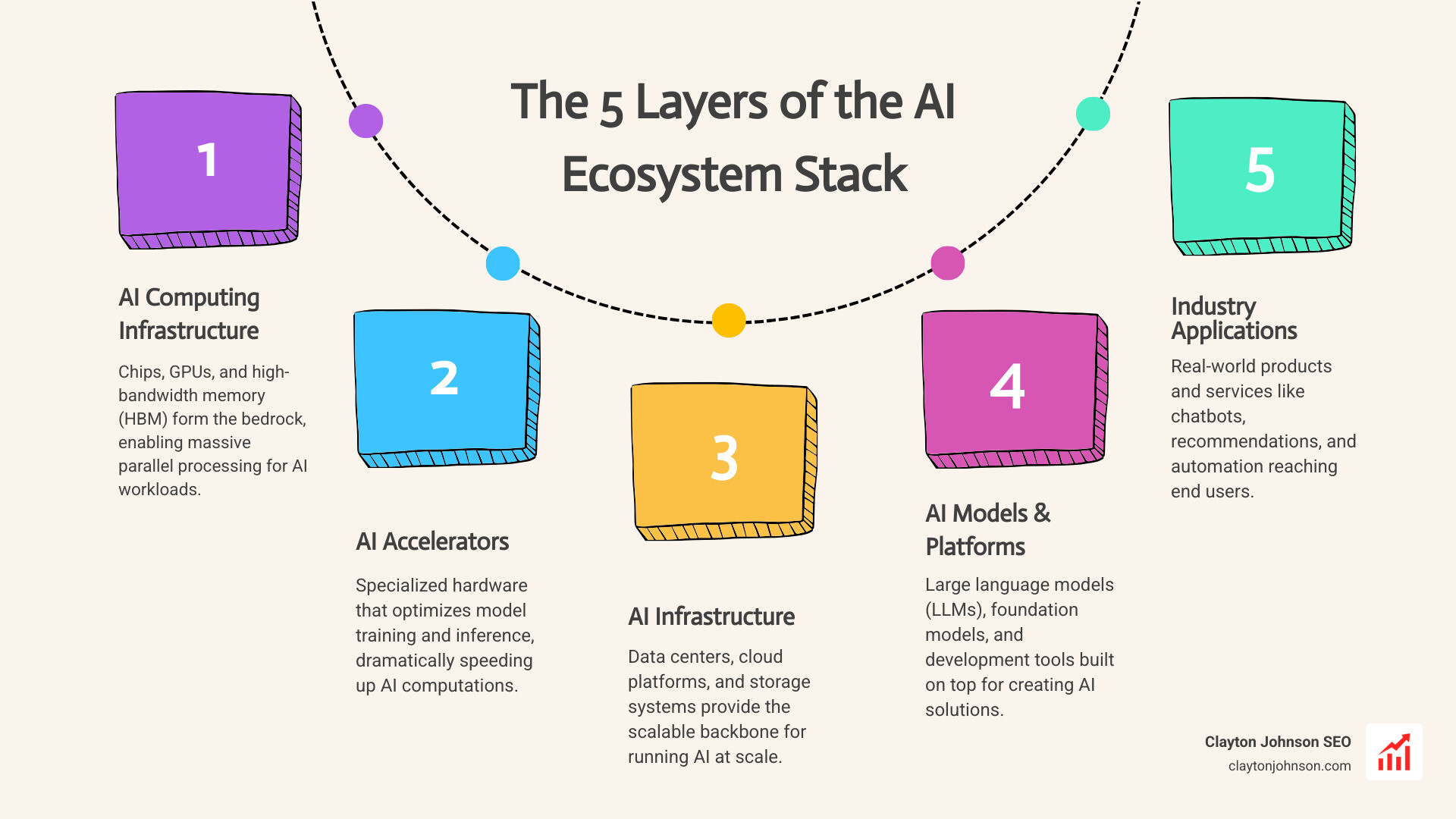

The core layers, at a glance:

- AI Computing Infrastructure — chips, GPUs, and high-bandwidth memory (HBM) that power everything

- AI Accelerators — specialized hardware that speeds up model training and inference

- AI Infrastructure — data centers, cloud platforms, and storage systems

- AI Models and Platforms — large language models, foundation models, and the tools built on top of them

- Industry Applications — the real-world products and services that reach end users

These layers are not isolated. Each one depends on the others. Remove any single layer and the whole system stalls.

Today, roughly one in six people worldwide uses generative AI tools — and that number is climbing fast. Behind every prompt, recommendation, and automated decision sits a complex stack of hardware, software, data pipelines, and policy frameworks that most people never see.

That invisible infrastructure is exactly what this guide breaks down.

I’m Clayton Johnson, an SEO strategist and growth architect who maps complex systems, including the question of what an AI ecosystem is, into structured frameworks that founders and marketing leaders can actually act on. If you are building AI-assisted workflows or trying to understand where your infrastructure decisions fit inside a larger stack, this guide gives you the strategic clarity to do that.

Understanding the AI Ecosystem and Its Core Layers

When we talk about an AI ecosystem, we are describing more than just a piece of software like a chatbot. We are describing a “digital city.” In a city, you need power plants (hardware), roads (data pipelines), zoning laws (regulations), and residents (users). If the power plant fails, the residents can’t turn on their lights.

In the AI world, this ecosystem is a symbiotic network. It includes the massive tech giants providing cloud compute, the nimble startups building niche models, the academic institutions pushing the boundaries of research, and the governments setting the rules of the road.

At its heart, the ecosystem functions through constant interaction. A researcher at a university might develop a new algorithm; a hardware company builds a chip to run it faster; a cloud provider hosts that chip; a developer uses it to build a medical diagnostic tool; and a doctor uses that tool to save a life. This interconnectedness is why the ecosystem is so resilient—and so complex.

Historical evolution of the AI stack

The path to our current state wasn’t a straight line. It was more like a series of “starts and stops” known as AI winters.

- The Early Days and AI Winters: The concept of AI was born in the mid-twentieth century, but early progress hit a wall. Computing power was too expensive and data was scarce, leading to periods (notably in the 1970s and late 1980s) when funding and interest dried up.

- Machine Learning Resurgence: In the late twentieth century, we saw a revival through machine learning. Instead of trying to program every rule, we started teaching computers to recognize patterns in data.

- Deep Learning Revival: A major turning point occurred when figures like Geoffrey Hinton (often called the “godfather of AI”) helped revive interest in neural networks. This “Deep Learning” era allowed computers to process unstructured data like images and speech with incredible accuracy.

- The GPU Explosion: In the early 2010s, researchers realized that hardware designed for video games, the Graphics Processing Unit (GPU), was well suited to the massive parallel matrix math that deep learning requires. This was the match that lit the fire.

- The Generative AI Shift: Today, we have moved into the era of foundation models. These are massive models trained on broad swaths of public internet data, capable of generating text, code, and art. Many teams no longer build AI from scratch; they adapt (“fine-tune”) these giants for specific tasks.

The 5 Layers of the AI Infrastructure Stack

To truly grasp what an AI ecosystem is, we have to look at it as a vertical stack. Each layer provides the foundation for the one above it.

| Layer | Primary Function | Key Components |
|---|---|---|
| Industry Applications | Solves specific user problems | Medical tools, self-driving cars, SEO systems |
| AI Models & Platforms | The “intelligence” layer | LLMs, foundation models, APIs |
| AI Infrastructure | The “delivery” layer | Cloud platforms, data centers, storage |
| AI Accelerators | The “speed” layer | Specialized AI chips, NPUs, TPUs |
| AI Computing Infrastructure | The “physical” layer | GPUs, high-bandwidth memory (HBM) |
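
To make the “remove any layer and the system stalls” point concrete, here is a minimal Python sketch that treats the five layers from the table as a strictly ordered stack. It rests on a simplifying assumption (each layer depends only on the layers beneath it), and the names `STACK` and `operational_layers` are hypothetical, not part of any real tool.

```python
# The five layers, ordered bottom-up (simplified model of the stack).
STACK = [
    "AI Computing Infrastructure",
    "AI Accelerators",
    "AI Infrastructure",
    "AI Models & Platforms",
    "Industry Applications",
]


def operational_layers(failed: set[str]) -> list[str]:
    """Return the layers that can still run, walking up from the bottom.

    Simplifying assumption: a layer works only if it has not failed
    and every layer beneath it works.
    """
    working: list[str] = []
    for layer in STACK:
        if layer in failed:
            break  # every layer above a failure loses its foundation
        working.append(layer)
    return working


print(operational_layers(set()))                # all five layers
print(operational_layers({"AI Accelerators"}))  # ['AI Computing Infrastructure']
```

Real stacks are messier (a cloud platform can fall back to CPUs, for example), but the sketch captures why an outage low in the stack reaches the applications users actually see.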

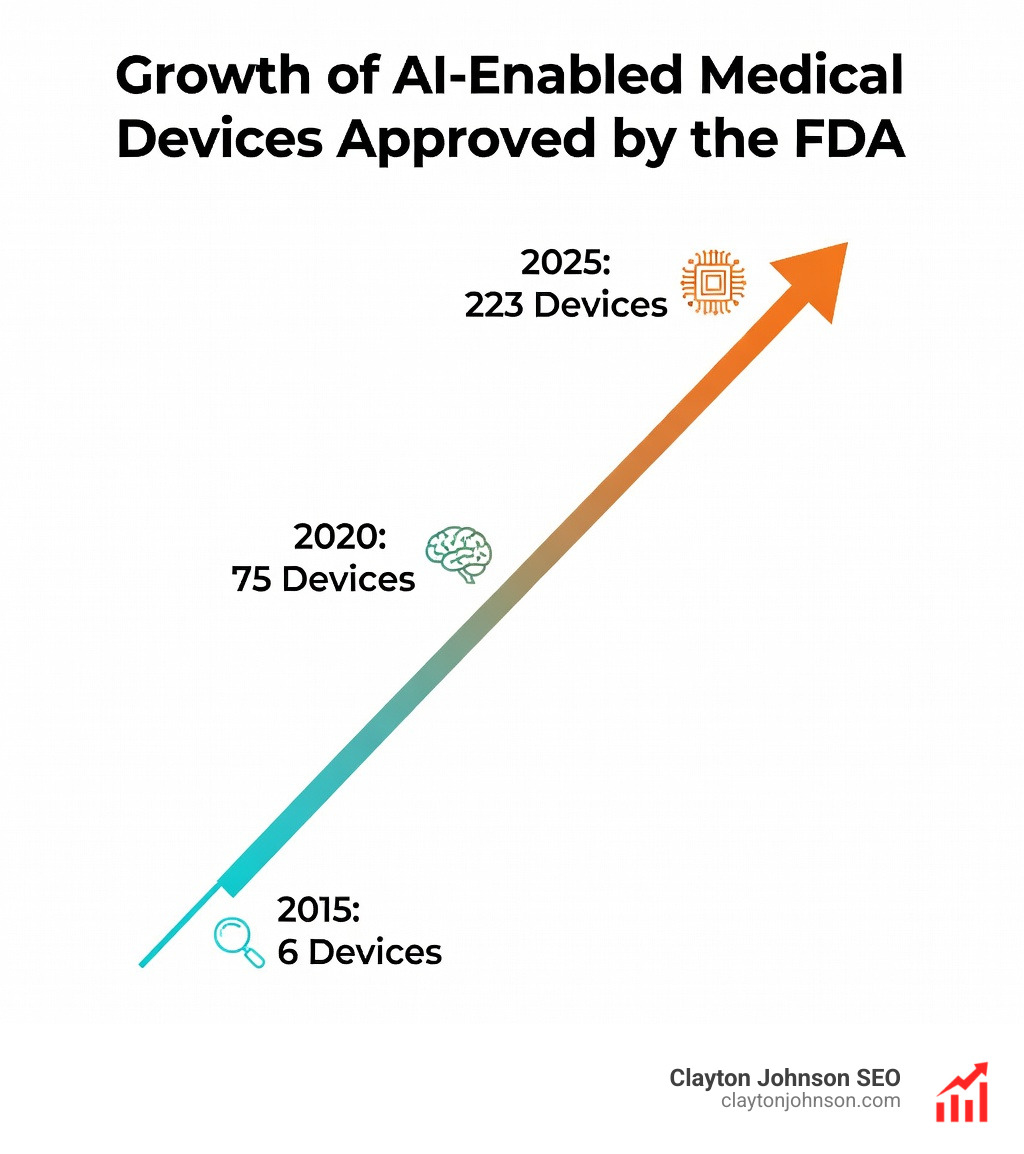

1. Industry Applications

This is the “face” of the ecosystem. It is where AI meets the real world. For example, the BMW Group data delivery solution uses AI and cloud computing to improve data efficiency tenfold in vehicle development. From precision agriculture to cancer screening, this layer is about taking raw intelligence and making it useful.

2. AI Models and Platforms

These are the “brains.” Foundation models like GPT-4 or Claude are massive, pre-trained systems that can be adapted for thousands of different uses. Instead of spending months training a model, a developer can now use an API to “plug in” intelligence.

3. AI Infrastructure

Running these models requires massive scale. This layer includes the data centers and cloud platforms (like AWS, Azure, or Google Cloud) that host the models. Deployment guidance sits alongside it as well; the Guide on the use of generative artificial intelligence, for example, helps organizations manage how these tools are deployed safely.

4. AI Accelerators

General-purpose chips (CPUs) are too slow to train and serve large modern models efficiently. Accelerators are specialized hardware designed to perform the core “math” of neural networks, vast numbers of matrix multiply-and-add operations, in parallel. They act as the turbocharger for the AI engine.
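
Most of that “math” is multiply-accumulate work. The sketch below, written in plain Python for readability, computes one fully connected layer, y = ReLU(Wx + b), and counts the multiply-accumulates it requires. The function names are illustrative; real frameworks hand this work to optimized kernels running on GPUs or other accelerators.

```python
def dense_relu(weights: list[list[float]], inputs: list[float], bias: list[float]) -> list[float]:
    """Forward pass of one fully connected layer: y = ReLU(W x + b)."""
    outputs = []
    for row, b in zip(weights, bias):
        # Each output neuron is a dot product: one multiply-accumulate per weight.
        total = b + sum(w * x for w, x in zip(row, inputs))
        outputs.append(max(0.0, total))  # ReLU keeps positives, zeroes negatives
    return outputs


def multiply_accumulates(n_outputs: int, n_inputs: int) -> int:
    """Multiply-accumulate operations for one input vector through a dense layer."""
    return n_outputs * n_inputs


print(dense_relu([[1.0, 2.0], [-3.0, 1.0]], [1.0, 1.0], [0.0, 0.0]))  # [3.0, 0.0]
print(multiply_accumulates(4096, 4096))  # 16777216
```

A single 4,096 × 4,096 layer already needs about 16.8 million multiply-accumulates per input, and large models chain many such layers. Hardware that performs thousands of these operations at once is what turns that workload from impractical into routine.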

5. AI Computing Infrastructure

At the very bottom are the physical components. This is the bedrock. Without high-end GPUs and specialized memory, the entire stack collapses. This layer is currently seeing the most intense global competition.

Key players in the AI ecosystem

The ecosystem is dominated by a few “hyperscalers” and hardware giants, but it is supported by a massive web of researchers.

- NVIDIA: Currently the king of the hardware layer, providing the GPUs that almost everyone uses to train models.

- Microsoft & OpenAI: A powerhouse partnership that has defined the generative AI era through the Azure cloud and GPT models.

- Google Cloud & AWS: The “landlords” of the internet, providing the vast majority of the compute power needed to run AI at scale.

- Research Institutes: Organizations like CIFAR play a critical role in talent development through their research initiatives. In fact, the first phase of the Pan-Canadian AI Strategy was launched under CIFAR’s leadership to strengthen the talent base.

Hardware Foundations: Why GPUs and HBM are the Bedrock

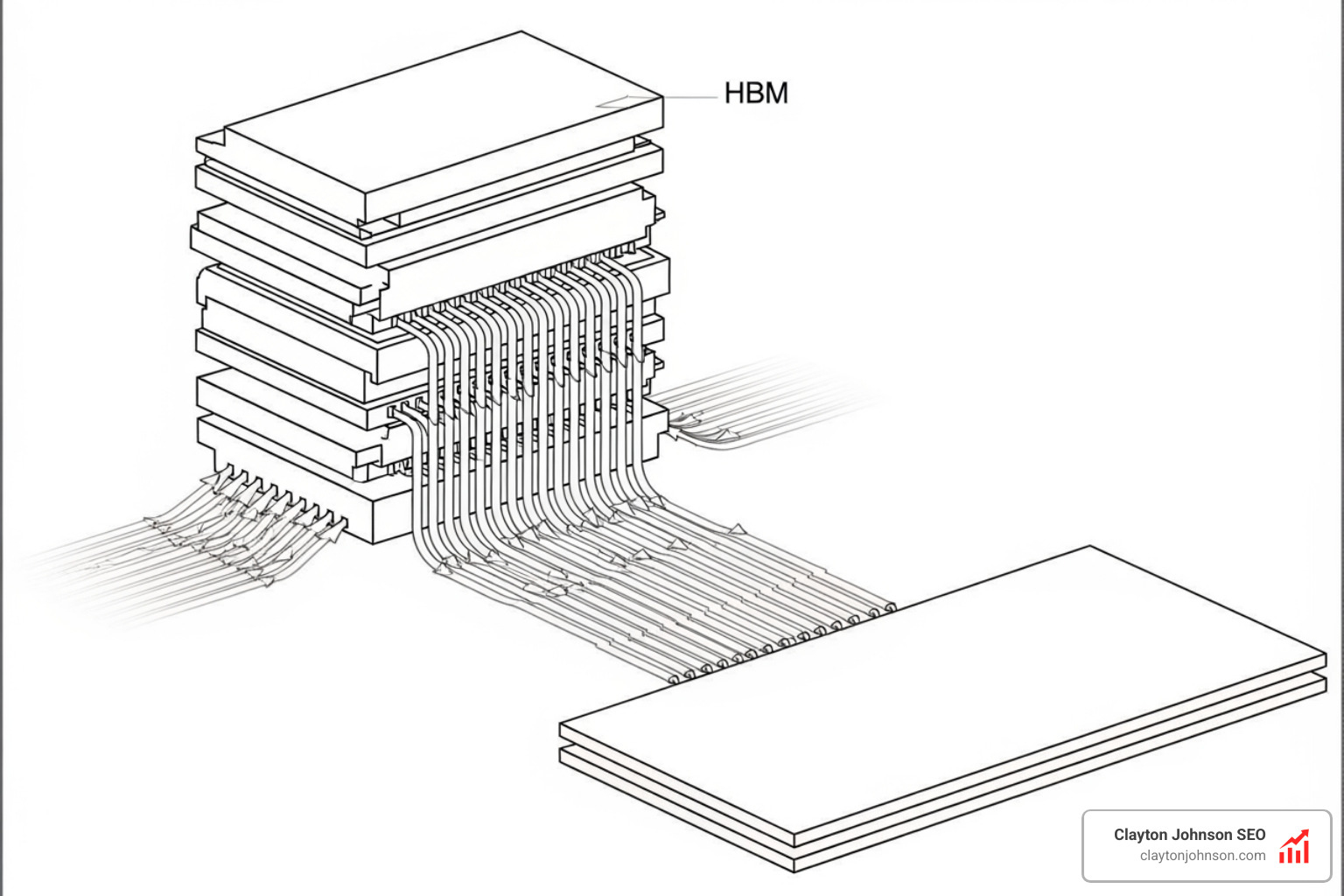

If AI is the “brain,” then GPUs are the “neurons” and HBM is the “bloodstream.”

Standard memory is often too slow to keep up with the lightning-fast processing of a GPU, which creates a bottleneck. To solve this, the industry developed High-Bandwidth Memory (HBM). HBM stacks memory chips vertically, like a skyscraper, and sits right next to the processor with a very wide connection (1,024 bits per stack in HBM3), so data travels much shorter distances over far more parallel lanes.
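
Much of HBM’s advantage comes from interface width. The sketch below applies the standard peak-bandwidth arithmetic (interface width × per-pin data rate ÷ 8 bits per byte). The HBM3 and DDR5 figures are commonly quoted nominal values used here as illustrative assumptions; sustained real-world bandwidth is lower, and newer HBM generations raise these numbers.

```python
def peak_bandwidth_gb_s(interface_bits: int, gbit_per_pin: float) -> float:
    """Peak bandwidth in GB/s = interface width (bits) x per-pin rate (Gb/s) / 8."""
    return interface_bits * gbit_per_pin / 8


# Assumed nominal figures: one HBM3 stack (1,024-bit interface at 6.4 Gb/s per pin)
# versus one DDR5-4800 module (64-bit data path at 4.8 Gb/s per pin).
hbm3_stack = peak_bandwidth_gb_s(1024, 6.4)
ddr5_module = peak_bandwidth_gb_s(64, 4.8)

print(f"HBM3 stack:  {hbm3_stack:.1f} GB/s")            # 819.2 GB/s
print(f"DDR5 module: {ddr5_module:.1f} GB/s")           # 38.4 GB/s
print(f"Ratio:       {hbm3_stack / ddr5_module:.1f}x")  # 21.3x
```

The per-pin speeds are in the same range; the roughly 16× wider interface accounts for most of the gap.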

There is a common saying in the industry: “There is no AI without HBM.”

Leading manufacturers like SK hynix continue to push this technology forward, recently delivering samples of 12-layer HBM4. Hardware innovation of this kind is a large part of what allows a model reported to have over a trillion parameters, such as GPT-4, to generate a response in seconds rather than hours.
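
A back-of-the-envelope calculation shows why memory, not just compute, is the binding constraint. This sketch assumes a hypothetical dense model with one trillion parameters stored at 2 bytes each (FP16/BF16), a hypothetical 36 GB per HBM stack, a nominal 819.2 GB/s per stack, and the simplification that generating each token reads every weight once. Mixture-of-experts designs, batching, and caching all change the real numbers.

```python
import math


def weight_bytes(n_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold a model's weights."""
    return n_params * bytes_per_param


def stacks_needed(total_bytes: float, stack_capacity_gb: float) -> int:
    """HBM stacks required to fit the weights (ignores activations and caches)."""
    return math.ceil(total_bytes / (stack_capacity_gb * 1e9))


def seconds_per_token(total_bytes: float, bandwidth_gb_s: float) -> float:
    """Memory-bound lower bound: a dense model reads every weight once per token."""
    return total_bytes / (bandwidth_gb_s * 1e9)


# Hypothetical inputs only; they do not describe any specific model or product.
weights = weight_bytes(1e12, 2)
stacks = stacks_needed(weights, 36)
print(f"Weights: {weights / 1e12:.1f} TB")                               # 2.0 TB
print(f"HBM stacks to hold them: {stacks}")                              # 56
print(f"Per token at 38.4 GB/s: {seconds_per_token(weights, 38.4):.1f} s")  # 52.1 s
print(f"Per token across {stacks} stacks: "
      f"{seconds_per_token(weights, stacks * 819.2):.3f} s")             # 0.044 s
```

Under these assumptions, a single conventional memory channel would need close to a minute per token, while weights spread across dozens of HBM stacks can be streamed in a few hundredths of a second.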

The role of sovereign compute

As AI becomes central to national security and economic growth, many countries are realizing they cannot rely solely on foreign providers. This has led to the rise of “Sovereign Compute”—the idea that a nation should own and control its own AI infrastructure.

In Canada, this is being addressed through several major initiatives:

- AI Compute Access Fund: Part of a multi-billion-dollar federal commitment to AI compute, this fund helps researchers and startups get the computing power they need to compete globally.

- Canadian AI Sovereign Compute Strategy: A plan to build domestic data centers so that Canadian data stays on Canadian soil.

- Digital Research Alliance of Canada: This organization provides dedicated computing capacity specifically for AI researchers, ensuring that academic progress isn’t slowed down by a lack of hardware.

Global Governance and Industry Adoption

A healthy AI ecosystem requires more than just chips; it needs trust. Without clear rules, businesses are hesitant to invest, and the public is hesitant to use the technology.

Canada has been a global pioneer in this area. The Pan-Canadian AI Strategy was one of the first of its kind, focusing on talent and research in its first phase and shifting toward commercialization and standards in its second.

Key regulatory and collaborative pillars include:

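- Bill C-27 and AIDA: The Artificial Intelligence and Data Act (AIDA), introduced as part of Bill C-27, was among the first proposed national AI regulatory frameworks in the world, designed to ensure AI is developed and used responsibly. The bill died on the Order Paper when Parliament was prorogued in January 2025.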

- International Collaboration: Through the Global Partnership on Artificial Intelligence, nations work together to ensure AI reflects democratic values and human rights.

- Standards Development: AI-related standards development, coordinated through the Standards Council of Canada, helps different AI systems work together safely and efficiently.

Future trends in the AI ecosystem

The ecosystem is far from finished. We are currently seeing several “frontier” trends that will define the next decade:

- Edge AI Adoption: Moving AI away from massive data centers and directly onto devices (like your phone or a factory sensor). This reduces latency and improves privacy.

- Federated Learning: A way to train AI models on private data without that data ever leaving its original location. This is a game-changer for healthcare and finance.

- Sustainability and Energy: AI is power-hungry. We are seeing a massive shift toward energy-efficient hardware and data centers powered by renewable energy. Some companies are even reviving nuclear reactors to meet the demand.

- Quantum Computing: While still in the early stages, quantum computers could eventually handle math problems that are currently impossible for even the best GPUs.
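
Federated learning is easiest to see in code. Below is a toy sketch of federated averaging (the FedAvg approach): each client fits a one-parameter model y ≈ w·x on data that never leaves it, and the server only averages the returned weights, weighted by each client’s sample count. The clients, data, learning rate, and function names are all hypothetical; production systems add secure aggregation, far larger models, and handling for unreliable clients.

```python
# Toy federated averaging: raw (x, y) data stays on each client; only weights travel.
Dataset = list[tuple[float, float]]


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Client side: refine the shared weight on private data (fits y ~ w * x)."""
    for _ in range(epochs):
        # Gradient of mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global: float, clients: list[Dataset]) -> float:
    """Server side: average returned weights, weighted by each client's sample count."""
    total = sum(len(d) for d in clients)
    return sum(local_update(w_global, d) * len(d) for d in clients) / total


# Hypothetical clients, e.g. two hospitals whose records both follow y = 3x.
clients: list[Dataset] = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0), (4.0, 12.0), (5.0, 15.0)],
]

w = 0.0
for _ in range(50):
    w = federated_round(w, clients)
print(round(w, 3))  # 3.0
```

The server never sees a single (x, y) pair, yet the shared model converges to the slope both clients’ data share.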

Frequently Asked Questions about AI Infrastructure

What are the biggest challenges in the AI ecosystem?

The most immediate challenge is the infrastructure shortage. There simply aren’t enough GPUs to go around, leading to long wait times for startups. Energy consumption is another massive hurdle; by some widely cited estimates, training a single large model can emit as much carbon as several cars do over their entire lifetimes. Finally, ethical bias remains a concern, as AI models can inherit the prejudices found in their training data.
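
Energy and carbon figures like these come from straightforward arithmetic. The sketch below estimates the operational emissions of a training run; every input (GPU count, power draw, duration, power usage effectiveness or PUE, and grid carbon intensity) is a hypothetical assumption, and the model counts only GPU power scaled by PUE, ignoring the embodied carbon of the hardware.

```python
def training_energy_kwh(n_gpus: int, gpu_power_kw: float, hours: float, pue: float) -> float:
    """Facility energy: IT load (GPUs x power x hours) scaled by PUE."""
    return n_gpus * gpu_power_kw * hours * pue


def emissions_tonnes(energy_kwh: float, grid_kg_co2e_per_kwh: float) -> float:
    """Operational emissions in tonnes CO2e for a given grid carbon intensity."""
    return energy_kwh * grid_kg_co2e_per_kwh / 1000


# Hypothetical run: 1,000 GPUs at 0.7 kW each for 30 days, PUE 1.2,
# on a grid emitting 0.4 kg CO2e per kWh.
energy = training_energy_kwh(1000, 0.7, 30 * 24, 1.2)
print(f"Energy:    {energy:,.0f} kWh")                            # 604,800 kWh
print(f"Emissions: {emissions_tonnes(energy, 0.4):,.0f} t CO2e")  # 242 t CO2e
```

The grid-intensity term is the lever that renewable-powered data centers pull: the same run on a cleaner grid produces proportionally lower operational emissions.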

How do governments shape AI development?

Governments act as both “accelerators” and “brakes.” They provide funding (including large-scale public investments in national AI strategies) and talent visas to attract the world’s best minds. At the same time, they create regulatory frameworks to protect citizens from deepfakes, misinformation, and privacy violations.

Why is collaboration essential for AI innovation?

The AI stack is too complex for any one company to own. A startup might have a brilliant idea for a model, but they need a cloud provider for compute, a hardware company for chips, and a government framework for legal safety. Open-source tools like PyTorch and TensorFlow allow millions of developers to build on each other’s work, speeding up innovation for everyone.

Conclusion

Understanding the AI ecosystem is the first step in building a sustainable growth strategy. Whether you are a founder looking to integrate AI into your product or a marketing leader trying to optimize your workflows, the infrastructure you choose will dictate your ceiling.

At Demandflow.ai, we believe that clarity leads to structure, and structure leads to leverage. We don’t just look at AI as a series of tactics; we view it as a structured growth architecture. By mapping your SEO strategy and content architecture into an authority-building ecosystem, you create the kind of compounding growth that defines industry leaders.

Most companies don’t lack the tools to succeed—they lack the architecture to scale. Our mission is to provide that framework, combining competitive intelligence with AI-enhanced execution.

Ready to map your own growth architecture?

Build your AI strategy with Clayton Johnson